As artificial intelligence (AI) continues to revolutionize industries, the emergence of generative AI, particularly large language models (LLMs), has opened new possibilities for creating content, automating tasks, and enhancing user interactions. These models rely heavily on prompts — specific inputs that guide the AI to produce desired outputs. This has led to the rise of "PromptOps," a specialized approach to managing the operational aspects of prompt-based AI systems.

PromptOps sits at the intersection of generative AI and DevOps, merging the principles of continuous integration, delivery, and monitoring from DevOps with the unique challenges and opportunities of working with generative models. As organizations increasingly deploy AI systems in production environments, PromptOps offers a structured methodology for optimizing prompt design, version control, real-time monitoring, and automated scaling, ensuring that AI models perform consistently and effectively across various applications.

What is PromptOps?

PromptOps refers to the systematic approach of managing, optimizing, and deploying AI prompts within a DevOps framework. Because prompts are the inputs that most directly shape the output quality of generative AI models, PromptOps integrates them into the DevOps pipeline, allowing for automated testing, version control, and continuous refinement. This approach encourages collaboration between AI developers, researchers, and operators, so that prompts are improved consistently based on performance metrics and feedback. By incorporating automation, monitoring, and compliance checks, PromptOps ensures that prompts are handled with the same care as software code, which leads to more reliable, higher-quality AI outputs.
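To make the idea of handling prompts like software code more concrete, here is a minimal Python sketch. The `PromptTemplate` class, its fields, and the `support_reply` example are hypothetical names chosen for illustration; they are not part of any particular PromptOps tool. The sketch only assumes that a prompt can be stored as a named, versioned text template.

```python
import hashlib
import string
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptTemplate:
    """A prompt treated as a versioned artifact (hypothetical structure)."""

    name: str
    version: str
    template: str  # uses $placeholders understood by string.Template

    def render(self, **variables: str) -> str:
        # substitute() raises KeyError when a placeholder is missing,
        # so an incomplete prompt fails loudly instead of silently.
        return string.Template(self.template).substitute(**variables)

    def fingerprint(self) -> str:
        # Short content hash so logs can record exactly which text was used.
        return hashlib.sha256(self.template.encode("utf-8")).hexdigest()[:12]


# Illustrative prompt definition; the wording is an assumption.
support_v1 = PromptTemplate(
    name="support_reply",
    version="1.0.0",
    template="You are a support agent. Answer the question: $question",
)
prompt_text = support_v1.render(question="How do I reset my password?")
```

Keeping definitions like this in a repository would let each prompt change be reviewed like a code change, and the fingerprint could be logged next to model outputs to trace which prompt text produced them.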

Why does PromptOps matter?

PromptOps matters because it ensures that the prompts used to interact with AI models are managed effectively, which directly affects the quality of the AI's outputs. Here's why it's important:

- Consistency and Quality: Properly managing prompts helps maintain consistent and high-quality outputs from AI models. By refining prompts systematically, you ensure that the AI generates more accurate and relevant results.

- Efficiency: Integrating prompt management into DevOps pipelines allows for automation in testing and deploying prompts. This speeds up the process of improving AI performance and reduces manual effort.

- Collaboration: It fosters better collaboration between AI developers and operators. By treating prompts like code, teams can work together more effectively to optimize and troubleshoot AI models.

- Adaptability: As AI models evolve and new requirements emerge, PromptOps ensures that prompts can be quickly updated and adapted, keeping the AI system responsive and up-to-date.

- Monitoring and Feedback: It allows for continuous monitoring and feedback on prompt performance. This ongoing evaluation helps in making data-driven improvements to the prompts and, consequently, the AI outputs.

- Compliance and Security: Managing prompts with the same rigor as software code helps ensure they adhere to ethical and security standards, which is crucial when dealing with sensitive or impactful AI applications.

What are the use cases of PromptOps?

1. Customer Support Automation:

- Use Case: Deploying AI chatbots or virtual assistants that utilize prompt-based systems to handle customer inquiries, provide support, and resolve issues.

- PromptOps Application: Crafting and managing prompts that guide the AI to deliver accurate and contextually relevant responses, monitoring performance to ensure customer satisfaction, and refining prompts based on user feedback.

2. Content Generation:

- Use Case: Using generative AI to create various types of content, such as marketing copy, articles, or product descriptions.

- PromptOps Application: Designing prompts that generate high-quality and coherent content, integrating prompt management into CI/CD pipelines for content updates, and monitoring output quality to maintain consistency and relevance.

3. Personalized Recommendations:

- Use Case: Implementing AI systems that provide personalized recommendations for products, services, or content based on user preferences and behavior.

- PromptOps Application: Developing and optimizing prompts that tailor recommendations to individual users, automating updates and scaling to handle large volumes of user interactions, and using feedback to enhance recommendation accuracy.

4. Dynamic Report Generation:

- Use Case: Automating the generation of reports and summaries based on user inputs or data analysis, such as business intelligence reports or research summaries.

- PromptOps Application: Creating prompts that extract and present relevant information effectively, managing different versions of report templates, and ensuring that the system scales to meet varying reporting demands.

5. Interactive Learning and Training:

- Use Case: Building AI-driven educational tools and training modules that interact with users through prompts to facilitate learning and skill development.

- PromptOps Application: Designing prompts that guide learners through interactive exercises, incorporating feedback to adjust prompts and improve the learning experience, and automating updates to educational content based on user progress and feedback.

6. Code Generation and Assistance:

- Use Case: Using AI models to assist in code generation, debugging, and providing coding suggestions to developers.

- PromptOps Application: Crafting prompts that effectively guide the AI in generating useful code snippets or troubleshooting advice, integrating these prompts into development workflows, and continuously refining them based on developer feedback and performance metrics.

7. Content Moderation:

- Use Case: Employing AI to automatically review and moderate user-generated content, such as comments or social media posts, to ensure adherence to community guidelines.

- PromptOps Application: Designing prompts that effectively identify inappropriate or harmful content, automating the moderation process, and adapting prompts based on evolving content guidelines and user feedback.

8. Market Analysis and Trend Identification:

- Use Case: Leveraging AI to analyze market data, identify trends, and generate insights for strategic decision-making.

- PromptOps Application: Developing prompts that extract and interpret relevant data insights, automating the analysis and reporting process, and refining prompts based on the evolving nature of market trends and data.

How does PromptOps work?

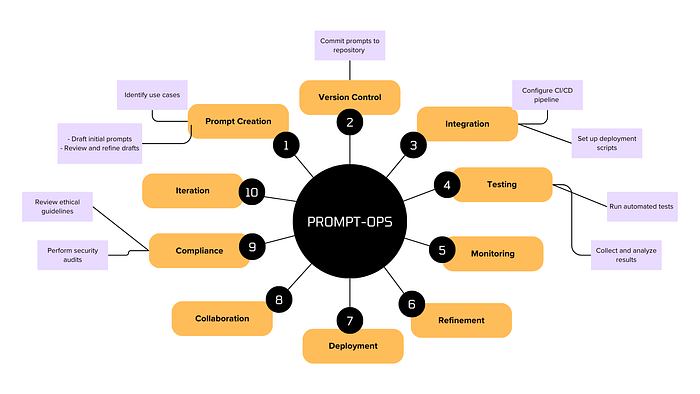

PromptOps involves systematically managing AI prompts to ensure high-quality outputs. It begins with creating and refining prompts, integrates them into the DevOps pipeline for automated testing and deployment, and monitors their performance. By using version control, automation, and continuous feedback, PromptOps facilitates efficient prompt management, collaboration among teams, and adherence to compliance standards, leading to reliable and effective AI results.

1. Prompt Creation: Develop initial prompts based on requirements and objectives. This includes identifying use cases, drafting initial prompts, and reviewing and refining drafts.

2. Version Control: Manage and track changes to prompts using version control systems (like Git). This involves committing prompts to a repository, tracking changes, and reviewing pull requests.

3. Integration: Integrate prompts into the DevOps pipeline for automated testing and deployment. Configure the CI/CD pipeline, integrate prompt tests, and set up deployment scripts.

4. Testing: Automatically test prompts to evaluate their effectiveness and impact on AI outputs. Define test cases, run automated tests, and collect and analyze results.

5. Monitoring: Monitor performance metrics and feedback to assess prompt quality and accuracy. Set up monitoring tools, collect performance data, and analyze user feedback.

6. Refinement: Refine and optimize prompts based on test results and monitoring data. Review test results, make adjustments to prompts, and test the refined prompts.

7. Deployment: Deploy updated prompts into production environments. Deploy prompts via the pipeline, verify deployment, and monitor post-deployment performance.

8. Collaboration: Facilitate collaboration among AI developers, researchers, and operators to enhance prompt quality. Conduct team meetings, share feedback, and update documentation.

9. Compliance: Ensure prompts meet ethical and security standards through compliance checks. Review ethical guidelines, perform security audits, and address compliance issues.

10. Iteration: Continuously iterate on prompts based on new requirements and feedback to keep the AI system up to date. Gather new requirements, implement changes, and repeat testing and refinement.
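The testing and deployment steps above can be sketched as a small automated check that a CI job might run before a prompt is promoted. This is only an illustration under stated assumptions: `fake_model` is a deterministic stand-in for a real model call, and `PromptTestCase`, `run_prompt_tests`, and `PASS_THRESHOLD` are hypothetical names, not an established API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PromptTestCase:
    question: str
    must_contain: str  # substring the output is expected to include


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call (assumption: deterministic stub)."""
    if "refund" in prompt.lower():
        return "You can request a refund within 30 days."
    return "Please contact support for help."


def run_prompt_tests(
    template: str,
    cases: list[PromptTestCase],
    model: Callable[[str], str],
) -> float:
    """Return the fraction of test cases whose output passes the check."""
    passed = 0
    for case in cases:
        output = model(template.format(question=case.question))
        if case.must_contain.lower() in output.lower():
            passed += 1
    return passed / len(cases)


TEMPLATE = "Answer the customer politely: {question}"
CASES = [
    PromptTestCase("How do I get a refund?", "refund"),
    PromptTestCase("Where is my order?", "support"),
]
PASS_THRESHOLD = 0.9  # hypothetical gate for promotion to deployment

pass_rate = run_prompt_tests(TEMPLATE, CASES, fake_model)
ready_to_deploy = pass_rate >= PASS_THRESHOLD
```

In a real pipeline the stub would be replaced by a call to the deployed model, and substring checks would likely be complemented by richer evaluations. The point of the sketch is only that a prompt change can be blocked automatically when its pass rate drops below an agreed threshold.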

What are the key components of PromptOps?

1. Prompt Engineering:

- Design and Refinement: Crafting and refining prompts to ensure they effectively elicit desired responses from the AI model. This involves understanding how different prompts influence model behavior and optimizing them for specific use cases.

- Template Management: Creating and maintaining a library of reusable prompt templates to streamline prompt creation and ensure consistency across applications.

2. Version Control:

- Prompt Versioning: Managing different versions of prompts to track changes and updates over time. This helps in understanding the impact of modifications and reverting to previous versions if necessary.

- Change Tracking: Using tools like Git to document changes in prompts, allowing for detailed history and collaboration among team members.

3. Monitoring and Evaluation:

- Real-Time Monitoring: Implementing systems to continuously track the performance of prompt-based models, including metrics such as response accuracy, latency, and user satisfaction.

- Quality Assurance: Regularly evaluating model outputs against predefined quality standards to ensure they meet the required criteria.

4. Automation:

- CI/CD Integration: Incorporating prompt management into continuous integration and continuous deployment (CI/CD) pipelines to automate updates and deployments. This ensures that changes are tested and deployed systematically.

- Autoscaling: Leveraging cloud infrastructure to automatically scale resources based on demand, ensuring that the AI system can handle varying loads efficiently.

5. Intent Matching:

- User Input Analysis: Developing techniques to accurately interpret and match user inputs with the appropriate prompts. This helps in aligning the AI's responses with user expectations and needs.

- Feedback Loops: Using user feedback to refine and improve prompts, enhancing the system's ability to deliver relevant and accurate outputs.

6. Security and Compliance:

- Data Privacy: Ensuring that prompts and model interactions comply with data privacy regulations and standards. This involves managing sensitive information securely and implementing access controls.

- Audit Trails: Maintaining detailed records of prompt usage and modifications for auditing purposes and ensuring transparency.

7. Collaboration and Communication:

- Team Coordination: Facilitating collaboration among cross-functional teams, including data scientists, engineers, and product managers, to align on prompt design and deployment strategies.

- Documentation: Creating comprehensive documentation for prompts, processes, and best practices to ensure clarity and consistency across the team.

8. Performance Tuning:

- Optimization: Continuously tuning prompts and model parameters to enhance performance and efficiency. This may involve adjusting prompt structures or incorporating new techniques to improve output quality.

- Benchmarking: Conducting performance benchmarks to compare different prompts and approaches, identifying the most effective strategies for various use cases.
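As a rough illustration of the benchmarking component, the sketch below compares two prompt variants on a tiny dataset and picks the one with the higher mean score. Everything here is assumed for the example: `stub_model` imitates a model with simple string logic, `keyword_score` is a toy metric, and the variant names and dataset are invented.

```python
from statistics import mean
from typing import Callable


def keyword_score(output: str, expected_keywords: list[str]) -> float:
    """Fraction of expected keywords present in the output (toy metric)."""
    text = output.lower()
    hits = sum(1 for kw in expected_keywords if kw.lower() in text)
    return hits / len(expected_keywords)


def stub_model(prompt: str) -> str:
    # Hypothetical stand-in: a "detailed" instruction returns the full input,
    # anything else returns only its first four words.
    body = prompt.split(":", 1)[1].strip()
    if "detailed" in prompt.lower():
        return body
    return " ".join(body.split()[:4])


def benchmark(
    variants: dict[str, str],
    dataset: list[tuple[str, list[str]]],
    model: Callable[[str], str],
) -> dict[str, float]:
    """Return the mean score of each prompt variant over the dataset."""
    results = {}
    for name, template in variants.items():
        scores = [
            keyword_score(model(template.format(text=text)), keywords)
            for text, keywords in dataset
        ]
        results[name] = mean(scores)
    return results


VARIANTS = {
    "terse": "Summarize: {text}",
    "detailed": "Write a detailed summary: {text}",
}
DATASET = [
    ("Revenue grew 12 percent while costs fell", ["revenue", "costs"]),
    ("The outage lasted two hours and affected logins", ["outage", "logins"]),
]

results = benchmark(VARIANTS, DATASET, stub_model)
best_variant = max(results, key=results.get)
```

With this stub, the "detailed" variant scores higher because the stub returns more of the input for it. With a real model the same loop would apply, but the metric and dataset would need to reflect the actual use case.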

Challenges and Considerations of Using PromptOps

1. Complexity in Prompt Management:

- Challenge: Managing and refining prompts can be complex due to the nuanced nature of language and the varied contexts in which prompts are used. Ensuring that prompts consistently produce the desired outputs across different scenarios requires careful design and iteration.

- Consideration: Developing a robust system for prompt versioning and tracking changes is essential to manage this complexity and maintain consistency.

2. Performance Variability:

- Challenge: Generative AI models can produce variable outputs even with identical or similar prompts, making it challenging to ensure consistent performance and quality. Factors such as context, phrasing, sampling settings (for example, temperature), and model version can affect results.

- Consideration: Implementing comprehensive monitoring and quality assurance processes helps to identify and address performance issues, ensuring that outputs meet desired standards.

3. Scalability Concerns:

- Challenge: Scaling prompt-based AI systems to handle large volumes of requests or varying workloads can be difficult. Ensuring that the system remains efficient and responsive under different conditions is a key concern.

- Consideration: Leveraging automation and cloud-based autoscaling solutions can help manage resource allocation and maintain performance as demand fluctuates.

4. Ethical and Bias Issues:

- Challenge: Prompt-based AI models may inadvertently generate biased or unethical outputs, depending on the prompts and training data. Ensuring that prompts do not lead to harmful or biased responses is crucial.

- Consideration: Regularly reviewing and refining prompts, along with incorporating diverse perspectives and ethical guidelines, can help mitigate bias and promote responsible AI usage.

5. Integration and Collaboration:

- Challenge: Integrating PromptOps with existing DevOps practices and ensuring effective collaboration among team members can be challenging. Aligning prompt engineering with deployment processes requires coordination across various roles.

- Consideration: Establishing clear communication channels, maintaining thorough documentation, and fostering collaboration between data scientists, engineers, and other stakeholders are essential for successful integration and operation of PromptOps.
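The performance variability challenge described above can be quantified before it is addressed. The following sketch samples the same prompt several times and reports how often the most common answer appears. It rests on assumptions: `noisy_model` is a stand-in that picks canned answers at random, and `consistency_rate` is a hypothetical metric name rather than a standard one.

```python
import random
from collections import Counter
from typing import Callable


def noisy_model(prompt: str, rng: random.Random) -> str:
    """Stand-in for a sampled model: picks among canned answers at random."""
    return rng.choice(["Paris", "Paris", "Paris", "The capital is Paris"])


def sample_outputs(
    model: Callable[[str, random.Random], str],
    prompt: str,
    n: int,
    seed: int,
) -> list[str]:
    """Call the model n times with a seeded generator for reproducibility."""
    rng = random.Random(seed)
    return [model(prompt, rng) for _ in range(n)]


def consistency_rate(outputs: list[str]) -> float:
    """Share of samples that match the most common output."""
    most_common_count = Counter(outputs).most_common(1)[0][1]
    return most_common_count / len(outputs)


PROMPT = "What is the capital of France? Answer in one word."
outputs = sample_outputs(noisy_model, PROMPT, n=20, seed=7)
rate = consistency_rate(outputs)
```

Tracking a number like this per prompt version is one possible way to notice when a prompt edit, or a change on the model side, makes outputs less stable. Exact string matching is a deliberately strict comparison; a production check would probably normalize or semantically compare outputs.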

Technologies and tools commonly used in PromptOps

1. Prompt Engineering Platforms:

- OpenAI Platform: Provides tools for designing, testing, and deploying prompts with models like GPT-4.

- Hugging Face Transformers: A library that facilitates the use of various transformer models and supports prompt engineering.

2. Version Control Systems:

- Git: Essential for managing changes in prompt designs, tracking revisions, and collaborating with team members.

- GitHub/GitLab/Bitbucket: Platforms that provide version control services and collaborative features for managing prompt-related code and documentation.

3. CI/CD Pipelines:

- Jenkins: Used for automating the integration and deployment of prompt-based models, ensuring that updates are systematically tested and deployed.

- GitHub Actions: Provides automation capabilities for CI/CD workflows directly integrated with GitHub repositories.

- GitLab CI/CD: A comprehensive CI/CD tool integrated with GitLab for automating the development lifecycle.

4. Monitoring and Logging Tools:

- Prometheus: Collects and stores time-series metrics from instrumented services, which can include prompt-related metrics such as latency and measured response accuracy.

- Grafana: Visualizes metrics collected by Prometheus, helping track and analyze the performance of prompt-based systems.

- ELK Stack (Elasticsearch, Logstash, Kibana): Provides logging and visualization capabilities to monitor and analyze logs related to AI prompt operations.

5. Automation and Orchestration:

- Kubernetes: Manages containerized applications, including those running AI models, and helps in scaling and orchestrating prompt-based deployments.

- Terraform: Used for infrastructure as code, enabling automated provisioning and management of the underlying infrastructure for AI systems.

6. Testing and Validation Tools:

- Postman: Useful for testing API endpoints that interact with AI models and ensuring that prompt responses are consistent and accurate.

- pytest: A testing framework for Python that can be used to validate prompt-based functionalities and model outputs.

7. Data Privacy and Security Tools:

- AWS Identity and Access Management (IAM): Manages access control and permissions for resources used in prompt-based AI systems.

- HashiCorp Vault: Provides secure storage and management of sensitive information, including API keys and prompt data.

8. Model Management Platforms:

- MLflow: Tracks and manages machine learning models, including their versions and experiments related to prompt engineering.

- Weights & Biases: Facilitates tracking, visualization, and collaboration on machine learning experiments, including those involving prompts.

9. Feedback and Analytics Tools:

- Google Analytics: Tracks user interactions and provides insights into how users engage with prompt-based systems.

- Mixpanel: Offers advanced analytics for understanding user behavior and feedback related to AI-driven interactions.

10. Collaboration and Documentation:

- Confluence: A collaboration tool for documenting prompt designs, best practices, and operational procedures.

- Slack/Microsoft Teams: Communication platforms that support collaboration among team members working on PromptOps, facilitating real-time discussions and updates.
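To give a sense of how the monitoring tools listed above might receive prompt-level data, here is a small sketch that renders two metrics in the Prometheus text exposition format using only the standard library. The metric names (`prompt_latency_seconds_avg`, `prompt_failures_total`) and the `version` label are assumptions made for this example; in practice an official Prometheus client library would normally handle the formatting and the HTTP endpoint.

```python
from statistics import mean


def render_metrics(
    prompt_version: str,
    latencies_s: list[float],
    failures: int,
) -> str:
    """Render prompt metrics as Prometheus text exposition lines."""
    avg_latency = mean(latencies_s)
    lines = [
        "# HELP prompt_latency_seconds_avg Mean model latency per prompt version.",
        "# TYPE prompt_latency_seconds_avg gauge",
        f'prompt_latency_seconds_avg{{version="{prompt_version}"}} {avg_latency:.3f}',
        "# HELP prompt_failures_total Failed quality checks per prompt version.",
        "# TYPE prompt_failures_total counter",
        f'prompt_failures_total{{version="{prompt_version}"}} {failures}',
    ]
    # The exposition format expects a trailing newline after the last line.
    return "\n".join(lines) + "\n"


# Hypothetical measurements for one prompt version.
metrics_text = render_metrics("1.0.0", [0.42, 0.58, 0.50], failures=2)
```

Labeling metrics with the prompt version is a design choice worth considering: it would let a Grafana dashboard compare latency or failure counts before and after a prompt change.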

Conclusion

PromptOps represents a critical advancement in integrating generative AI with DevOps practices, focusing on the systematic management of AI prompts to optimize performance and reliability. By incorporating prompt handling into the DevOps pipeline, PromptOps ensures that prompts — essential for guiding AI outputs — are effectively managed, tested, and refined.

PromptOps also leverages advanced technologies and tools, including prompt engineering platforms, CI/CD pipelines, and monitoring solutions, to create a structured methodology for managing prompt-based AI systems. This approach not only optimizes AI performance but also aligns with best practices in software development, paving the way for more reliable and efficient AI operations.