There's a special kind of heartbreak every systems developer knows: you open your profiler expecting to see your carefully tuned function blazing fast… and instead it's taking more time than it did before you optimized it.

You didn't change the algorithm. You just added #[inline(always)].

Welcome to the dark side of Rust's inlining optimizer — where performance can sometimes regress the moment you try to help it.

I learned this lesson the hard way — deep in a performance-critical networking crate — where Rust's optimizer made a "smart" decision that nearly halved throughput.

Let's unpack how that happens, and what's really going on inside the compiler.

What Inlining Actually Does

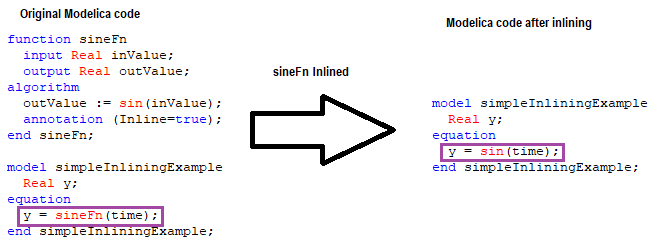

Inlining sounds simple:

"Just replace a function call with the function's body."

So, this code:

```rust
fn add(a: i32, b: i32) -> i32 {
    a + b
}

fn main() {
    let sum = add(2, 3);
}
```

becomes (conceptually):

```rust
fn main() {
    let sum = 2 + 3;
}
```

No call overhead, no stack frames. In theory, faster execution.

But under the hood, it's not that simple.

Inlining affects everything — register allocation, code size, branch prediction, and cache behavior. The optimizer (LLVM, in Rust's case) makes decisions based on heuristics, not guarantees.

And sometimes, those heuristics lie to you.

Rust's Inlining Attributes: A Double-Edged Sword

Rust gives us a few knobs for controlling inlining:

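- #[inline] → Suggests inlining, and makes the function's body available for inlining across crate boundaries.
- #[inline(always)] → Requests inlining at every call site. LLVM honors it whenever inlining is possible, though the Rust Reference still words it as a suggestion.
- #[inline(never)] → Asks the compiler not to inline the function.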

Seems straightforward. But here's the catch: inlining isn't always free, and its payoff isn't always predicted correctly.
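Here's a minimal sketch of where each attribute goes. The functions `square`, `double`, and `slow_path` are hypothetical placeholders; the attributes only change how the code is compiled, not what it computes.

```rust
// Hypothetical helpers, used only to show where each attribute goes.

/// A hint; also makes the body available to other crates for inlining.
#[inline]
pub fn square(x: u32) -> u32 {
    x * x
}

/// A much stronger request, but still worded as a suggestion by the Reference.
#[inline(always)]
pub fn double(x: u32) -> u32 {
    x * 2
}

/// Asks the compiler to keep this function out of line.
#[inline(never)]
pub fn slow_path(x: u32) -> u32 {
    // Imagine rarely executed error handling here.
    x.wrapping_add(1)
}

fn main() {
    let v = slow_path(double(square(3)));
    println!("{v}"); // prints 19
}
```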

Here's a real-world snippet that went wrong:

```rust
// MurmurHash3's 64-bit finalizer ("fmix64")
#[inline(always)]
fn hash_mix(mut x: u64) -> u64 {
    x ^= x >> 33;
    x = x.wrapping_mul(0xff51afd7ed558ccd);
    x ^= x >> 33;
    x = x.wrapping_mul(0xc4ceb9fe1a85ec53);
    x ^= x >> 33;
    x
}

pub fn hash_key(key: &[u8]) -> u64 {
    let mut h: u64 = 0xcbf29ce484222325; // FNV-1a 64-bit offset basis as the seed
    for &b in key {
        h ^= b as u64;
        h = hash_mix(h); // forced inline
    }
    h
}
```

Profiling this across thousands of keys revealed something disturbing: after adding #[inline(always)], performance dropped 15%.

The Real Reason: Code Bloat and I-Cache Misses

When you force-inline a function like hash_mix, its body is copied into every place it's called.

If those call sites sit in a hot loop that LLVM then unrolls, each unrolled iteration carries its own copy, so a single call in the source can become several copies of the same code in the binary.

This bloats your binary and hurts instruction-cache locality.

Let's visualize it:

Before inlining:

```text
┌──────────────┐
│  hash_key()  │───▶ Calls hash_mix() (shared code)
└──────────────┘
```

After #[inline(always)]:

```text
┌──────────────┐
│  hash_key()  │───▶ Copies hash_mix()'s body into the loop (again per unrolled iteration)
└──────────────┘
```

Each copy injects the mixer's shifts, XORs, and multiplies straight into the loop body. Run-time iterations don't create new copies, but every call site and every unrolled iteration does; spread that across enough hot paths and your L1 I-cache starts thrashing.

The compiler can't easily see I-cache pressure at compile time, and with #[inline(always)] it doesn't even weigh it: it inlines because you told it to, and the cost only shows up at runtime.
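To make the duplication rule concrete, here's a hedged sketch reusing the hash_mix from above. `hash_triple` and `hash_words` are hypothetical callers, not part of the original crate. Forced inlining pastes one copy of the body per call site, while a loop body holds a single copy no matter how many times it runs; loop unrolling is what multiplies it.

```rust
// Same mixer as above (MurmurHash3's fmix64 finalizer).
#[inline(always)]
fn hash_mix(mut x: u64) -> u64 {
    x ^= x >> 33;
    x = x.wrapping_mul(0xff51afd7ed558ccd);
    x ^= x >> 33;
    x = x.wrapping_mul(0xc4ceb9fe1a85ec53);
    x ^= x >> 33;
    x
}

// Hypothetical caller with three call sites: forced inlining places three
// copies of the mixer's body in this function.
pub fn hash_triple(a: u64, b: u64, c: u64) -> u64 {
    let ha = hash_mix(a); // copy #1
    let hb = hash_mix(ha ^ b); // copy #2
    hash_mix(hb ^ c) // copy #3
}

// Hypothetical caller with one call site inside a loop: one copy in the loop
// body, reused on every iteration unless the loop gets unrolled.
pub fn hash_words(words: &[u64]) -> u64 {
    let mut h: u64 = 0;
    for &w in words {
        h = hash_mix(h ^ w);
    }
    h
}

fn main() {
    println!("{:#x}", hash_triple(1, 2, 3));
    println!("{:#x}", hash_words(&[1, 2, 3]));
}
```

Both functions chain the same three mixes, so `hash_triple(1, 2, 3)` and `hash_words(&[1, 2, 3])` return the same value; only their code layout differs.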

Architecture Deep Dive: How LLVM Handles Inlining

Rust's backend, LLVM, uses a cost model to decide inlining automatically:

- Function size (in IR instructions)
- Call frequency (based on profiling hints)
- Optimization level (-O2, -O3, -Os, etc.)
- Whether the function is cold, hot, or has loops

When you use #[inline(always)], you override that heuristic.

You're telling LLVM:

"Trust me, I know better."

But here's the problem: the attribute makes LLVM skip its cost model, so the body gets inlined no matter how large it is. Later passes such as loop unrolling can then multiply that inlined body again, turning tight loops into monsters.
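The cost-model list above can be turned into a toy decision function. This is a deliberately simplified sketch, not LLVM's actual inline-cost analysis: the types, field names, and every threshold number are made-up placeholders. The one thing it's meant to capture is the override: `Always` and `Never` return before any cost is weighed, which is the sense in which #[inline(always)] bypasses the heuristic.

```rust
// Toy model of threshold-based inlining. NOT LLVM's real cost model:
// the types, fields, and numbers are illustrative placeholders.

#[derive(Clone, Copy)]
enum InlineHint {
    NoHint, // no attribute: let the heuristic decide
    Always, // #[inline(always)]
    Never,  // #[inline(never)]
}

#[derive(Clone, Copy)]
enum OptLevel {
    O2,
    O3,
    Os,
}

#[derive(Clone, Copy)]
struct CallSite {
    callee_size: u32, // estimated size of the callee body
    is_hot: bool,     // e.g. from profile data
    is_cold: bool,
    hint: InlineHint,
}

fn should_inline(cs: &CallSite, opt: OptLevel) -> bool {
    // The attribute is checked first, so it bypasses the cost model entirely.
    match cs.hint {
        InlineHint::Always => return true,
        InlineHint::Never => return false,
        InlineHint::NoHint => {}
    }
    // Placeholder budgets; size-oriented builds are stingier.
    let mut threshold: u32 = match opt {
        OptLevel::O2 => 200,
        OptLevel::O3 => 250,
        OptLevel::Os => 50,
    };
    if cs.is_hot {
        threshold *= 2; // hot call sites get a bigger budget
    }
    if cs.is_cold {
        threshold /= 4; // cold ones get a smaller one
    }
    cs.callee_size <= threshold
}

fn main() {
    let big = CallSite { callee_size: 400, is_hot: false, is_cold: false, hint: InlineHint::NoHint };
    let forced = CallSite { hint: InlineHint::Always, ..big };
    // The heuristic rejects the big callee; the attribute inlines it anyway.
    println!("{} {}", should_inline(&big, OptLevel::O3), should_inline(&forced, OptLevel::O3));
}
```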

Example: When Inlining Blocks Vectorization

One of the most subtle inlining failures I've seen was in numeric code.

```rust
#[inline(always)]
fn add_offset(v: &mut [f32], offset: f32) {
    for x in v.iter_mut() {
        *x += offset;
    }
}
```

Looks fine, right?

But when this function was force-inlined into a higher-level loop, LLVM did not auto-vectorize it.

Why? Because the inlined loop merged with the caller's loop and destroyed the simple structure LLVM's vectorizer depends on.

Without inlining, LLVM saw a clean, self-contained loop that was easy to convert into SIMD. With inlining, it became tangled with the caller's logic, and vectorization got skipped.

Performance dropped by 25%.
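The author's outer loop isn't shown, so here's a hypothetical reconstruction for experimenting: `shift_rows_a` calls a force-inlined copy of add_offset, and `shift_rows_b` calls a copy pinned out of line with #[inline(never)]. Both compute the same result. Whether variant A actually loses vectorization depends on your compiler version and target, so confirm it in the generated assembly or with a benchmark rather than assuming it.

```rust
// Variant A: force-inlined, as in the original snippet.
#[inline(always)]
fn add_offset_inlined(v: &mut [f32], offset: f32) {
    for x in v.iter_mut() {
        *x += offset;
    }
}

// Variant B: kept out of line, so this simple loop is optimized (and may be
// vectorized) on its own, independent of any caller.
#[inline(never)]
fn add_offset_outlined(v: &mut [f32], offset: f32) {
    for x in v.iter_mut() {
        *x += offset;
    }
}

// Hypothetical higher-level loops: apply one offset per row.
fn shift_rows_a(rows: &mut [Vec<f32>], offsets: &[f32]) {
    for (row, &off) in rows.iter_mut().zip(offsets) {
        add_offset_inlined(row, off);
    }
}

fn shift_rows_b(rows: &mut [Vec<f32>], offsets: &[f32]) {
    for (row, &off) in rows.iter_mut().zip(offsets) {
        add_offset_outlined(row, off);
    }
}

fn main() {
    let mut a = vec![vec![1.0_f32, 2.0], vec![3.0]];
    let mut b = a.clone();
    shift_rows_a(&mut a, &[0.5, 1.0]);
    shift_rows_b(&mut b, &[0.5, 1.0]);
    assert_eq!(a, b); // same math, different code layout
    println!("{a:?}"); // prints [[1.5, 2.5], [4.0]]
}
```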

Code Flow Diagram: Where It Breaks

```text
┌────────────────────────────┐
│ Rust Source Code           │
│ fn add_offset()            │
└───────────────┬────────────┘
                │ #[inline(always)]
                ▼
┌────────────────────────────┐
│ LLVM IR Inlined Function   │
│ (duplicated in caller)     │
└───────────────┬────────────┘
                │
                ▼
┌────────────────────────────┐
│ Optimization Passes        │
│ - Loop unrolling fails     │
│ - Vectorization skipped    │
└───────────────┬────────────┘
                ▼
┌────────────────────────────┐
│ Machine Code               │
│ Slower, larger binary      │
└────────────────────────────┘
```

Inlining changed the structure enough that LLVM lost its chance to optimize. The result? Slower code, bigger binaries.

The Architecture-Level Truth

Here's what happens down at the CPU level when inlining goes wrong:

| Effect                | Root Cause          | Result                   |
| --------------------- | ------------------- | ------------------------ |
| I-Cache misses        | Code size explosion | Slower instruction fetch |
| Branch mispredictions | Lost structure      | Pipeline stalls          |
| Register pressure     | Inlined variables   | Spills to stack          |
| Missed SIMD           | Lost simple loops   | Scalar fallback          |
| Inconsistent layout   | Per-call code bloat | Bad cache locality       |

Inlining isn't just a compiler trick; it's CPU microarchitecture roulette.

Real Example: Fixing It

The fix often isn't to remove inlining — it's to trust LLVM's default judgment.

Here's the corrected version of our earlier hash example:

```rust
#[inline] // hint, not force
fn hash_mix(x: u64) -> u64 {
    let mut y = x;
    y ^= y >> 33;
    y = y.wrapping_mul(0xff51afd7ed558ccd);
    y ^= y >> 33;
    y = y.wrapping_mul(0xc4ceb9fe1a85ec53);
    y ^= y >> 33;
    y
}
```

Benchmark results:

| Version        | Runtime (lower is better) |
| -------------- | ------------------------- |
| Forced inline  | 1.15x baseline            |
| Default inline | 1.00x baseline            |
| No inline      | 1.02x baseline            |

Moral: let LLVM make the call. It's seen more IR than you have.
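If you want to reproduce a comparison like the one above, here's a rough, std-only harness. It's an assumption-laden sketch, not the benchmark behind those numbers: the `define_variant!` macro, the synthetic keys, and all function names are made up for illustration. Build it with `--release`; a single `Instant` measurement is noisy, so for real decisions prefer `cargo bench` with criterion.

```rust
use std::hint::black_box;
use std::time::Instant;

// Generates a mixer with the given attribute plus a hash_key-style function
// that calls it, so the three variants differ only in the inlining attribute.
macro_rules! define_variant {
    ($(#[$attr:meta])* $mix:ident, $hash:ident) => {
        $(#[$attr])*
        fn $mix(mut x: u64) -> u64 {
            x ^= x >> 33;
            x = x.wrapping_mul(0xff51afd7ed558ccd);
            x ^= x >> 33;
            x = x.wrapping_mul(0xc4ceb9fe1a85ec53);
            x ^= x >> 33;
            x
        }

        fn $hash(key: &[u8]) -> u64 {
            let mut h: u64 = 0xcbf29ce484222325;
            for &b in key {
                h ^= b as u64;
                h = $mix(h);
            }
            h
        }
    };
}

define_variant!(#[inline(always)] mix_always, hash_key_always);
define_variant!(#[inline] mix_hint, hash_key_hint);
define_variant!(#[inline(never)] mix_never, hash_key_never);

// Hashes every key once and reports wall-clock time. black_box keeps the
// optimizer from discarding the work or precomputing it from known inputs.
fn time_variant(name: &str, hash: fn(&[u8]) -> u64, keys: &[Vec<u8>]) -> u64 {
    let start = Instant::now();
    let mut acc = 0u64;
    for key in keys {
        acc ^= hash(black_box(key.as_slice()));
    }
    println!("{:>6}: {:?}", name, start.elapsed());
    black_box(acc)
}

fn main() {
    // Deterministic synthetic keys (16 bytes each); swap in real keys.
    let keys: Vec<Vec<u8>> = (0u32..100_000)
        .map(|i| (0..16u32).map(|j| (i.wrapping_mul(31) ^ j) as u8).collect())
        .collect();

    let a = time_variant("always", hash_key_always, &keys);
    let b = time_variant("hint", hash_key_hint, &keys);
    let c = time_variant("never", hash_key_never, &keys);
    assert!(a == b && b == c, "variants must compute the same hash");
}
```

Run each variant several times, and in different orders, before trusting any gap between them.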

The Emotional Bit

Every Rust dev eventually learns that the compiler isn't a black box; it's a collaborator. But sometimes it's an overeager one.

Inlining feels like a performance "cheat code." It's seductive — the idea that you can simply annotate a line and gain microseconds.

But real performance comes from understanding your hardware, not gaming the optimizer.

Sometimes, the bravest thing you can do as a systems dev is to remove an optimization and trust the compiler again.

Key Takeaways

✅ Inlining is a tool, not a guarantee. It's situational — sometimes faster, sometimes worse.

✅ LLVM knows more than we think. Its heuristics usually outperform forced inlining.

✅ Measure, don't guess. Use cargo bench, perf, or criterion before and after every change.

✅ Focus on cache behavior, not syntax. Inlining changes code layout, which often matters more than call overhead.

Final Thought

Rust gives us performance control at a level few languages dare, but with that control comes the responsibility not to try to outsmart the optimizer.

Inlining can be your best friend or your worst enemy. And the only way to know which — is to profile, not assume.

Because in the end, compilers aren't magic. They're just as human as the people who write them — and they, too, sometimes get overconfident.