Have you ever wondered why responses from large language models (LLMs) can be so varied, even when asked the same question multiple times? Today, I am going to throw some light on this phenomenon and also explain the impact of temperature and top_p inference parameters on adjusting this non-determinism. This is based on my own research and experiments. I invite your feedback and corrections as needed!

LLMs are designed to emulate human-like conversation, complete with the inherent unpredictability. Just like us, these models aren't deterministic. But how exactly does this work?

The Transformer Architecture at Work

LLMs, built on the transformer architecture, function as sequence-to-sequence models. Given an input sequence, they predict the next token in an autoregressive manner. Essentially, for each step, the model generates a set of probable next tokens. Let's illustrate this with an example.

Question: What are the symptoms of COVID?

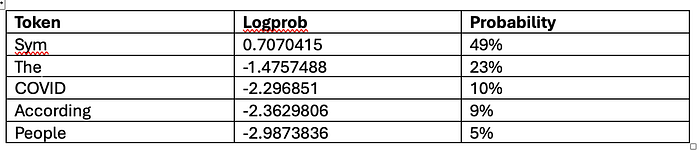

Imagine the model generates the following probable tokens(example provided for the first token only) with their respective probabilities:

I have converted the log probabilities to probabilities using probability = math.exp(logprob)

If the model always chose the highest probability token, it would be using a greedy sampling method. However, to introduce variability and mimic human-like responses, LLMs employ a different sampling strategy. Here, "Sym" might be picked 49% of the time, "The" 23% of the time, and so on, leading to non-deterministic outputs.

The role of top_p

Top_p, or nucleus sampling, refines this process by narrowing the pool of tokens considered for sampling. For instance, with top_p set to 0.5, only "Sym" and "The" would be in the running for selection, excluding the less probable tokens like "COVID," "According," and "People."

The Influence of Temperature

Temperature adjusts the model's sampling strategy further. A lower temperature increases the difference between token probabilities, amplifying the likelihood of selecting the highest probability token. This can make the output more predictable and focused, whereas a higher temperature adds more randomness, making responses more creative and diverse.

Bringing It All Together

Understanding and tweaking these parameters-temperature and top_p-can help you reduce the non-determinism, but will not completely eliminate it.

I hope this deep dive helps demystify the impact of top_p and temperature on the LLM outputs.

You can use the below code to try it out.

import os

from dotenv import load_dotenv

from openai import OpenAI

# Set your OpenAI API key

load_dotenv()

OPENAI_API_KEY = os.environ.get('OPENAI_API_KEY')

client = OpenAI()

# Example query

query = "What are the symptoms of COVID-19?"

# Generate the initial response and extract logits

response = client.chat.completions.create(

model="gpt-4",

messages=[

{"role": "system", "content": "You are a helpful assistant."},

{"role": "user", "content": query}

],

max_tokens=10,

logprobs=True , # Include logits,

top_logprobs=5,

top_p=0

)

print(response)

choices = response.choices

for choice in choices:

tokens = choice.logprobs.content

for token in tokens:

print(token)