Kafka replication is that backup plan. It ensures your data doesn't disappear into the ether when something breaks — and something will break.

This post isn't going to wave hands or throw jargon around for the sake of sounding smart. We'll take a plainspoken approach to understanding Kafka replication, one that sticks, with just enough grit to prepare you for real-world failures and trade-offs.

Why Replication Exists in Kafka

Imagine you're handling bookings for a travel site using Kafka. You store each user booking as a message in a Kafka topic. That topic is partitioned, and each partition is stored on a broker.

What if the broker storing one of the partitions dies?

Well, you lose access to the data in that partition — unless you've replicated it.

Kafka replication ensures that every partition has redundant copies on different brokers. If one broker fails, Kafka can elect another replica to lead and continue processing after a brief leader failover, and messages already committed to the in-sync replicas survive the failure.

Data Distribution and Replication: The Basics

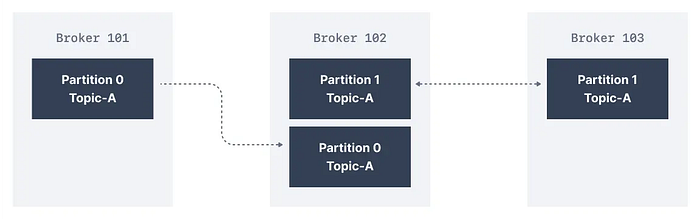

Kafka breaks down topics into partitions. Each partition is a log — a sequence of records ordered by an offset.

Now, to keep this fault-tolerant, Kafka replicates each partition across multiple brokers. The number of copies is called the replication factor.

For example, if a topic has a replication factor of 3, then each partition in that topic is stored on 3 different brokers.

Each partition has:

- One leader replica: handles all writes and, by default, all reads.

- One or more follower replicas: keep in sync with the leader, ready to take over if it crashes.

Partition P0 with replication factor = 3

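```
+----------+     +----------+     +----------+
| Broker 2 | <-- | Broker 1 | --> | Broker 3 |
| Follower |     |  Leader  |     | Follower |
+----------+     +----------+     +----------+
```

Records flow from the leader to each follower: both followers pull new records directly from the leader by sending it fetch requests. The leader, together with every follower that is fully caught up with it, forms the In-Sync Replica (ISR) list.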
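To make the placement idea concrete, here is a minimal sketch that spreads each partition's replicas across brokers round-robin and treats the first replica in each list as the leader (Kafka likewise treats the first replica in an assignment as the partition's preferred leader). It is an illustrative assumption, not Kafka's actual assignment code: the real algorithm also randomizes the starting broker and can be rack-aware, and ReplicaPlacement and assign are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: a simplified, rack-unaware round-robin placement.
final class ReplicaPlacement {

    /**
     * Returns, for each partition, the broker IDs holding a replica.
     * The first broker in each list acts as leader; the rest are followers.
     */
    static List<List<Integer>> assign(int partitions, int replicationFactor, List<Integer> brokers) {
        if (replicationFactor > brokers.size()) {
            // Every replica of a partition must live on a different broker.
            throw new IllegalArgumentException("replication factor exceeds broker count");
        }
        List<List<Integer>> assignment = new ArrayList<>();
        for (int p = 0; p < partitions; p++) {
            List<Integer> replicas = new ArrayList<>();
            for (int r = 0; r < replicationFactor; r++) {
                // Shift the starting broker per partition so leadership is spread out.
                replicas.add(brokers.get((p + r) % brokers.size()));
            }
            assignment.add(replicas);
        }
        return assignment;
    }

    public static void main(String[] args) {
        List<List<Integer>> plan = assign(3, 3, List.of(1, 2, 3));
        for (int p = 0; p < plan.size(); p++) {
            List<Integer> replicas = plan.get(p);
            System.out.println("P" + p + " leader=" + replicas.get(0)
                    + " followers=" + replicas.subList(1, replicas.size()));
        }
    }
}
```

With three partitions on three brokers, each broker leads exactly one partition, so leadership (and therefore write traffic) is spread evenly.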

How Does Kafka Handle Failures?

When a broker fails, Kafka doesn't panic: for every partition that broker was leading, the controller promotes an in-sync follower to be the new leader. Once the failed broker comes back online, it rejoins as a follower, catches up, and re-enters the ISR.

But not every follower can be promoted. Only followers in the ISR — those that are not lagging behind — are eligible.
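The eligibility rule can be sketched as a small function: when a leader fails, walk the partition's assigned replicas in order and pick the first one that is both alive and in the ISR. This is a simplified, hypothetical model (CleanLeaderElection and electLeader are illustrative names); in a real cluster the controller makes this decision, and the preference for earlier replicas in the list is an assumption of the sketch.

```java
import java.util.List;
import java.util.Optional;
import java.util.Set;

// Hypothetical model of clean leader election for one partition.
final class CleanLeaderElection {

    /**
     * Picks a new leader from the assigned replicas, considering only brokers
     * that are alive AND still in the ISR. Returns empty if none qualify.
     */
    static Optional<Integer> electLeader(List<Integer> assignedReplicas,
                                         Set<Integer> isr,
                                         Set<Integer> liveBrokers) {
        for (int broker : assignedReplicas) {
            if (isr.contains(broker) && liveBrokers.contains(broker)) {
                return Optional.of(broker);
            }
        }
        return Optional.empty(); // no eligible replica: the partition stays offline
    }

    public static void main(String[] args) {
        List<Integer> replicas = List.of(1, 2, 3);
        // Broker 1 (the old leader) is down, and broker 2 had fallen out of the ISR.
        Optional<Integer> leader = electLeader(replicas, Set.of(1, 3), Set.of(2, 3));
        System.out.println("new leader: " + leader.orElse(-1));
    }
}
```

Broker 2 is alive but has fallen out of the ISR, so it is skipped even though it appears earlier in the replica list.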

Lag Detection

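Older Kafka releases (before 0.9) used two thresholds to decide whether a replica was falling behind:

- Message lag:

```properties
replica.lag.max.messages=500
```

If a follower fell more than 500 messages behind the leader, it was removed from the ISR. Kafka 0.9 dropped this setting because a fixed message count misfires under bursty traffic: a sudden spike could push perfectly healthy followers out of the ISR.

- Time lag:

```properties
replica.lag.time.max.ms=10000
```

If a follower hasn't caught up to the leader's latest offset within 10 seconds (for example, because it stopped fetching), it's considered out of sync. This is the only lag threshold in current Kafka versions, and its default has been 30 seconds since Kafka 2.5.

The time threshold lets you fine-tune how tolerant your system is toward slow or flaky replicas.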
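Here is a minimal sketch of the time-based rule, assuming the leader remembers, for each follower, the last time that follower was fully caught up. A follower stays in the ISR only while that moment is within replica.lag.time.max.ms of the current time. Only the config name comes from Kafka; IsrLagCheck, inSyncFollowers, and the timestamps are hypothetical.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical model of the leader's ISR shrink check.
final class IsrLagCheck {

    /**
     * Returns the followers that remain in sync. A follower is dropped when
     * it has not been caught up to the leader for longer than maxLagMs.
     */
    static Set<Integer> inSyncFollowers(Map<Integer, Long> lastCaughtUpMs, long nowMs, long maxLagMs) {
        Set<Integer> inSync = new TreeSet<>();
        for (Map.Entry<Integer, Long> e : lastCaughtUpMs.entrySet()) {
            long lagMs = nowMs - e.getValue();
            if (lagMs <= maxLagMs) {
                inSync.add(e.getKey());
            }
        }
        return inSync;
    }

    public static void main(String[] args) {
        long replicaLagTimeMaxMs = 10_000; // mirrors replica.lag.time.max.ms=10000
        long now = 100_000;
        // Follower 2 was caught up 3 s ago; follower 3 was last caught up 15 s ago.
        Map<Integer, Long> lastCaughtUp = Map.of(2, 97_000L, 3, 85_000L);
        System.out.println("in sync: " + inSyncFollowers(lastCaughtUp, now, replicaLagTimeMaxMs));
    }
}
```

Measuring time since the follower was last caught up, rather than a message count, is what keeps a healthy follower in the ISR during a traffic burst: it only needs to catch up once within the window.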

Real-World Configuration and Performance

In production, replication adds both resilience and overhead.

Let's take a benchmark.

Scenario:

- Topic with 6 partitions

- Replication factor: 3

- 1KB messages

- 50,000 messages/sec

| Setting | Throughput (MB/s) | Latency (ms) | Notes |
| ---------------- | ----------------- | ------------ | ------------------- |
| acks=0 | 150 | <5 | Fastest, least safe |
| acks=1 | 100 | ~10 | Leader-only ack |
| acks=all (ISR=3) | 60 | ~25 | Safe, slowest |

Higher acks mean stronger durability but higher latency and lower throughput.

Producer Acknowledgments: acks Setting

The producer can choose how many acknowledgments it wants from Kafka using the acks configuration:

```java
import java.util.Properties;

Properties props = new Properties();
props.put("acks", "all"); // options: "0", "1", "all"
```

- acks=0: fire-and-forget (fast, unreliable)

- acks=1: wait for the leader's acknowledgment (safe-ish)

- acks=all: wait for all in-sync replicas (safest)

Use acks=all for critical data like financial transactions. Use acks=0 if you're sending GPS pings and can tolerate a few losses.
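One way to picture the three settings is as the number of replica confirmations the producer waits for before it treats a send as complete. This is a hypothetical model, not the Kafka client API: AcksModel and requiredConfirmations are illustrative names.

```java
// Hypothetical model of what each acks setting waits for (not the Kafka client API).
final class AcksModel {

    enum Acks { ZERO, ONE, ALL }

    /** Number of replicas that must confirm a write before the producer considers it sent. */
    static int requiredConfirmations(Acks acks, int isrSize) {
        switch (acks) {
            case ZERO: return 0;        // fire-and-forget: no broker confirmation at all
            case ONE:  return 1;        // only the leader's local write
            case ALL:  return isrSize;  // every replica currently in the ISR, leader included
            default:   throw new IllegalArgumentException("unknown acks: " + acks);
        }
    }

    public static void main(String[] args) {
        for (Acks a : Acks.values()) {
            System.out.println(a + " with ISR=3 -> " + requiredConfirmations(a, 3));
        }
        // If the ISR shrinks to the leader alone, acks=all degrades to a single confirmation.
        System.out.println("ALL with ISR=1 -> " + requiredConfirmations(Acks.ALL, 1));
    }
}
```

The last line shows the catch with acks=all: it waits for the current ISR, so if the ISR has shrunk to the leader alone, it gives no more protection than acks=1. Kafka's min.insync.replicas setting exists to make such writes fail instead of silently degrading.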

How Kafka Tracks In-Sync Replicas

Behind the scenes, the Kafka leader tracks all follower replicas and monitors whether they're keeping up.

If a replica stops fetching or stays behind the leader for longer than replica.lag.time.max.ms, it's booted out of the ISR list. Because every remaining ISR member holds all committed messages, whichever one becomes leader after a crash has a consistent copy of the data.

Kafka's guarantee: if a message is written to all in-sync replicas and the producer gets an acknowledgment, that message won't be lost as long as at least one in-sync replica stays alive, even if the leader dies immediately after.
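The "written to all in-sync replicas" condition is tracked through the high watermark: the leader advances it to the smallest log end offset among ISR members, and only messages below it count as committed (and become visible to consumers). Here is a minimal, hypothetical sketch of that calculation; HighWatermark.compute and the offsets are made up for illustration.

```java
import java.util.Map;
import java.util.Set;

// Hypothetical model of how a leader derives the high watermark.
final class HighWatermark {

    /**
     * The high watermark is the smallest log end offset among ISR members.
     * Offsets below it exist on every in-sync replica and count as committed.
     */
    static long compute(Map<Integer, Long> logEndOffsets, Set<Integer> isr) {
        if (isr.isEmpty()) {
            throw new IllegalArgumentException("ISR always contains at least the leader");
        }
        long hw = Long.MAX_VALUE;
        for (int broker : isr) {
            Long leo = logEndOffsets.get(broker);
            if (leo == null) {
                throw new IllegalArgumentException("no log end offset for ISR member " + broker);
            }
            hw = Math.min(hw, leo);
        }
        return hw;
    }

    public static void main(String[] args) {
        // Leader (broker 1) has written up to offset 120; followers are behind.
        Map<Integer, Long> leos = Map.of(1, 120L, 2, 118L, 3, 95L);
        System.out.println("HW with ISR {1,2,3}: " + compute(leos, Set.of(1, 2, 3)));
        // Once lagging broker 3 is dropped from the ISR, the watermark can advance.
        System.out.println("HW with ISR {1,2}: " + compute(leos, Set.of(1, 2)));
    }
}
```

Notice that the lagging broker 3 holds the watermark back while it remains in the ISR. That is one practical reason Kafka evicts slow followers instead of waiting on them indefinitely.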

What Happens When All Replicas Are Down?

Let's be honest — this is rare. But if it happens, Kafka offers two options:

- Wait for an in-sync replica to return

- Promote any replica that comes up

This is a classic trade-off:

- Option 1 favors consistency (you only promote replicas with the latest data).

- Option 2 favors availability (you promote whoever shows up first, risking stale data).

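Kafka's default behavior is to wait for an in-sync replica, which aligns with its durability guarantees. The choice is controlled by the unclean.leader.election.enable setting, which has defaulted to false (wait for an in-sync replica) since Kafka 0.11; setting it to true selects option 2.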
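The two options can be modeled as a single decision for the first replica that restarts after a full outage. In this hypothetical sketch, RecoveryLeaderChoice, leaderOnRecovery, and the uncleanElectionEnabled flag are illustrative names; the flag stands in for Kafka's unclean.leader.election.enable setting, and lastIsr is the ISR recorded before the partition went offline.

```java
import java.util.Optional;
import java.util.Set;

// Hypothetical model of the "all replicas down" decision for one partition.
final class RecoveryLeaderChoice {

    /** Decides whether the first replica to come back may become leader. */
    static Optional<Integer> leaderOnRecovery(int returningBroker, Set<Integer> lastIsr,
                                              boolean uncleanElectionEnabled) {
        if (lastIsr.contains(returningBroker)) {
            return Optional.of(returningBroker); // clean: it holds every committed message
        }
        if (uncleanElectionEnabled) {
            return Optional.of(returningBroker); // unclean: available again, but may be missing data
        }
        return Optional.empty(); // keep waiting for an in-sync replica; partition stays offline
    }

    public static void main(String[] args) {
        Set<Integer> lastIsr = Set.of(1); // only broker 1 was in sync when the outage hit
        // Broker 3, which had fallen behind, is the first to restart.
        System.out.println("default: " + leaderOnRecovery(3, lastIsr, false));
        System.out.println("unclean: " + leaderOnRecovery(3, lastIsr, true));
    }
}
```

With the default, the partition stays unavailable until broker 1 returns; with unclean election, it comes back immediately on broker 3, and any messages only broker 1 had are gone.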

Why Do Replicas Lag?

Not all lag is evil. But it helps to know what causes it:

- Slow replicas: The follower is healthy but can't match the leader's speed, often because of disk I/O, network, or CPU limits.

- Stuck replicas: The follower stops fetching data altogether, for example during long garbage-collection pauses, after a deadlock, or because it's just… dead.

Monitoring broker metrics such as UnderReplicatedPartitions, IsrShrinksPerSec, and replica fetcher lag (reported by the ReplicaFetcherManager MaxLag metric) can help you detect these problems early and act before they cause an outage.
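UnderReplicatedPartitions essentially counts the partitions a broker leads whose ISR is smaller than their assigned replica set. The hypothetical sketch below computes that condition from a snapshot of partition states, which can help when reasoning about what an alert on the metric means; in practice you would read the broker's metric rather than recompute it. UnderReplicatedCheck, PartitionState, and the bookings partitions are illustrative, and the sketch uses a Java 16+ record.

```java
import java.util.List;
import java.util.Set;

// Hypothetical snapshot-based check; real brokers expose this as a metric.
final class UnderReplicatedCheck {

    /** Minimal view of one partition: its assigned replicas and current ISR. */
    record PartitionState(String name, List<Integer> replicas, Set<Integer> isr) {
        boolean underReplicated() {
            return isr.size() < replicas.size();
        }
    }

    static long countUnderReplicated(List<PartitionState> partitions) {
        return partitions.stream().filter(PartitionState::underReplicated).count();
    }

    public static void main(String[] args) {
        List<PartitionState> snapshot = List.of(
                new PartitionState("bookings-0", List.of(1, 2, 3), Set.of(1, 2, 3)),
                new PartitionState("bookings-1", List.of(2, 3, 1), Set.of(2, 3)), // broker 1 lagging
                new PartitionState("bookings-2", List.of(3, 1, 2), Set.of(3, 1, 2)));
        System.out.println("under-replicated: " + countUnderReplicated(snapshot));
    }
}
```

A nonzero count that persists usually points at one of the two causes above: a follower that is slow, or one that is stuck.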

Closing Thoughts

Kafka replication is one of the reasons the platform can proudly claim "durable and scalable". But as with any safety net, it comes with costs: performance, complexity, and operational tuning.

If you take away anything from this, let it be this:

- Always replicate your partitions.

- Always monitor your in-sync replicas.

- And don't blindly assume replication means "my data is safe."

Replication in Kafka is beautifully engineered, but it's not magic. It's your job to configure it right, monitor it diligently, and know when to trade speed for safety.