File Transfer in Event-Driven Architectures

When organizations have invested in Apache Kafka for event streaming, building separate infrastructure for file transfers creates operational complexity. Streamsend offers a technical approach: stream files of any size and content type directly through an existing Kafka topic, alongside events. Yes, it is a fancy way to send a file, but sometimes it really makes sense.

The Problem: Files Don't Fit in Event Pipelines

Most organizations end up with a hybrid architecture: events flow through Kafka while files travel through separate channels like FTP, rsync, scp, S3 uploads, or other traditional file transfer protocols. Most file-sender utilities are decades old, but they are deeply baked into how files get moved around.

Kafka has solved the problem of sending transactions (the eventification of business data), but files, sadly, remain unloved in the streaming world.

Separation of the data plumbing for events and files creates operational complexity, inconsistent retry mechanisms, and missed opportunities for unified data flows.

Consider these scenarios:

- Insurance providers may need to send updated policy PDFs alongside policy update events

- Edge field teams with poor connectivity need to upload thousands of drone images with reliable retry mechanisms: secure, parallel send-and-forget

- High-tech manufacturing: multi-threaded, concurrent sending of high-volume image captures from production lines to big-data storage

- Retail operators: upload receipts, images, and documents for hundreds of outlets using the same plumbing as event-driven point-of-sale transactions, with the same security, capacity, service agreements and data sovereignty.

Traditional file transfer utilities (by and large) work sequentially, fail without sophisticated retry logic, and operate completely outside the streaming infrastructure.

The Streamsend Approach: Files as Chunked Events

Streamsend implements file transfer as chunked events, making files compatible with Kafka infrastructure. Here's how it works:

Automatic Chunking and Reassembly

Streamsend automatically splits files into message-sized chunks that respect your Kafka cluster's max.message.bytes configuration, which defaults to about 1MB. Streaming 1MB files is easy, but unless we are talking about the Derek Zoolander Center for Kids Who Can't Read Good, most files exceed 1MB. A 100MB video file becomes 101 ordered chunks of ~996KB each, streamed through your topic partitions and reassembled bit-perfectly at the destination. 996KB is about 95% of the message size limit, leaving room for the message key and the message headers that are used for reassembly.
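To make the chunk arithmetic concrete, here is a minimal Python sketch, assuming Kafka's default limit of 1,048,588 bytes and decimal megabytes. The header fields (`seq`, `total`, `md5`) and the 95% headroom rule are illustrative assumptions, not Streamsend's actual wire format; in Kafka these values would travel as the record key and headers rather than inside a dict.

```python
import hashlib

DEFAULT_MAX_MESSAGE_BYTES = 1_048_588  # Kafka's default message size limit (~1MB)

def chunk_size(max_message_bytes: int = DEFAULT_MAX_MESSAGE_BYTES) -> int:
    """Payload bytes per chunk: ~95% of the limit, leaving room for key and headers."""
    return max_message_bytes * 95 // 100

def chunk_count(file_size: int, size: int) -> int:
    """Ceiling division; a 0-byte file still produces one (empty) chunk."""
    return max(1, -(-file_size // size))

def split(data: bytes, size: int) -> list:
    """Split file bytes into ordered chunks carrying hypothetical reassembly headers."""
    total = chunk_count(len(data), size)
    digest = hashlib.md5(data).hexdigest()
    return [
        {"seq": i, "total": total, "md5": digest, "payload": data[i * size:(i + 1) * size]}
        for i in range(total)
    ]

def reassemble(chunks: list) -> bytes:
    """Order chunks by sequence number, concatenate, and verify the MD5 digest."""
    ordered = sorted(chunks, key=lambda c: c["seq"])
    if [c["seq"] for c in ordered] != list(range(ordered[0]["total"])):
        raise ValueError("missing or duplicate chunks")
    data = b"".join(c["payload"] for c in ordered)
    if hashlib.md5(data).hexdigest() != ordered[0]["md5"]:
        raise ValueError("MD5 mismatch after reassembly")
    return data
```

Under these assumptions `chunk_size()` is 996,158 bytes (~996KB) and `chunk_count(100_000_000, chunk_size())` is 101, matching the 100MB example. Sorting by `seq` means chunks may arrive in any order and still reassemble to the original bytes.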

Small files get an efficiency boost through automatic compressed bin-packing — multiple small files are packed into single messages until the size limit is reached, reducing overhead and improving throughput.
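Compressed bin-packing can be sketched as first-fit-decreasing over zlib-compressed files. This is an assumed strategy for illustration only: the article does not say which packing heuristic or compression codec Streamsend uses, and the per-entry cost here (compressed bytes plus path length) ignores any framing overhead.

```python
import zlib

def pack_small_files(files: dict, limit: int) -> list:
    """First-fit-decreasing bin-packing of zlib-compressed files into message-sized bins."""
    # Compress each file, then place the largest compressed blobs first.
    compressed = sorted(
        ((name, zlib.compress(data)) for name, data in files.items()),
        key=lambda item: len(item[1]),
        reverse=True,
    )
    bins = []   # each bin is a list of (path, compressed_bytes)
    free = []   # remaining capacity of each bin, in bytes
    for name, blob in compressed:
        cost = len(blob) + len(name.encode())  # payload plus its path, a rough estimate
        if cost > limit:
            raise ValueError(f"{name} is too large to pack; chunk it instead")
        for i, room in enumerate(free):
            if cost <= room:
                bins[i].append((name, blob))
                free[i] -= cost
                break
        else:
            # No existing bin has room: open a new message.
            bins.append([(name, blob)])
            free.append(limit - cost)
    return bins
```

Each bin would become one Kafka message; the receiver decompresses every entry and writes it under its path.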

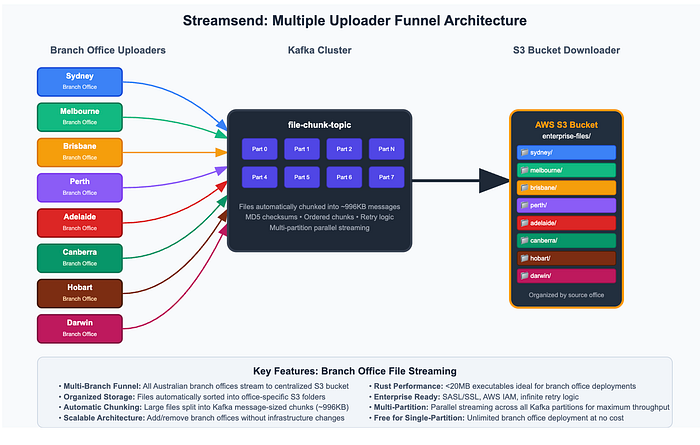

Many-to-One (and Many-to-Many) Architecture

The architecture supports multiple uploaders sending to centralized downloaders. Expanding a point-to-point file-sender pipeline in this way enables:

- Fan-in patterns: Hundreds of edge devices streaming to a single central processor

- Fan-out patterns: Files replicated to multiple destinations simultaneously

- Geographic distribution: Stream files across regions using existing Kafka infrastructure
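In a fan-in topology, chunks from many uploaders arrive interleaved on the same topic, so the downloader has to keep them apart. The sketch below groups chunks by a (uploader, path) key; the message tuple layout is a hypothetical stand-in, not Streamsend's format.

```python
from collections import defaultdict

def demultiplex(messages):
    """Group interleaved chunks from many uploaders and yield each file once complete.

    Each message is assumed to be (uploader_id, file_path, seq, total, payload).
    """
    pending = defaultdict(dict)  # (uploader_id, file_path) -> {seq: payload}
    for uploader, path, seq, total, payload in messages:
        key = (uploader, path)
        pending[key][seq] = payload  # a duplicate seq just overwrites the same slot
        if len(pending[key]) == total:
            parts = pending.pop(key)
            yield uploader, path, b"".join(parts[i] for i in range(total))
```

Keying on the uploader as well as the path lets two edge devices send files with the same name without their chunks being mixed. A late duplicate arriving after its file completes would open a new, never-finished entry, so a real downloader would also need to remember completed files.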

What kind of files can be streamed?

Files must be readable and static: a file is created with a unique name (or path), its data is written, and then it is closed.

The Streamsend Uploader doesn't care what is inside the file: it simply chunks the file along byte boundaries and produces the chunks to the topic. The file contents can be text or binary, encrypted or compressed, in any character set. A file can be 0 bytes, 1 byte or tens of gigabytes; the largest file tested is 27GB. It can be a document, image, video, executable, text, archive or any other content that can be saved to a file. Entire directory trees are automatically bin-packed, and the tree is reproduced at the Downloader.
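Reproducing a directory tree only requires that each file travel with its path relative to the upload root. The sketch below shows one way to do that, assuming POSIX-style relative paths as the key; it is an illustration, not Streamsend's implementation, and it does not preserve empty directories, ownership or permissions (consistent with the limits described next).

```python
from pathlib import Path

def collect_tree(root: str) -> dict:
    """Read every regular file under root, keyed by its POSIX-style relative path."""
    base = Path(root)
    return {
        p.relative_to(base).as_posix(): p.read_bytes()
        for p in sorted(base.rglob("*"))
        if p.is_file()
    }

def recreate_tree(dest: str, files: dict) -> None:
    """Recreate the tree under dest, creating subdirectories as needed."""
    for rel, data in files.items():
        parts = rel.split("/")
        # Refuse absolute paths and parent-directory hops so a sender cannot write outside dest.
        if rel.startswith("/") or ".." in parts:
            raise ValueError(f"unsafe relative path: {rel}")
        target = Path(dest, *parts)
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_bytes(data)
```

The path check matters on the receiving side: a downloader accepting files from many uploaders should never trust a path enough to let it escape the destination directory.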

It is not for open files that are still changing, for live-streaming audio/video media, or for restorable backups that require special file types (such as block or character device files and sparse files) or replication of file ownership and permissions. It is also (currently!) not for data that requires stream processing: there is no capability to associate a schema with a file chunk.

From Kafka Connect to Rust: Performance Improvements

The Streamsend file-streaming pipeline began as Kafka Connect plugins but has been rewritten in Rust to address deployment and performance requirements.

Why the Rust Rewrite?

Size and Portability: The Rust uploader is a compact executable (~20MB), perfect for enterprise servers, containers or small edge devices.

Performance: Rust's memory management enables handling of larger files. Testing has included a 27GB file on a sender with 16GB of memory, using the available swap allocation. No JVM is required.

Stateless Operation: 100% in-memory operation means no filesystem dependencies for clustering, enabling true horizontal scaling.

Better Threading: Rust's concurrency model enables efficient multi-threaded operations without garbage collection pauses.

Built for Kafka: Connecting to a database has been made easy; connecting to Kafka frequently remains too hard. Streamsend is a relatively rare thing: a Kafka client application that attempts to automate away common pitfalls, including auto-detection of message size limits and partition counts, helpful assumptions for missing but derivable configuration properties, and sensible automation when failures occur.
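As an example of a "derivable" property, the largest record a producer can send is bounded both by the server (the topic's max.message.bytes, which falls back to the broker's message.max.bytes, default 1,048,588) and by the client (the Java producer's max.request.size, default 1,048,576). The sketch below derives the effective limit from plain dicts; in practice the values would be read through the Kafka Admin API's describeConfigs. The precedence shown is a simplifying assumption, not Streamsend's logic.

```python
BROKER_DEFAULT = 1_048_588    # Kafka default for message.max.bytes
PRODUCER_DEFAULT = 1_048_576  # Java producer default for max.request.size

def effective_message_limit(topic_config: dict, broker_config: dict, producer_config: dict) -> int:
    """Derive the largest record this producer can send to this topic."""
    # A topic-level max.message.bytes overrides the broker-wide message.max.bytes.
    server_limit = int(
        topic_config.get("max.message.bytes")
        or broker_config.get("message.max.bytes")
        or BROKER_DEFAULT
    )
    # The producer refuses requests larger than its own max.request.size.
    client_limit = int(producer_config.get("max.request.size") or PRODUCER_DEFAULT)
    return min(server_limit, client_limit)
```

Taking the minimum explains a common pitfall: raising a topic's limit to 10MB has no effect until the producer's own limit is raised too.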

Deployment Flexibility

Rust uploaders run natively on:

- Linux AMD64 (command line and Docker)

- macOS (command line)

- Kubernetes using the docker images

The lightweight footprint makes them ideal for constrained environments like manufacturing equipment, point-of-sale systems, and mobile deployments.

Partitioning and Throughput Optimization

Streamsend detects the Kafka topic's partition count and adapts its behavior accordingly:

Free Edition (Single Partition)

- Unlimited deployment of uploaders and downloaders

- Files stream through the first partition of the topic

- Fast enough for most use cases

- Compatible with all Kafka distributions

Pay Edition (Multi-Partition)

- Partition utilization: auto-detection of the partition count so that Uploaders stream file-chunks across all partitions simultaneously. The Downloader automatically starts a consumer thread for each partition and manages correct reassembly of files, with MD5 verification on completion

- Parallel throughput: Utilizes available network interface capacity

- Advanced output sinks: Direct streaming to an S3 bucket instead of a local filesystem
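The multi-partition behaviour can be sketched with in-memory lists standing in for partitions and threads standing in for per-partition consumers. Round-robin assignment of chunks to partitions is an assumption for illustration; the article only says that chunks are streamed across all partitions and reassembled with MD5 verification.

```python
import hashlib
import threading

def spread(chunks: list, partitions: int) -> list:
    """Assign (seq, payload) pairs to partitions round-robin (hypothetical scheme)."""
    lanes = [[] for _ in range(partitions)]
    for seq, payload in enumerate(chunks):
        lanes[seq % partitions].append((seq, payload))
    return lanes

def download(lanes: list, total: int, expected_md5: str) -> bytes:
    """Run one consumer thread per partition, then reassemble by seq and verify MD5."""
    received = {}
    lock = threading.Lock()

    def consume(lane):
        for seq, payload in lane:
            with lock:
                received[seq] = payload

    threads = [threading.Thread(target=consume, args=(lane,)) for lane in lanes]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    if len(received) != total:
        raise ValueError("missing chunks")
    data = b"".join(received[i] for i in range(total))
    if hashlib.md5(data).hexdigest() != expected_md5:
        raise ValueError("MD5 mismatch")
    return data
```

Because each chunk carries its sequence number, the order in which partitions are drained does not matter; only the final concatenation is ordered.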

Flexible Output Sink Options

Downloaders consume the chunks and write the reassembled files to one of two output sinks:

Local Filesystem

Traditional file reconstruction with MD5 verification and subdirectory preservation.
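A common way to combine MD5 verification with a local filesystem sink is to stream into a temporary file in the target directory and only move it into place once the digest matches. This is a general technique shown as an assumption, not a description of Streamsend's downloader.

```python
import hashlib
import os
import tempfile

def write_verified(path: str, chunks, expected_md5: str) -> None:
    """Stream chunks to a temp file, verify MD5, then atomically move it into place."""
    directory = os.path.dirname(path) or "."
    os.makedirs(directory, exist_ok=True)  # recreate subdirectories as needed
    md5 = hashlib.md5()
    fd, tmp = tempfile.mkstemp(dir=directory, prefix=".partial-")
    try:
        with os.fdopen(fd, "wb") as out:
            for chunk in chunks:
                md5.update(chunk)
                out.write(chunk)
        if md5.hexdigest() != expected_md5:
            raise ValueError(f"MD5 mismatch for {path}")
        os.replace(tmp, path)  # atomic rename within the same filesystem
    except BaseException:
        # Never leave a half-written or corrupt file behind.
        os.remove(tmp)
        raise
```

Readers of the output directory therefore see either the complete, verified file or nothing at all.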

S3 Integration

Stream directly from multiple uploaders to S3 buckets, eliminating intermediate storage requirements. Perfect for cloud-native architectures.
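Streaming ~1MB Kafka chunks into S3 without intermediate storage typically means an S3 multipart upload, where every part except the last must be at least 5 MiB and an upload may have at most 10,000 parts. The sketch below only shows the buffering of chunks into valid parts; the actual upload calls are omitted, and this is an assumption about how such a sink could work rather than Streamsend's code.

```python
MIN_PART = 5 * 1024 * 1024  # S3 minimum part size (all parts except the last)
MAX_PARTS = 10_000          # S3 maximum number of parts per multipart upload

def group_into_parts(chunks, part_size: int = MIN_PART):
    """Buffer small Kafka chunks into S3 multipart parts of at least part_size bytes."""
    if part_size < MIN_PART:
        raise ValueError("S3 parts other than the last must be at least 5 MiB")
    buffer = bytearray()
    parts = 0
    for chunk in chunks:
        buffer += chunk
        if len(buffer) >= part_size:
            parts += 1
            if parts > MAX_PARTS:
                raise ValueError("too many parts; increase part_size")
            yield bytes(buffer)
            buffer.clear()
    if buffer or parts == 0:
        if parts + 1 > MAX_PARTS:
            raise ValueError("too many parts; increase part_size")
        yield bytes(buffer)  # the final part may be smaller than 5 MiB
```

With 996,158-byte chunks, six chunks fill each 5 MiB part, so a downloader holds only a few megabytes in memory per file regardless of the file's total size.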

Future Integrations

Planned support for Azure Blob Storage and Google Cloud Storage expands multi-cloud compatibility.

When to Choose File Streaming

File streaming through Kafka makes sense when:

An Event Pipeline Already Exists

"We have Kafka for events, but also some files…" When organizations are already operating Kafka clusters, extending them for file transfer leverages existing infrastructure, monitoring, and operational expertise.

The Coat-Check Pattern Isn't Feasible

Coat-checking (also known as the claim-check pattern) sends a locator for the file instead of the file itself. This works well on cloud platforms, but if an object store is not available or practical, streaming files directly through the Kafka event infrastructure maintains architectural consistency.
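For comparison, the coat-check pattern can be sketched in a few lines; a plain dict stands in for the object store and the `files/` key prefix is hypothetical. The event carries only a small locator, which is why both producer and consumer must be able to reach the same store.

```python
import hashlib
import uuid

def claim_check_message(data: bytes, store: dict) -> dict:
    """Park the file in an object store and return a small event carrying only a locator."""
    key = f"files/{uuid.uuid4()}"
    store[key] = data  # stands in for an object-store PUT
    return {"locator": key, "size": len(data), "md5": hashlib.md5(data).hexdigest()}

def redeem(message: dict, store: dict) -> bytes:
    """Fetch the file named by the locator; the consumer needs access to the same store."""
    data = store[message["locator"]]
    if hashlib.md5(data).hexdigest() != message["md5"]:
        raise ValueError("stored object does not match the event")
    return data
```

When no shared object store exists, the locator has nothing to point at, and streaming the bytes themselves as chunks becomes the simpler option.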

You Need Reliable Retry Logic

Kafka's built-in retry mechanisms and failure handling provide robustness beyond traditional file transfer utilities: send-and-forget over unreliable networks, with no data loss or data duplication.
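On the producer side, Kafka's standard settings enable.idempotence=true and acks=all stop producer retries from writing duplicate records. A consumer that restarts can still re-read records after its last committed offset, so a downloader also benefits from deduplicating chunks. The class below is a hypothetical sketch of that idea, not Streamsend's API.

```python
class ChunkSink:
    """Deduplicate redelivered chunks so each file is assembled exactly once."""

    def __init__(self):
        self.chunks = {}     # (file_id, seq) -> payload
        self.duplicates = 0

    def accept(self, file_id: str, seq: int, payload: bytes) -> bool:
        key = (file_id, seq)
        if key in self.chunks:
            self.duplicates += 1  # a retry or consumer replay delivered this chunk again
            return False
        self.chunks[key] = payload
        return True

    def assemble(self, file_id: str, total: int):
        """Return the file once all chunks are present (removing them), else None."""
        keys = [(file_id, seq) for seq in range(total)]
        if not all(k in self.chunks for k in keys):
            return None
        return b"".join(self.chunks.pop(k) for k in keys)
```

Keying on (file, sequence number) turns at-least-once delivery into exactly-once file output, as long as completed files are also recorded so that very late duplicates are ignored.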

Scalability Requirements

Multiple uploaders can overwhelm traditional file servers, while Kafka's partition-based architecture scales well with load. Scaling a Kafka cluster is becoming easier, and scaling file-send capacity together with event-send capacity at peak times has obvious benefits.

Security and Encryption Requirements

Kafka's mature security model provides robust authentication, strong encryption including flexible cipher configuration, role-based access control, audit and observability. Traditional file senders rarely offer anything comparable.
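As an illustration of that security model, the sketch below builds client properties for TLS encryption plus SCRAM authentication. Streamsend's own configuration keys are not described here; these are librdkafka (confluent-kafka-python) property names, SCRAM-SHA-512 is one of several supported SASL mechanisms, and the environment variable names are hypothetical.

```python
import os

def secure_client_config(bootstrap_servers: str, cipher_suites: str = "") -> dict:
    """Build librdkafka-style properties for SASL authentication over TLS."""
    config = {
        "bootstrap.servers": bootstrap_servers,
        "security.protocol": "SASL_SSL",  # SASL authentication on a TLS-encrypted connection
        "sasl.mechanism": "SCRAM-SHA-512",
        # Hypothetical environment variable names; keeps secrets out of source code.
        "sasl.username": os.environ["KAFKA_USERNAME"],
        "sasl.password": os.environ["KAFKA_PASSWORD"],
    }
    if cipher_suites:
        config["ssl.cipher.suites"] = cipher_suites  # optionally restrict allowed TLS ciphers
    return config
```

The same properties would secure both event producers and file uploaders, which is the point: one security configuration for both kinds of traffic.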

Broad Compatibility

Streamsend integrates seamlessly with:

- Apache Kafka (including Strimzi and Cloudera distributions)

- Confluent Platform and Confluent Cloud

- AWS MSK (Managed Streaming for Kafka)

- Any Kafka-compatible platform supporting standard producer/consumer APIs

Getting Started

For those interested in implementing file streaming through Kafka infrastructure:

Documentation: Comprehensive guides available at streamsend.io

Free Download: Get unlimited single-partition deployment at Downloads

Community: Technical questions and feedback via GitHub Issues

Contact: For consulting and enterprise deployment support, reach out to markteehan@streamsend.io

In Summary

In 1966, Star Trek introduced a fancy way to send a person: the teleporter, with dematerialization and beaming. It was cool, but it sometimes had an eyebrow-raising service level that might concern a modern data engineer. But sure, it was a fancy way to send a person.

Streamsend Uploader & Downloader is, ultimately, a fancy way to send a file with an improved tradeoff between drama and survival.

By integrating file transfer with existing Kafka infrastructure and treating files as chunked events, it now enables unified data pipelines — events and files — and leverages existing Kafka operational practices to do more.

Whether you're streaming drone images from remote locations, distributing policy documents alongside events, or building distributed manufacturing data pipelines, Streamsend extends your Kafka cluster to handle file transfer workloads.