Natural Language Processing (NLP) has a relatively long history. To solve concrete challenges, it is often broken down into smaller problems such as text classification, Named Entity Recognition (NER), and summarization.

Each of these smaller problems is solved by its own specialized model, and sometimes we must also prepare a large enough training dataset.

For example, to use text classification to detect when a guest asks about check-in time, we need to create a list of similar questions for the `check_in_time` intent in the following format (using Rasa NLU syntax):

```yaml
nlu:
- intent: check_in_time
  examples: |
    - When can I check-in?
    - What time am I allowed to check-in?
    - Can you tell me the check-in time?
- intent: pool
  examples: |
    - Is the pool available for guests to use?
    - Could you let me know if there is a swimming pool here?
...
```

Then we feed this file to the intent classification model for training.
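To make "training" concrete, here is a deliberately tiny stand-in for the classifier: a multinomial Naive Bayes model in plain Python, trained on the phrases above. It is not what Rasa runs internally (Rasa uses its own configurable NLU pipeline); the class and function names are hypothetical, and the point is only that every new intent or phrase means refitting the model.

```python
import math
import re
from collections import Counter, defaultdict

# The training phrases from the Rasa NLU example, as plain Python data.
TRAINING_DATA = {
    "check_in_time": [
        "When can I check-in?",
        "What time am I allowed to check-in?",
        "Can you tell me the check-in time?",
    ],
    "pool": [
        "Is the pool available for guests to use?",
        "Could you let me know if there is a swimming pool here?",
    ],
}


def tokenize(text):
    # Lowercase and keep word characters: "check-in" -> ["check", "in"].
    return re.findall(r"\w+", text.lower())


class NaiveBayesIntentClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, data):
        self.word_counts = defaultdict(Counter)  # intent -> word frequencies
        self.doc_counts = Counter()              # intent -> number of phrases
        self.vocab = set()
        for intent, phrases in data.items():
            for phrase in phrases:
                tokens = tokenize(phrase)
                self.word_counts[intent].update(tokens)
                self.doc_counts[intent] += 1
                self.vocab.update(tokens)
        self.total_docs = sum(self.doc_counts.values())
        return self

    def predict(self, text):
        tokens = [t for t in tokenize(text) if t in self.vocab]  # skip unseen words
        best_intent, best_score = None, -math.inf
        for intent, n_docs in self.doc_counts.items():
            # log P(intent) + sum of log P(word | intent)
            score = math.log(n_docs / self.total_docs)
            total_words = sum(self.word_counts[intent].values())
            for token in tokens:
                count = self.word_counts[intent][token]
                score += math.log((count + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_intent, best_score = intent, score
        return best_intent


clf = NaiveBayesIntentClassifier().fit(TRAINING_DATA)
print(clf.predict("What time is check-in?"))        # check_in_time
print(clf.predict("Do you have a swimming pool?"))  # pool
```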

As the number of intents grows, this file gets bigger and training takes longer. And whenever we add new intents or training phrases, we must retrain the model.

With the rise of Large Language Models (LLMs) like ChatGPT, NLP problems have become much easier to tackle. With a zero-shot prompt, we just put the guest's question and the list of intents into the prompt, without any examples:

```
- System message: |
    You will classify the user's question into one of these intents: check_in_time, pool, check_out_time.
    Return only the intent name. Don't add any extra words.
- User: Do you have pools?
- Assistant: pool
```

So no heavy training is needed here. If an intent is detected incorrectly, we just add more examples to the prompt (a few-shot prompt).
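The prompt above can also be assembled programmatically. This sketch assumes the widely used chat format of `{"role": ..., "content": ...}` messages (as in OpenAI's Chat Completions API) and leaves out the actual API call; `build_messages` and `parse_intent` are hypothetical helpers. Passing labelled examples turns the zero-shot prompt into a few-shot one by inserting them as earlier user/assistant turns.

```python
# Intents and instructions from the zero-shot prompt above.
INTENTS = ["check_in_time", "pool", "check_out_time"]
SYSTEM_TEMPLATE = (
    "You will classify the user's question into one of these intents: {intents}.\n"
    "Return only the intent name. Don't add any extra words."
)


def build_messages(question, intents, examples=None):
    """Return a chat-style list of {"role", "content"} messages.

    With examples=None this is a zero-shot prompt. Passing (question, intent)
    pairs makes it few-shot: each pair becomes a user turn plus the expected
    assistant answer, placed before the real question.
    """
    messages = [{"role": "system",
                 "content": SYSTEM_TEMPLATE.format(intents=", ".join(intents))}]
    for example_question, example_intent in examples or []:
        messages.append({"role": "user", "content": example_question})
        messages.append({"role": "assistant", "content": example_intent})
    messages.append({"role": "user", "content": question})
    return messages


def parse_intent(reply, intents):
    """Normalize the model's reply; return None if it is not a known intent."""
    cleaned = reply.strip().strip(".").lower()
    return cleaned if cleaned in intents else None


# Few-shot variant: add a labelled example when "pool" keeps being missed.
messages = build_messages("Do you have pools?", INTENTS,
                          examples=[("Is there a swimming pool?", "pool")])
```

Validating the reply against the known intent list matters because an LLM can occasionally answer with extra words despite the instruction; `parse_intent` returns `None` in that case so the caller can retry or fall back.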

Beyond intent classification, NER, summarization, and other NLP tasks can also be implemented easily with prompt engineering alone.

So do we no longer need traditional NLP methods? I think for personal projects, the answer is YES! With limited human and computing resources, an indie project should use a cloud LLM for its NLP tasks.

BUT for enterprise projects, traditional NLP has advantages in two main areas: privacy and computing resources. Let's see why.

Privacy

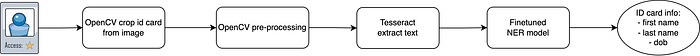

If chatbot training data doesn't make you think more seriously about privacy, let me give another example: At Altitude, we create a comprehensive guest experience platform that empowers guests to seamlessly manage every aspect of their hotel journey — from pre-check-in and check-in to their stay and checkout. During check-in, they need to upload an ID card or driver's license via the app for verification.

Information from an ID card can also be used in downstream tasks like fraud detection. To support this, we need to extract the ID card details into structured fields, including first name, last name, date of birth, and address.

ID card information makes you think seriously about data privacy, doesn't it?

If we use cloud-based Multimodal LLMs (MLLMs) like ChatGPT, Gemini, etc., the task becomes relatively easy: upload the image to the cloud, write a prompt, and receive a structured JSON output.
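Whichever model produces it, the JSON reply still needs to be validated before it is stored. A minimal sketch, assuming the prompt asks for the keys `first_name`, `last_name`, `date_of_birth`, and `address` (these key names and `IdCardInfo` are hypothetical); the MLLM call itself is omitted.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class IdCardInfo:
    # The four fields named in the article; the key names are hypothetical.
    first_name: str
    last_name: str
    date_of_birth: str
    address: str


def parse_id_card_json(raw):
    """Validate the model's JSON reply and convert it into an IdCardInfo."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    expected = {f.name for f in fields(IdCardInfo)}
    missing = expected - set(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    # Extra keys the model may add are ignored; values are coerced to strings.
    return IdCardInfo(**{name: str(data[name]) for name in expected})
```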

However, once the guest checks out, all images and ID card information must be deleted from our system. While we can ensure this deletion within our system, what happens to the data we uploaded to the MLLM cloud? How can we delete it and ensure it has been completely removed? These are always challenging questions when transferring data externally.

Ideally, we should keep all sensitive data within our system, process it with our own algorithms, and retain the ability to delete it at any time.

You might say that LLMs and MLLMs don't have to be cloud-based; we could self-host open-source models such as LLaMA or DeepSeek. That brings us to the next problem.

Computing Resources

For the option of self-hosting an MLLM, let's take the example of LLaMA 3.2-Vision-11B, the smallest vision model from the LLaMA 3.2 Vision series.

This model has 11 billion parameters, and each parameter is stored in 2 bytes (FP16/BF16). Therefore, just to load all the weights into memory, we need at least 22GB of RAM for CPU inference or 22GB of VRAM for GPU inference.

Of course, using a CPU is not ideal because the compute performance (FLOPS) of a CPU is significantly lower than that of a GPU, and memory bandwidth is also a critical factor. You can learn more about the impact of memory bandwidth in the article A Guide to LLM Inference and Performance.

Assuming that the CPU is powerful enough to handle inference and that the process is memory-bound, the inference speed (with a batch size of 1) would be:

$$
\text{time/token} = \frac{\text{total number of bytes moved (the model weights)}}{\text{accelerator memory bandwidth}}
$$

With DDR5 RAM bandwidth at 69 GB/s, the time per token can be calculated as follows:

$$
\frac{2 \times 11 \times 10^{9}\ \text{bytes}}{69\ \text{GB/s}} \approx 319\ \text{ms/token}
$$

This means the system can generate only about 3 tokens per second, which is too slow for a production environment.

If we use the cheapest instance with VRAM larger than 22GB, such as a g5 instance with one A10 GPU (24GB VRAM) and 600 GB/s memory bandwidth, the inference speed improves significantly:

$$
\frac{2 \times 11 \times 10^{9}\ \text{bytes}}{600\ \text{GB/s}} \approx 37\ \text{ms/token}
$$

This translates to about 27 tokens per second, roughly 9x faster than a CPU with DDR5 RAM.
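Both estimates follow directly from the memory-bound formula. This sketch reproduces them, assuming FP16 weights (2 bytes per parameter) and the bandwidth figures quoted in the text; it ignores compute time, the KV cache, and image encoding, so the numbers are best-case values. `time_per_token_ms` is a hypothetical helper name.

```python
def time_per_token_ms(num_params, bandwidth_gb_s, bytes_per_param=2):
    """Memory-bound estimate at batch size 1: every weight is read once per token."""
    bytes_moved = num_params * bytes_per_param      # e.g. 2 * 11e9 = 22e9 bytes
    seconds = bytes_moved / (bandwidth_gb_s * 1e9)  # GB/s -> bytes/s
    return seconds * 1000


cpu_ms = time_per_token_ms(11e9, 69)   # DDR5 RAM, 69 GB/s
gpu_ms = time_per_token_ms(11e9, 600)  # A10 GPU, 600 GB/s

print(f"CPU: {cpu_ms:.0f} ms/token, {1000 / cpu_ms:.1f} tokens/s")  # CPU: 319 ms/token, 3.1 tokens/s
print(f"GPU: {gpu_ms:.0f} ms/token, {1000 / gpu_ms:.1f} tokens/s")  # GPU: 37 ms/token, 27.3 tokens/s
print(f"Speed-up: {cpu_ms / gpu_ms:.1f}x")                          # Speed-up: 8.7x
```

The speed-up is simply the bandwidth ratio 600 / 69, about 8.7, which the text rounds to 9x.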

However, in a production environment where many users might use the system concurrently, a single A10 GPU is insufficient. Upgrading to an instance with 4 A10 GPUs, like g5.12xlarge, costs approximately $4,000 per month, which is prohibitively expensive for most startups and small businesses.

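If we approach the problem with a traditional pipeline instead, OCR (Tesseract) first extracts the text from the ID card image, and an NER model then picks out the fields we need.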

The heaviest models in this pipeline are the LSTM in Tesseract and the NER model, but both are still far smaller than the 11B-parameter MLLM.

If we use a BERT-base model fine-tuned for NER, it has only about 110M parameters.
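To turn the NER model's token-level output into the structured fields listed earlier, the tags have to be grouped into spans. The sketch below assumes the model emits BIO tags (`B-` begins an entity, `I-` continues it, `O` is outside) with a hypothetical label set `FIRST_NAME`, `LAST_NAME`, `DOB`, `ADDRESS`; neither the tag scheme nor the labels come from the article, and `bio_to_fields` is a hypothetical helper.

```python
# Hypothetical mapping from NER labels to output field names.
FIELD_BY_LABEL = {
    "FIRST_NAME": "first_name",
    "LAST_NAME": "last_name",
    "DOB": "date_of_birth",
    "ADDRESS": "address",
}


def bio_to_fields(tokens, labels):
    """Group BIO-tagged OCR tokens into entity spans and map them to fields.

    `labels` holds one tag per token, e.g. "B-FIRST_NAME", "I-ADDRESS", "O".
    """
    extracted = {}
    current_type, current_tokens = None, []

    def flush():
        # Close the open span; keep only the first span found per field.
        if current_type in FIELD_BY_LABEL and current_tokens:
            extracted.setdefault(FIELD_BY_LABEL[current_type], " ".join(current_tokens))

    for token, label in zip(tokens, labels):
        prefix, _, entity_type = label.partition("-")
        if prefix == "I" and entity_type == current_type:
            current_tokens.append(token)  # continue the open span
        else:
            flush()
            if prefix in ("B", "I"):      # a stray I- tag starts a new span
                current_type, current_tokens = entity_type, [token]
            else:                         # "O": outside any entity
                current_type, current_tokens = None, []
    flush()
    return extracted


tokens = ["DRIVER", "LICENSE", "JANE", "DOE", "1990-01-01", "1", "MAIN", "ST"]
labels = ["O", "O", "B-FIRST_NAME", "B-LAST_NAME", "B-DOB",
          "B-ADDRESS", "I-ADDRESS", "I-ADDRESS"]
print(bio_to_fields(tokens, labels))
```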

Therefore, these models are suitable for deployment on instances with only CPU and RAM, without requiring a GPU, making them much more cost-effective.
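A rough back-of-the-envelope comparison, reusing the memory-bound formula and the 69 GB/s DDR5 figure from above. Assumptions: BERT weights are kept in FP32 (4 bytes per parameter), the figures cover weights only, and the helper names are hypothetical. One further difference works in BERT's favour: an encoder tags every token of its input in a single forward pass, whereas a generative LLM needs one pass per output token.

```python
DDR5_BANDWIDTH_GB_S = 69  # DDR5 bandwidth figure used earlier in the article


def weights_gb(num_params, bytes_per_param):
    """Memory needed to hold the weights alone (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def memory_bound_pass_ms(num_params, bytes_per_param, bandwidth_gb_s):
    """Rough lower bound for one forward pass if all weights are read from RAM once."""
    return weights_gb(num_params, bytes_per_param) / bandwidth_gb_s * 1000


MODELS = {
    # name: (parameters, bytes per parameter); precisions are assumptions
    "BERT-base NER (FP32)": (110e6, 4),
    "LLaMA 3.2-Vision-11B (FP16)": (11e9, 2),
}

for name, (params, nbytes) in MODELS.items():
    print(f"{name}: {weights_gb(params, nbytes):.2f} GB, "
          f"~{memory_bound_pass_ms(params, nbytes, DDR5_BANDWIDTH_GB_S):.1f} ms per forward pass")
```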

Enhancing Traditional NLP with LLM

So should we rely only on traditional NLP techniques and ignore the current hype around LLMs? I believe LLMs can still add value in certain use cases.

Synthetic data

LLMs are very good at generating data. So if you have no training data, or want to enrich a small existing dataset for traditional NLP tasks, you can generate synthetic data with prompts.

For example, with a bot built using intent classification, we can ask the LLM to generate more questions for each intent.

```
System message: You are a guest at a hotel. What would you say to the staff when you want to ask about {intent}?
Give {num_expected_example} phrases. Each phrase should be a variation, with verbs and nouns in different parts of the sentence.
Use variations and synonyms of the keywords in these examples: {pre_examples}
Your phrases must be as short and clear as the examples.
Format the response as:
[
  "phrase 1", "phrase 2"
]
```

Fill in the variables `intent`, `num_expected_example`, and `pre_examples`, and we get a list of training phrases for that intent to feed into the intent classification model.
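A sketch of the glue code around this prompt, under a few assumptions: the template is a condensed, lightly edited version of the one above, the LLM call is omitted, and the reply is expected to be a JSON array of strings as the prompt requests. `build_prompt`, `parse_phrases`, and `to_rasa_nlu` are hypothetical helpers; the last one writes the phrases in the Rasa NLU layout used in the first example.

```python
import json

PROMPT_TEMPLATE = """You are a guest at a hotel. What would you say to the staff when you want to ask about {intent}?
Give {num_expected_example} phrases. Each phrase should be a variation, with verbs and nouns in different parts of the sentence.
Use variations and synonyms of the keywords in these examples: {pre_examples}
Your phrases must be as short and clear as the examples.
Format the response as:
["phrase 1", "phrase 2"]"""


def build_prompt(intent, num_expected_example, pre_examples):
    """Fill the template variables described in the text."""
    return PROMPT_TEMPLATE.format(
        intent=intent,
        num_expected_example=num_expected_example,
        pre_examples="; ".join(pre_examples),
    )


def parse_phrases(reply, existing=()):
    """Parse the JSON array reply, dropping blanks and case-insensitive duplicates."""
    phrases = json.loads(reply)
    if not isinstance(phrases, list):
        raise ValueError("expected a JSON array of phrases")
    seen = {p.strip().lower() for p in existing}
    result = []
    for phrase in phrases:
        key = phrase.strip().lower()
        if key and key not in seen:
            seen.add(key)
            result.append(phrase.strip())
    return result


def to_rasa_nlu(intent, phrases):
    """Render phrases in the Rasa NLU YAML layout."""
    lines = ["nlu:", f"- intent: {intent}", "  examples: |"]
    lines += [f"    - {p}" for p in phrases]
    return "\n".join(lines)
```

Deduplicating against the seed examples keeps the LLM from simply echoing them back as "new" training data.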

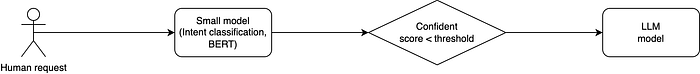

Using LLM as Fallback

One disadvantage of LLMs is their high demand for computing resources. However, if we handle 80% of requests with traditional NLP and route only the remaining 20% to an LLM, computing costs can drop significantly.

This is particularly effective if we deploy our model to a service that charges based on inference time rather than server running time.
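One common way to implement this routing is a confidence threshold: accept the local classifier's answer when it is confident and send everything else to the LLM. The sketch below assumes the local model returns an `(intent, confidence)` pair; `HybridIntentRouter` is a hypothetical name, both classifiers are placeholders for whatever models you deploy, and the 0.8 threshold is an illustrative value to tune on validation data.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class HybridIntentRouter:
    """Use the local classifier when it is confident; otherwise ask an LLM."""
    local_classifier: Callable[[str], Tuple[str, float]]  # returns (intent, confidence)
    llm_classifier: Callable[[str], str]                  # returns an intent
    threshold: float = 0.8  # hypothetical cut-off

    def classify(self, text: str) -> Tuple[str, str]:
        intent, confidence = self.local_classifier(text)
        if confidence >= self.threshold:
            return intent, "local"
        # Low confidence: fall back to the more expensive LLM.
        return self.llm_classifier(text), "llm"


# Stub models standing in for a real classifier and a real LLM call.
router = HybridIntentRouter(
    local_classifier=lambda t: ("pool", 0.95) if "pool" in t.lower() else ("check_in_time", 0.4),
    llm_classifier=lambda t: "check_out_time",
)
print(router.classify("Is the pool open?"))         # ('pool', 'local')
print(router.classify("When do I need to leave?"))  # ('check_out_time', 'llm')
```

Raising the threshold sends more traffic to the LLM (better accuracy, higher cost); lowering it keeps more requests local.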

This hybrid approach strikes a balance between keeping private data in-house and keeping computing costs acceptable.

Conclusion

A popular technique to reduce computing resource requirements for LLMs is quantization. However, this often comes at the cost of reduced model performance and an increased likelihood of hallucinations.

In the future, as LLMs become more compact and efficient while maintaining high performance (with fewer hallucinations), they may gradually replace traditional NLP models. For now, though, smaller language models continue to play a significant role in many commercial projects due to their practicality and resource efficiency.