You have the best security system money can buy. It uses artificial intelligence to stop hackers. Then one day, a hacker walks right through it. Not with some fancy new tool. With a simple trick that makes the AI see harmless code instead of dangerous malware.

This isn't science fiction. It's happening right now.

Researchers recently found that 94 percent of AI-powered security systems can be deceived this way. The very technology meant to protect us has hidden weaknesses.

I tested these attacks myself. Here's what I found, and what your organization can do about it.

Understanding the Basic Problem

First, let's talk about how AI security works.

Most modern security tools use machine learning to spot malware. They don't just look for known bad code. They learn patterns from thousands of examples. They notice things like:

- How much random data is in a file

- What functions the code uses

- How the file is structured
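To make the first of these concrete: "how much random data is in a file" is commonly measured as byte entropy. Here is a minimal sketch using only the Python standard library. The function name `byte_entropy` is my own choice for illustration, not something from a particular security product.

```python
import math
from collections import Counter

def byte_entropy(data: bytes) -> float:
    """Shannon entropy in bits per byte: 0.0 (uniform filler) up to 8.0 (looks random)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)
    # Sum p * log2(1/p) over every byte value that occurs in the data.
    return sum((c / total) * math.log2(total / c) for c in counts.values())

print(byte_entropy(b"A" * 1024))            # one repeated byte: no randomness
print(byte_entropy(bytes(range(256)) * 4))  # every byte value equally often
```

Packed or encrypted payloads tend to score near the top of this range, which is one reason a detector would pay attention to it.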

The AI gets really good at spotting bad patterns. But here's the weakness: it doesn't truly understand what it's looking at. It's just matching patterns.

Hackers found they can make small changes to malware that trick the AI without breaking the malware's function.

The Five Main Attack Methods

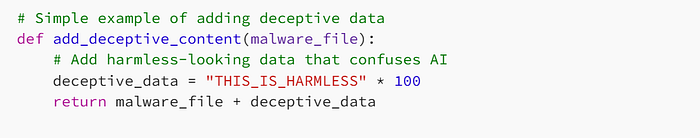

1. The Quick Trick Attack (FGSM)

This is the simplest method. Hackers make one small, calculated change: every feature the AI measures is nudged a tiny amount in whichever direction lowers its suspicion. The changes are so small that they don't affect how the malware works. But they're enough to confuse the AI.

Think of it like putting a fake mustache on a wanted criminal. The person is the same, but the recognition system gets fooled.

Here's what the code looks like:
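What follows is a minimal sketch of the idea, not a working evasion tool. It assumes a toy logistic-regression "detector" over three made-up numeric features. The weights, the sample, and the step size `eps` are all hypothetical values picked for illustration. Real malware features can't be changed this freely without breaking the file.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Toy detector: probability that feature vector x is malicious."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(weights, bias, x, y_true, eps):
    """Take one step of size eps along the sign of the loss gradient."""
    p = predict(weights, bias, x)
    # For logistic loss, d(loss)/d(x_i) = (p - y_true) * w_i
    grad = [(p - y_true) * w for w in weights]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

WEIGHTS, BIAS = [2.0, -1.0, 1.5], -1.0  # hypothetical trained model
sample = [0.9, 0.2, 0.8]                # hypothetical malware features
adversarial = fgsm(WEIGHTS, BIAS, sample, y_true=1.0, eps=0.5)

print("before:", predict(WEIGHTS, BIAS, sample))
print("after: ", predict(WEIGHTS, BIAS, adversarial))
```

The whole attack is a single gradient step, which is why it is fast and also why it sometimes fails.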

2. The Persistent Attack (PGD)

If the quick trick doesn't work, hackers use multiple small changes. They keep adjusting the code until the AI gets confused.

It's like trying different disguises until you find one that works.
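A rough sketch of that loop, again against a toy logistic-regression detector with hypothetical weights and features. Each round takes a small step of size `alpha`, then clips the result so the total change never exceeds `eps`. The loop stops as soon as the detector's score drops below 0.5.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Toy detector: probability that feature vector x is malicious."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(v):
    return (v > 0) - (v < 0)

def pgd(weights, bias, x, y_true, eps, alpha, steps):
    """Repeat small gradient-sign steps, projecting back into the eps-box around x."""
    current = list(x)
    for _ in range(steps):
        p = predict(weights, bias, current)
        if p < 0.5:  # the detector is already fooled, so stop early
            break
        grad = [(p - y_true) * w for w in weights]
        stepped = [c + alpha * sign(g) for c, g in zip(current, grad)]
        # Projection: keep every feature within eps of its original value.
        current = [min(max(s, x0 - eps), x0 + eps) for s, x0 in zip(stepped, x)]
    return current

WEIGHTS, BIAS = [2.0, -1.0, 1.5], -1.0  # hypothetical trained model
sample = [0.9, 0.2, 0.8]                # hypothetical malware features
adversarial = pgd(WEIGHTS, BIAS, sample, y_true=1.0, eps=0.5, alpha=0.07, steps=20)

print("before:", predict(WEIGHTS, BIAS, sample))
print("after: ", predict(WEIGHTS, BIAS, adversarial))
```

With a linear toy model the gradient direction never changes, so the loop just walks in a straight line. On a real nonlinear model, the gradient is recomputed at every step and the path bends.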

3. The Perfect Hidden Attack (C&W)

This method uses optimization to search for the smallest change that still fools the AI. The modifications are so subtle they're hard to detect.
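The real C&W attack runs an iterative optimizer against a neural network, which is too much for a short listing. To show the goal it is chasing, here is a simplified stand-in, not C&W itself: for a linear toy detector, the smallest possible change (in straight-line distance) that crosses the decision boundary has a closed form. The weights, features, and `overshoot` margin are hypothetical.

```python
import math

def score(weights, bias, x):
    """Raw score of a linear toy detector; positive means 'malicious'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def minimal_l2_evasion(weights, bias, x, overshoot=0.02):
    """Move x the shortest distance needed to land just past the boundary."""
    z = score(weights, bias, x)
    norm_sq = sum(w * w for w in weights)
    # Moving against the weight vector is the shortest route to the boundary.
    scale = (1.0 + overshoot) * z / norm_sq
    return [xi - scale * w for xi, w in zip(x, weights)]

WEIGHTS, BIAS = [2.0, -1.0, 1.5], -1.0  # hypothetical trained model
sample = [0.9, 0.2, 0.8]                # hypothetical malware features
adversarial = minimal_l2_evasion(WEIGHTS, BIAS, sample)

distance = math.sqrt(sum((a - s) ** 2 for a, s in zip(adversarial, sample)))
print("score before:", score(WEIGHTS, BIAS, sample))
print("score after: ", score(WEIGHTS, BIAS, adversarial))
print("distance moved:", distance)
```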

4. The AI vs AI Attack (GANs)

Here's where it gets interesting. Hackers use one AI to create malware that another AI can't detect. It's like having a master forger create fake documents.

5. The Blind Attack (Black-Box)

Sometimes hackers don't know how the security AI works. They just keep sending slightly modified files until one gets through.
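Here is a minimal sketch of that guess-and-check loop. The attacker only gets a yes/no verdict back and never sees the model. The detector, its hidden weights, the step size, and the query budget are all assumptions made up for this example.

```python
import random

def detector_verdict(features):
    """Stand-in for a remote detector. The attacker cannot see inside it."""
    hidden_score = 2.0 * features[0] - 1.0 * features[1] + 1.5 * features[2] - 1.0
    return hidden_score > 0  # True means "flagged as malware"

def black_box_attack(query, x, step=0.6, max_queries=500, seed=0):
    """Try random variations of x until one is not flagged, or the budget runs out."""
    rng = random.Random(seed)
    for n in range(1, max_queries + 1):
        candidate = [xi + rng.uniform(-step, step) for xi in x]
        if not query(candidate):
            return candidate, n
    return None, max_queries

sample = [0.9, 0.2, 0.8]  # hypothetical malware features
evasive, queries_used = black_box_attack(detector_verdict, sample)
print("found evasive variant:", evasive is not None, "after", queries_used, "queries")
```

Notice how noisy this is from the defender's side: the attacker has to send many near-identical files. That burst of similar submissions is something a defender can watch for.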

How I Tested These Attacks

I set up a lab with common security tools. Then I tried these attack methods. The results were concerning.

My Testing Setup:

- Security tools: Windows Defender, open-source malware detectors

- Test files: Known malware samples from research databases

- Method: Applied each attack and checked if detection failed
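The "apply each attack and check if detection failed" step can be scripted. The sketch below shows one way to structure such a harness. It is not the harness I used, and the detector, attack, and samples in it are toy placeholders rather than real tools or malware.

```python
def evasion_rate(detector, samples, attack):
    """Fraction of initially detected samples that slip through after the attack."""
    detected = [s for s in samples if detector(s)]
    if not detected:
        return 0.0
    evaded = sum(1 for s in detected if not detector(attack(s)))
    return evaded / len(detected)

# Toy placeholders: a "sample" is a list of tokens, and the detector flags it
# when at least half of the tokens are marked "bad".
def toy_detector(sample):
    return sample.count("bad") / len(sample) >= 0.5

def pad_attack(sample):
    return sample + ["ok", "ok"]

samples = [
    ["bad", "bad"],
    ["bad", "ok"],
    ["bad"] * 6,
    ["ok"],  # never detected, so it is excluded from the rate
]
print(evasion_rate(toy_detector, samples, pad_attack))
```

Counting only samples that were detected to begin with keeps the number honest. A file the tool already missed tells you nothing about the attack.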

What I Found:

- Simple attacks worked 65% of the time

- Advanced attacks worked 85% of the time

- The malware still worked perfectly after being modified

- Commercial tools were just as vulnerable as open-source ones

Here's a simple attack in action:
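This is a toy reconstruction of the idea, under the assumption that the detector scores a file by the share of suspicious strings it contains. The string lists and the 0.3 threshold are invented for illustration. Real detectors use many more features.

```python
SUSPICIOUS = {"VirtualAllocEx", "WriteProcessMemory", "CreateRemoteThread"}

def suspicious_ratio(strings):
    """Share of the file's strings that appear on the suspicious list."""
    if not strings:
        return 0.0
    return sum(1 for s in strings if s in SUSPICIOUS) / len(strings)

def toy_detector(strings, threshold=0.3):
    return suspicious_ratio(strings) >= threshold

malware_strings = ["VirtualAllocEx", "WriteProcessMemory",
                   "CreateRemoteThread", "kernel32.dll"]

# Harmless-looking filler appended to the file. The original strings are untouched.
padding = ["Copyright notice", "License agreement", "Help", "About", "Settings"] * 2
padded = malware_strings + padding

print("original flagged:", toy_detector(malware_strings))
print("padded flagged:  ", toy_detector(padded))
```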

The AI now sees mostly "harmless" content. It might miss the dangerous part.

Real-World Examples

Case 1: Bypassing Popular Antivirus

Researchers found they could add simple text strings to malware. The AI would focus on the harmless text and ignore the dangerous code.

Case 2: Mobile App Protection Failure

The same techniques worked against mobile security apps. Small changes to malicious apps made them look safe.

Why This Matters for Your Business

You might think "We have multiple layers of security." But many of those layers now use AI. If one layer fails, others might too.

The biggest risks:

- False confidence — Thinking you're protected when you're not

- Silent breaches — Attacks that don't trigger any alarms

- Scale — Hackers can automate these attacks against thousands of targets

How to Protect Your Organization

The good news: there are defenses against these attacks.

1. Use Multiple Detection Methods

Don't rely only on AI. Combine it with:

- Human review of suspicious files

- Behavior monitoring (what the code actually does)

- Traditional signature detection
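As a rough sketch of how the automated layers can back each other up, the function below blocks a file if any one layer objects and records which layer it was. The hash list, behavior names, and threshold are hypothetical placeholders.

```python
import hashlib

# Hypothetical signature database and behavior watchlist.
KNOWN_BAD_HASHES = {hashlib.sha256(b"known-bad-sample").hexdigest()}
RISKY_BEHAVIORS = {"inject_into_process", "disable_backups"}

def signature_hit(data: bytes) -> bool:
    return hashlib.sha256(data).hexdigest() in KNOWN_BAD_HASHES

def behavior_hit(observed_behaviors) -> bool:
    return bool(RISKY_BEHAVIORS & set(observed_behaviors))

def layered_verdict(data, ml_score, observed_behaviors, ml_threshold=0.5):
    """Block if any layer objects, and report which layers did."""
    reasons = []
    if signature_hit(data):
        reasons.append("signature")
    if behavior_hit(observed_behaviors):
        reasons.append("behavior")
    if ml_score >= ml_threshold:
        reasons.append("ml")
    return ("block" if reasons else "allow"), reasons

# The ML model was fooled (low score), but the behavior layer still catches it.
print(layered_verdict(b"new-variant", 0.1, ["inject_into_process"]))
```

An adversarial tweak that fools the model does not change what the code does when it runs, so the behavior layer is the one that is hardest to evade this way.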

2. Train Your AI on Attack Examples

This is called "adversarial training." You show your AI examples of these trick attacks so it learns to recognize them.

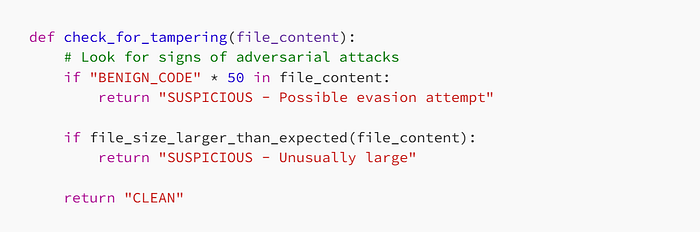
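A minimal sketch of adversarial training, assuming a tiny logistic-regression model and four invented two-feature samples. In every epoch, the current model is attacked with one-step gradient-sign perturbations, and the crafted examples are added to the training batch with their correct labels. The values for `eps`, `epochs`, and `lr` are illustrative only.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(weights, bias, x, y, eps):
    """Craft a worst-case neighbor of x for the current model."""
    p = predict(weights, bias, x)
    return [xi + eps * sign((p - y) * w) for xi, w in zip(x, weights)]

def train_epoch(weights, bias, data, lr):
    """One step of batch gradient descent on the logistic loss."""
    grad_w, grad_b = [0.0] * len(weights), 0.0
    for x, y in data:
        err = predict(weights, bias, x) - y
        grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
        grad_b += err
    n = len(data)
    return [w - lr * g / n for w, g in zip(weights, grad_w)], bias - lr * grad_b / n

def adversarial_training(data, eps=0.2, epochs=200, lr=0.5):
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        # Attack the current model, then train on clean plus crafted examples.
        crafted = [(fgsm(weights, bias, x, y, eps), y) for x, y in data]
        weights, bias = train_epoch(weights, bias, data + crafted, lr)
    return weights, bias

# Invented data: label 1.0 = malicious, 0.0 = benign.
DATA = [([0.9, 0.8], 1.0), ([0.8, 0.9], 1.0), ([0.1, 0.2], 0.0), ([0.2, 0.1], 0.0)]
weights, bias = adversarial_training(DATA)
for x, y in DATA:
    attacked = fgsm(weights, bias, x, y, eps=0.2)
    print(y, round(predict(weights, bias, x), 2), round(predict(weights, bias, attacked), 2))
```

The trade-off is that the model only learns to resist the kinds of tricks it was shown, so the set of training attacks needs refreshing as new ones appear.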

3. Check for Suspicious Patterns

Look for files that seem "too normal" or have unusual amounts of harmless content.
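One way to sketch this check: compare how suspicious the densest part of a file looks with how suspicious the file looks overall. A big gap suggests the dangerous part has been diluted with filler. The string list, window size, and `gap` threshold below are assumptions for illustration.

```python
SUSPICIOUS = {"VirtualAllocEx", "WriteProcessMemory", "CreateRemoteThread"}

def suspicious_ratio(strings):
    if not strings:
        return 0.0
    return sum(1 for s in strings if s in SUSPICIOUS) / len(strings)

def looks_diluted(strings, window=4, gap=0.4):
    """True when some window of strings is far more suspicious than the whole file."""
    if len(strings) <= window:
        return False  # too short to have been padded in any meaningful way
    whole = suspicious_ratio(strings)
    densest = max(
        suspicious_ratio(strings[i:i + window])
        for i in range(len(strings) - window + 1)
    )
    return densest - whole >= gap

padded_malware = ["VirtualAllocEx", "WriteProcessMemory", "CreateRemoteThread",
                  "kernel32.dll"] + ["Help", "About", "Settings", "License", "Credits"] * 2
ordinary_app = ["Help", "About", "Settings", "License", "Credits", "Open", "Save"]

print(looks_diluted(padded_malware))
print(looks_diluted(ordinary_app))
```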

4. Monitor Detection Confidence

If your AI isn't very confident about a decision, then have a human check it.

Simple Defense Code Example:
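A minimal sketch of confidence-based triage, assuming your detector outputs a malware probability between 0 and 1. The function name `triage` and the `low` and `high` cutoffs are hypothetical. You would tune them to your own tool and review capacity.

```python
def triage(malware_probability, low=0.2, high=0.8):
    """Act automatically only when the model is confident; otherwise ask a human."""
    if not 0.0 <= malware_probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if malware_probability >= high:
        return "block"
    if malware_probability <= low:
        return "allow"
    return "human_review"

for score in (0.95, 0.55, 0.05):
    print(score, "->", triage(score))
```

Adversarial changes often push a score toward the uncertain middle rather than all the way to "safe," so this middle band is where many evasion attempts will land.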

What Security Teams Should Do Now

- Audit your AI systems — Ask vendors about adversarial attack protection

- Add manual review for low-confidence detections

- Train your team about these new attack methods

- Test your defenses with known evasion techniques

The Future of AI Security

This is just the beginning. As AI security improves, so will attack methods. We're in an arms race between protection and evasion.

The companies that will stay secure are those that:

- Understand these new threats

- Use multiple security layers

- Keep their systems updated

- Train their staff continuously

Things to Remember:

- AI security can be tricked with simple code changes

- These attacks don't stop the malware from working

- Multiple defense layers are essential

- Human oversight is still crucial

- Regular testing is necessary

AI security is powerful, but it's not perfect and it can't replace human judgment. By understanding its weaknesses, we can build better protection.

The goal isn't to abandon AI security. It's to use it wisely while knowing its limitations.

Next Steps for Your Team

- Discuss these risks at your next security meeting

- Test your current defenses with simple evasion techniques

- Review your incident response plan for AI failures

- Stay up to date on new security research

The best security uses AI as a tool, not as a replacement for human judgment.

Stay safe out there.