How I Found My First Bug: Step‑by‑Step Moves Every New Bug Hunter Must Know | Guarantee Result

Everyone posts "found $5k with this trick" — here's what they don't show: it wasn't a trick. It was recon. Behind every flashy screenshot are hours of reading JS, hunting certs, and parsing forgotten backups. The grind. The tiny, annoying moves that stack into a payout. Map. Connect. Test. Repeat. Do this every day and you stop seeing lucky posts — you create them.

i know u didnt understood a hell what i said so let me explain it again — this article is a f**king toolbox. Read it once and you'll learn the move. Read it twice and you'll start finding the paths yourself. Read it ten times and you'll check it every morning before you start hunting. That's the kind of muscle this builds.

Join Discord if u can read this:https://discord.gg/rJexj8W7yd

quick truth (read this twice)

Recon is 80% of the work. Not "run a scanner and hope." Collect everything. Connect dots. Think why an asset exists. That's where the vulnerability hides.

If you skim this and go back to automated scans, you'll keep finding the same low-impact stuff. Do the work.

Think of a target as a building. Recon is walking the perimeter, checking windows, reading the delivery labels, and noticing which doors are unlocked. You don't break in yet — you're mapping where someone might leave a window open.

Three core principles:

- Collect — gather every possible clue (domains, JS, certs, repos).

- Connect — join clues to form a likely path (this file + that endpoint + leaked token = path).

- Test carefully — verify that path using minimal queries and safe proofs.

1) build a single source of truth: all-assets.txt

You need one file. One map. Everything flows from here.

I know u didnt understood a hell what i said so let me explain it again: this file saves you time and credibility. When triage opens your report and sees the exact hostname you used, they verify fast. Fast verification = more attention.

subfinder -d target.com -o subfinder.txt

amass enum -d target.com -o amass.txt

cat subfinder.txt amass.txt | sort -u > all-subs.txt

httpx -l all-subs.txt -silent -status-code -o live-subs.txt2) harvest URLs & JS from clients (the server will tell you where to hit)

Clients leak the server map. JS, mobile bundles, archived pages contain API endpoints and param names.

Run:

cat live-subs.txt | waybackurls | tee wayback-urls.txt

gau --subs all-subs.txt > gau-urls.txt

cat wayback-urls.txt gau-urls.txt | sort -u > all-urls.txtThen grab JS and scan for strings:

grep -Eo 'https?://[^ ]+\.js' all-urls.txt | sort -u > js-urls.txt

xargs -a js-urls.txt -n1 curl -s | grep -E 'auth|api|token|internal' | sort -u > interesting-js-snips.txtIf you see /internal/, dev-api, api/v2/internal — stop and focus there.

i know u might think "that's noisy" — yes. But noise hides patterns. Humans see patterns machines miss.

3) certificate transparency (crt.sh) — secret subdomains live here

Marketing forgets certs. Devs forget hostnames. crt.sh shows certs that reveal hidden hostnames like staging.target.com or internal.target.com.

Quick fetch:

curl -s "https://crt.sh/?q=%25.target.com&output=json" | jq -r '.[].name_value' | sed 's/\*\.//g' | sort -u >> all-subs.txt

sort -u all-subs.txt -o all-subs.txt

httpx -l all-subs.txt -silent -o live-subs.txtAdd results back into your pipeline. Don't skip this — it finds targets marketers never wanted you to see.

4) wayback + sitemaps — the deleted pages trick

archived snapshots and sitemaps may show old admin pages or debug endpoints. people delete links but not the actual pages or scripts.

combine wayback outputs with your live-subs.txt and test the archived endpoints. Often the endpoints still function or give useful error messages.

it's like finding an old blueprint of the building that shows a backdoor no one uses now — but the backdoor might still be there.

5) Github & repo leaks — simple wins explained

public repos sometimes contain keys, webhook URLs, or configs. devs accidentally publish secrets or leave tokens in .env.

- Search GitHub:

org:target target.com - Run

gitleaksandtruffleHogon public code related to target.

one leaked .env with a DB password or API token can shortcut a lot of guessing

6) cloud storage & backups — why they matter

S3 / Azure / GCS containers sometimes have public ACLs. backups and logs contain secrets (API keys, DB dumps) and are often forgotten.

- Check references in JS and repos for

s3.amazonaws.comorblob.core.windows.netdomains. - Use

httpxto test bucket paths found.

If a backup contains password or DB_HOST, you found a direct path to impact.

7) Shodan / Censys — seeing exposed services

search engines for internet-connected devices and services. dev/test machines are often left exposed with default settings.

- Find IP ranges via

whoisor passive DNS. - Query Shodan/Censys for those ranges.

- Filter for

staging,dev,test.

8) token hunting — patterns and restraint

searching for leaked tokens and API keys. tokens grant access; they're extremely valuable and dangerous if misused.

- AWS keys:

AKIA[0-9A-Z]{16} - Google keys:

AIza[0-9A-Za-z-_]{35}

9) third-party mapping — why vendors matter

enumerate all external domains and services the target uses (analytics, payroll, HR, partners).

the vendor often has weaker controls and can be the pivot point.extract external domains from live site HTML, JS, sitemaps, and repos.

Test them for weak configs and keys. instead of breaking into a fortress, sometimes you walk through the vendor's backdoor.

10) automation pipeline — what to automate and why

Goal: automate grunt work so your brain can focus on pattern recognition.

Baseline pipeline (and why each step exists):

amass + subfinder + crt.sh→all-subs.txt(collect assets)httpx→live-subs.txt(see which assets respond)waybackurls + gau→all-urls.txt(historical and known URLs)- JS parsing →

endpoints.txt(client reveals server) gitleaks→secrets.txt(find accidental leaks)nuclei→ quick checks (low-effort vuln surface)- Manual triage → human verify and PoC

deep example — step-by-step with why (one path from clue → bug)

- Clue:

internal.api.target.comappears in JS. (Why this matters: client references an internal API.) - Action: check with

httpx— host responds with401 Unauthorizedand headerX-Env: staging. (Why: it indicates an auth flow and staging environment.) - Next: search repos for

internal.api.target.com— find adocker-compose.ymlwithENV=devand a link to a test S3 bucket. (Why: repo gives config hints.) - Next: test S3 bucket — it lists

backup.sql. (Why: backup may contain tokens.) - Result: backup shows an API key for a third-party vendor used by staging. Use minimal validation to show the key is valid (e.g., token metadata endpoint). (Why: proof without exfil.)

- Impact: staging has credentials tied to an internal API — triage can fix ACLs or revoke token.

small clue in JS → repo leak → cloud leak → validated path to impact. Each step is a logical link, not random guessing.

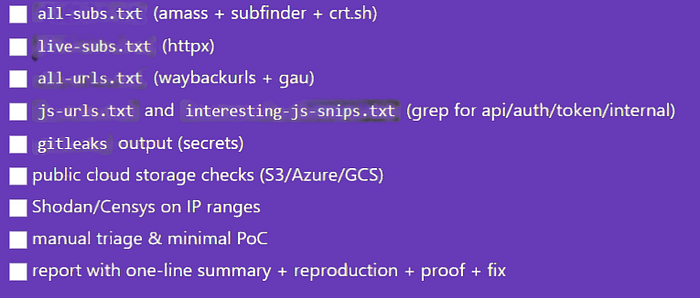

ready-to-use checklist (print this)

Do this pipeline every day for a week on cheap targets or CTFs. You're not memorizing commands — you're training your pattern recognition. After two weeks you'll start seeing the same naming conventions and deployment mistakes. That's when you stop hunting randomly and start following repeatable paths to real bugs.

Stop reading this and open a terminal. Run the all-assets.txt pipeline on a target you're allowed to test. Find one weird host. Ask: why is it there? That question is your ticket.

till then

keep hunting