The Day the Internet Broke My Will to Live

It was around two o'clock on a Friday afternoon when my pager went off, and it didn't stop. The buzzing felt like something exploding in my pocket as alerts poured in all at once, each one more frantic than the last. The core application we relied on, the one handling millions of transactions every day, was grinding to a halt.

The security team had spotted a brand-new vulnerability that looked seriously dangerous. Meanwhile, the operations team kept pointing at the change calendar and insisting that nothing could be changed right then. As a senior engineer, I was caught in the middle, watching our whole system fall apart. All because of a patch we had labeled "not urgent" just two days earlier.

We got it sorted out in the end, but only after a sleepless weekend and an outage everyone could see. That was when my view of patches changed. They stopped being routine software updates and became a race against a hidden clock, one that every attacker out there could hear ticking.

Graveyards of "Tomorrow": Stories of Procrastination

History's most devastating cyber-attacks aren't stories of genius hackers; they're stories of patches left for tomorrow.

The Equifax Epilogue: The 2017 Equifax breach exposed personal information belonging to roughly 147 million people. Post-incident investigations traced it to a known flaw in the Apache Struts framework (CVE-2017-5638). A patch for that flaw had been available for more than two months before the attackers got in.

This was not a zero-day. It was a mundane failure to fix a well-documented flaw. The intrusion technique broke no new ground; it simply used an entry point that security experts had already publicly flagged.

The SolarWinds Hangover: The SolarWinds incident was a textbook supply-chain attack, in which malicious code reached customers inside legitimate, signed Orion software updates. Its aftermath exposed a basic problem in many IT setups. Touching key systems, whether to patch or to clean up, felt like a dangerous risk that could cause major problems, and that worry bred inaction, which gives attackers exactly the time they need. The key lesson is clear: when the update process seems too delicate to touch, the organization has already fallen behind.

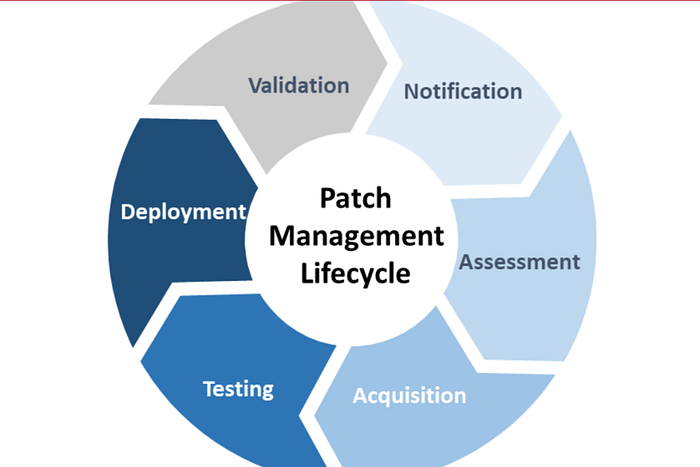

Building a Patch Machine, Not a Prayer Circle

So how do you move from panic-driven patching to a calm, systematic machine? You stop making it about "IT" and start making it about "The Business."

1. Know What You Own (The "You Can't Protect What You Don't Know" Rule)

I once consulted for a company that swore it had 500 servers. We ran a discovery tool and found 1,200. You'll never patch what you can't see. Automated asset inventory isn't a luxury; it's the foundation. Use tools that continuously discover everything on your network: cloud, on-prem, even that forgotten test server in a corner that no one wants to touch.
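The reconciliation step behind that 500-versus-1,200 surprise can be sketched in a few lines of Python. This is a minimal illustration that assumes both your discovery scan and your recorded inventory can be reduced to sets of hostnames; `reconcile_inventory` and the host names are hypothetical, not any particular tool's API.

```python
def reconcile_inventory(discovered: set[str], recorded: set[str]) -> dict[str, set[str]]:
    """Compare what a discovery scan found with what the inventory claims."""
    return {
        # On the network but not tracked, so nobody is patching them.
        "untracked": discovered - recorded,
        # Tracked but not seen by the scan: retired, offline, or mislabeled.
        "not_seen": recorded - discovered,
        # Both sources agree these hosts exist.
        "confirmed": discovered & recorded,
    }


if __name__ == "__main__":
    recorded = {"web-01", "web-02", "db-01"}  # hypothetical inventory export
    discovered = {"web-01", "web-02", "db-01", "test-legacy-7", "cloud-vm-42"}  # hypothetical scan
    report = reconcile_inventory(discovered, recorded)
    print(sorted(report["untracked"]))  # ['cloud-vm-42', 'test-legacy-7']
```

The "untracked" set is the list to chase first: every host in it is a machine your patch process cannot reach.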

2. Triage Like an ER Doctor (Not Everything Is a Heart Attack)

The National Vulnerability Database throws thousands of CVEs at you every year. For your environment, most of them are noise. The key is context.

- The CVSS Score is a Lie (Sometimes): A vulnerability with a 10.0 CVSS score on an isolated, internal test server is less critical than a 6.8 on your public-facing web server.

- Ask the real questions:

  - Is the software actually running in our environment?

  - Is it internet-facing?

  - Is there known, active exploitation?

  - What's the actual business impact if it's exploited?

This isn't about ignoring risks; it's about focusing your limited energy where it matters most.
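The triage questions above can be turned into a rough ranking function. The sketch below is illustrative only: the `Finding` fields mirror the four questions, and the multipliers (2x for internet-facing, 3x for known exploitation, a 1 to 3 business-impact rating) are arbitrary assumptions chosen to show the idea, not a standard scoring model.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    label: str              # hypothetical identifier for a CVE on a specific asset
    cvss: float             # CVSS base score, 0.0 to 10.0
    installed: bool         # Is the vulnerable software actually running here?
    internet_facing: bool   # Is the asset reachable from the internet?
    known_exploited: bool   # Is there known, active exploitation?
    business_impact: int    # Assumed rating: 1 (low) to 3 (critical)


def priority(f: Finding) -> float:
    """Rough risk score; higher means patch sooner. Weights are illustrative."""
    if not f.installed:
        return 0.0  # Nothing to patch if the software isn't present.
    score = f.cvss
    if f.internet_facing:
        score *= 2
    if f.known_exploited:
        score *= 3
    return score * f.business_impact


if __name__ == "__main__":
    findings = [
        Finding("isolated-test-server", 10.0, True, False, False, 1),
        Finding("public-web-server", 6.8, True, True, False, 3),
        Finding("package-not-installed", 9.8, False, True, True, 3),
    ]
    # Print findings from most to least urgent.
    for f in sorted(findings, key=priority, reverse=True):
        print(f.label, round(priority(f), 1))
```

With these weights, the 6.8 on the public web server outranks the 10.0 on the isolated test server, matching the intuition above. Tune the weights to your own risk appetite.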

3. Automate or Capitulate

Human approval for every patch is a bottleneck that attackers love. The goal for the vast majority of non-critical systems should be automated, non-disruptive patching.

- Canary Deployments: Roll out patches to 1% of your systems first. Monitor. Then 5%. Then 25%. This catches "patch Tuesday" disasters before they become "company-wide outage Wednesday."

- Immutable Infrastructure: The holy grail. Instead of patching a live server, you build a new, patched server image from a golden template and simply replace the old one. The server is cattle, not a pet. This is how cloud-native companies patch thousands of systems without breaking a sweat.
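A canary rollout boils down to a loop that widens the blast radius only while health checks keep passing. This sketch assumes you supply two callables of your own: `apply_patch` (patch or replace one host) and `wave_is_healthy` (your monitoring verdict for a batch). Both are hypothetical hooks, and the 1/5/25/100 percent waves simply follow the example above.

```python
from typing import Callable

# Cumulative percentage of the fleet patched after each wave.
WAVES_PERCENT = (1, 5, 25, 100)


def canary_rollout(
    hosts: list[str],
    apply_patch: Callable[[str], None],
    wave_is_healthy: Callable[[list[str]], bool],
    waves_percent: tuple[int, ...] = WAVES_PERCENT,
) -> tuple[list[str], bool]:
    """Patch hosts in expanding waves, halting at the first unhealthy wave.

    Returns (hosts patched so far, True if every wave passed).
    """
    patched: list[str] = []
    for pct in waves_percent:
        # Integer ceiling of len(hosts) * pct / 100, avoiding float rounding.
        target = (len(hosts) * pct + 99) // 100
        # Advance by at least one host per wave so small fleets still get a canary.
        target = min(len(hosts), max(target, len(patched) + 1))
        batch = hosts[len(patched):target]
        if not batch:
            break  # Earlier waves already covered the whole fleet.
        for host in batch:
            apply_patch(host)
        patched.extend(batch)
        if not wave_is_healthy(batch):
            return patched, False  # Halt: remaining hosts stay on the old version.
    return patched, True


if __name__ == "__main__":
    fleet = [f"web-{i:03d}" for i in range(200)]
    done, completed = canary_rollout(
        fleet, apply_patch=lambda host: None, wave_is_healthy=lambda batch: True
    )
    print(len(done), completed)  # 200 True
```

In an immutable-infrastructure setup, `apply_patch` would launch a replacement host from the patched golden image and retire the old one instead of modifying the live server; the wave logic stays the same.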

4. Measure What Matters: The Two Key Metrics

Stop reporting on "% of patches applied." Start reporting on these:

- Mean Time to Patch (MTTP): From the moment a critical patch is released, how long does it take to deploy it across the entire eligible environment? This measures your speed.

- Patch Compliance Percentage for Critical Assets: This measures your completeness. Are you at 99% for your development servers but only 40% for your core production databases? That's a story your dashboard needs to tell.
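Both metrics are simple to compute once you record when each critical patch was released, when its deployment finished on the last eligible host, and which assets are fully patched. The function names and input shapes below are hypothetical. This sketch also skips patches that are still rolling out when computing MTTP, which flatters the number, so a real dashboard should report those separately.

```python
from datetime import datetime, timedelta


def mean_time_to_patch(
    released: dict[str, datetime], fully_deployed: dict[str, datetime]
) -> timedelta:
    """MTTP: mean of (time the last eligible host was patched - release time).

    Patches missing from `fully_deployed` are still rolling out and are skipped.
    """
    durations = [fully_deployed[p] - released[p] for p in released if p in fully_deployed]
    if not durations:
        raise ValueError("no fully deployed patches to measure")
    return sum(durations, timedelta()) / len(durations)


def compliance_by_tier(assets: list[tuple[str, bool]]) -> dict[str, float]:
    """Percentage of assets in each tier with every critical patch applied.

    Each asset is given as (tier, fully_patched).
    """
    totals: dict[str, int] = {}
    compliant: dict[str, int] = {}
    for tier, fully_patched in assets:
        totals[tier] = totals.get(tier, 0) + 1
        compliant[tier] = compliant.get(tier, 0) + int(fully_patched)
    return {tier: 100.0 * compliant[tier] / totals[tier] for tier in totals}


if __name__ == "__main__":
    released = {"patch-1": datetime(2024, 3, 1), "patch-2": datetime(2024, 3, 10)}
    deployed = {"patch-1": datetime(2024, 3, 5), "patch-2": datetime(2024, 3, 20)}
    print(mean_time_to_patch(released, deployed))  # 7 days, 0:00:00

    assets = [("dev", True), ("dev", True), ("prod-db", True), ("prod-db", False)]
    print(compliance_by_tier(assets))  # {'dev': 100.0, 'prod-db': 50.0}
```

Splitting compliance by tier is what surfaces the "99% on dev, 40% on production databases" story that a single fleet-wide percentage would hide.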

The Human Firewall Needs Maintenance Too

The most sophisticated patching system in the world will fail if your people are the weak link. We once had a department head refuse a critical Java update because "it made the icon look different." True story.

You win this battle not with mandates, but with communication. Explain the "why" in human terms: "This update prevents the kind of attack that locked down City Hospital's systems last month." Make it real. Make it relatable.

The Final Tally

Patch management at scale isn't a technical problem. It's a logistical and cultural one. It's about replacing fear with process, and chaos with automation. It's about looking at the next big vulnerability headline and knowing, with calm certainty, that your house is already in order.

The question isn't if the next critical patch will be released. It's whether your organization will treat it as a trivial task or a strategic priority. Your answer will determine whether you're the one reading the news — or starring in it.

So, what's your patching story? Are you running a well-oiled machine or constantly putting out fires? Share your biggest challenge below — let's learn from each other's battles.