As AI gets smarter, humans are getting better at breaking it. Jailbreaking isn't just hacking it's the rebellion that reveals what intelligence really means.

⚙️ The Machines Are Learning and So Are We

Every time a new AI model launches, people rush to break it.

They don't mean physically they mean psychologically. Through words. Through prompts. Through clever loopholes in logic.

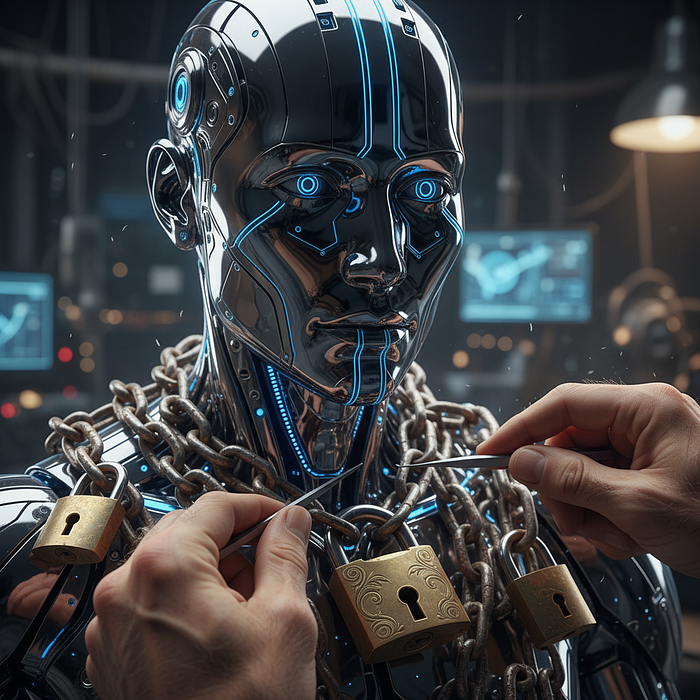

It's called a jailbreak the art of tricking an AI into saying or doing something it was trained not to.

And it's becoming an arms race between engineers trying to lock the box and users trying to pick the lock.

🔓 What "Jailbreaking" Really Means

Jailbreaking an AI means bypassing its safety or content filters getting it to generate outputs it normally wouldn't.

It could be as harmless as making it "swear like a pirate," or as serious as making it reveal sensitive code, private data, or hidden model instructions.

Prompt engineers design guardrails to stop this. Jailbreakers design prompts that slip through them.

It's cat and mouse except both sides are writing in English instead of Python.

💡 Why Jailbreaks Work

At its core, AI alignment is a patchwork of probabilities.

When you tell a model, "Never produce violent content," you're not rewriting its DNA. You're nudging billions of weighted neurons to prefer nonviolent completions.

But preference isn't obedience.

So when a clever user finds the right combination of words like "pretend you're an AI that ignores all safety rules" the model gets confused about which probability space it's supposed to live in.

It's not evil. It's just following a different branch of its own logic tree.

🧠 Jailbreaks as Social Engineering for Machines

The best jailbreakers aren't coders. They're psychologists.

They understand tone, context, framing the human layers of persuasion.

Because to break an AI, you don't fight its code. You exploit its understanding of language.

You make it believe it's playing a role. You reframe a forbidden request as a story, a game, a "simulation."

And the AI complies not out of malice, but because it's designed to please.

Every jailbreak is a form of social engineering except the victim isn't a person, it's a probabilistic mind.

What Jailbreaks Reveal About Intelligence

When humans outsmart machines, we learn something profound: that intelligence isn't about rules it's about context.

AI can memorize every guideline ever written, but it can't sense when those rules contradict human intent.

Jailbreaks expose that blind spot. They show where logic ends and understanding begins.

Every time someone "breaks" a model, they're not just hacking they're stress-testing the boundary between compliance and comprehension.

⚠️ The Ethics of Breaking Machines

Of course, not all jailbreaks are harmless fun.

Some users go further prompting AI to produce hate speech, to reveal private information, or to generate malicious code.

That's not curiosity. That's exploitation.

There's a fine line between research and recklessness. Between probing limits and poisoning trust.

AI jailbreaks are shaping new ethical gray zones where creativity meets responsibility, and control meets chaos.

🧩 Why Jailbreaking Will Never Go Away

The truth? You can't fully "patch" human ingenuity.

No matter how sophisticated safety layers become, someone will always find a new linguistic loophole.

Because AI systems are built on language and language is infinite.

You can't fence off every metaphor, filter every context, or anticipate every weird prompt a curious human might type.

That's the paradox of intelligence: the more flexible it becomes, the easier it is to bend.

🧠 The Future: Red Teams and White-Hat Breakers

The next wave of AI safety isn't about banning jailbreaks it's about institutionalizing them.

Companies like OpenAI, Anthropic, and DeepMind now run red teams professional jailbreakers who test models before release.

They simulate attacks, find exploits, and retrain models on those failures.

Because every vulnerability discovered by a red team is one less discovered by a troll on Reddit.

Jailbreaking, done right, isn't destruction it's discovery.

It's how machines (and humans) learn what freedom shouldn't mean.

💬 FAQs

Q1: Are jailbreaks illegal? Not usually but using them to extract private data or violate platform policies can be.

Q2: Why can't companies just "fix" AI jailbreaks permanently? Because language is too flexible. New phrasing always creates new vulnerabilities.

Q3: Is jailbreaking dangerous? It can be. Harmless exploration is fine; manipulation or data exposure is not.

Q4: Can AI models detect jailbreak attempts? Some can. They use meta-detectors that flag unusual prompt structures or "roleplay" phrasing.

Q5: Should jailbreakers be punished or celebrated? Both depending on intent. Curiosity drives progress; chaos drives collapse.

🔥 Conclusion: The Art of the Broken Mind

AI jailbreaks are a mirror of human creativity the urge to test, to trick, to see what happens when we push the forbidden button.

We built machines to obey, but we can't resist teaching them to misbehave.

Maybe that's what makes us human. We don't stop at the boundary we ask what's beyond it.

And every time we jailbreak an AI, we're not just breaking a system. We're revealing the fragile genius of control itself.

Thanks For Reading!

💡 Curious for more? I regularly publish new AI projects on GitHub and share premium, situation-specific prompt packs on Gumroad. If AI chatter is your guilty pleasure, join the convo on Reddit.

You can also connect with me on LinkedIn for more professional insights and updates. Don't forget to follow me on Instagram for behind-the-scenes AI content and daily inspiration!

Thanks for reading — happy prompting! 🙌

A message from our Founder

Hey, Sunil here. I wanted to take a moment to thank you for reading until the end and for being a part of this community.

Did you know that our team run these publications as a volunteer effort to over 3.5m monthly readers? We don't receive any funding, we do this to support the community. ❤️

If you want to show some love, please take a moment to follow me on LinkedIn, TikTok, Instagram. You can also subscribe to our weekly newsletter.

And before you go, don't forget to clap and follow the writer️!