As bug bounty hunters, we're often trained to look for the obvious: broken access controls, missing authentication, predictable IDs. But sometimes, the real vulnerabilities live in the subtle details — edge cases, timing quirks, and logic that only breaks when you approach it from just the right angle.

While testing one application, I noticed something strange. A particular endpoint accepted an ID as a parameter. The requests always returned a 200 OK, but depending on the ID, the page content would simply say "Access Denied." No errors, no redirects, just a quiet rejection buried in a successful HTTP response.

At first glance, it looked like proper access control was in place. But something about the consistency of those 200s caught my attention.

So I decided to dig deeper.

1. Dead End?

While exploring the application, I stumbled across a fairly standard-looking endpoint:

    /Terminal.aspx?id=10892

The page loaded successfully, returning a 200 OK along with some company-specific data. That got my attention. I tried changing the id parameter to nearby values like 10891, 10893, and so on. It was the usual manual ID iteration to test for IDORs or broken access control.

But every request came back the same way. The response still returned 200 OK, but the page content displayed a simple message: "Access Denied." There were no error codes, no stack traces, and no signs of unhandled logic. Just a clean denial embedded in a normal response.
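Because the status code never changes here, anything that automates this check has to read the body to tell a denial from real content. Here is a minimal Python sketch of that manual iteration. The host `target.example`, the helper names `neighbor_urls` and `classify`, and the assumption that the literal string "Access Denied" is a reliable marker are mine for illustration, not details of the real application. Fetching is deliberately left out, so the URLs can go into whatever HTTP client you prefer.

```python
from urllib.parse import urlencode


def neighbor_urls(base_url, known_id, radius=2):
    """Build URLs for IDs within `radius` of known_id, skipping known_id itself."""
    ids = [i for i in range(known_id - radius, known_id + radius + 1)
           if i != known_id]
    return ["%s?%s" % (base_url, urlencode({"id": i})) for i in ids]


def classify(status, body, denial_marker="Access Denied"):
    """Label a response by its body, since the status code alone says nothing."""
    if status != 200:
        return "error"
    if denial_marker in body:
        return "soft-denied"  # 200 OK on the wire, rejection in the page
    return "content"


# Hypothetical host, used only to show the shape of the output.
urls = neighbor_urls("https://target.example/Terminal.aspx", 10892, radius=1)
```

With `radius=1` this yields the URLs for 10891 and 10893, and `classify(200, "<p>Access Denied</p>")` comes back as `"soft-denied"` even though the request technically succeeded.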

At that point, it looked like the application had decent access control in place. Or at least, it wasn't exposing anything interesting.

So I saved this endpoint with the interesting parameter to my notes and moved on.

2. Access Denied Everywhere

After saving the endpoint for later, I eventually circled back to it during a more thorough recon phase. This time, I fired up Burp Suite Intruder to automate some of the testing. Rather than launching a full, high-volume scan, I started cautiously. My goal was to avoid flooding the web server with heavy HTTP traffic.

I configured a simple payload list with a handful of nearby IDs and let the scan run slowly. Each request came back with a 200 OK response, which initially looked promising. But as I reviewed the responses, they all said the same thing — "Access Denied."

At first, I assumed this was just proper access control doing its job. Either that, or I was hitting IDs that didn't exist. There was also the possibility that the application was returning a neutral error message for both unauthorized and invalid IDs, which would be a smart move from a security perspective.

Still, something about the consistency of those 200 responses stuck in my mind. No variation in status codes, no change in response times, and no hints about whether the IDs were valid but restricted, or simply nonexistent.

The endpoint still wasn't giving up anything useful, but I wasn't ready to cross it off the list just yet.

3. One Request to Rule Them All (After 49 Failed Ones)

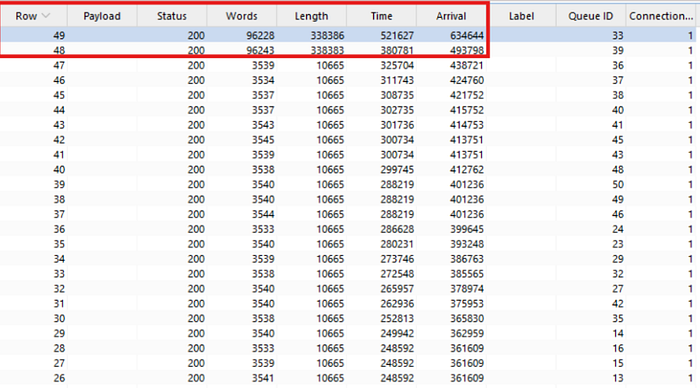

Still curious about the /Terminal.aspx endpoint, I decided to run a broader Intruder scan with a larger set of IDs. This time, I added a response length filter to help surface anything unusual among the sea of "Access Denied" messages.

That's when something strange happened.

Among the hundreds of responses, a few stood out. Their response lengths were significantly larger than the rest. It was just enough to make me stop and take a closer look. These responses looked like they contained actual data, not just rejection messages.
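The length filter boils down to a simple idea: treat the most common response length as the "Access Denied" baseline and flag anything that sits far away from it. A rough Python sketch of that idea follows. The function name, the 50-byte tolerance, and the sample lengths are invented for illustration and are not values from the actual scan.

```python
from collections import Counter


def length_outliers(lengths_by_id, tolerance=50):
    """Return {id: length} for responses far from the most common length.

    The most common length is assumed to be the denial page; `tolerance`
    absorbs small differences such as the ID being echoed in the body.
    """
    if not lengths_by_id:
        return {}
    baseline = Counter(lengths_by_id.values()).most_common(1)[0][0]
    return {i: n for i, n in lengths_by_id.items()
            if abs(n - baseline) > tolerance}


# Made-up lengths: three denial-sized responses and one much larger one.
sample = {10891: 1520, 10892: 1520, 10893: 9874, 10894: 1522}
suspicious = length_outliers(sample)
```

On this sample only ID 10893 is flagged. The 2-byte difference on 10894 stays under the tolerance and is ignored.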

Excited, I tried sending the exact same requests manually after the scan completed. But to my surprise, I couldn't reproduce the result. Each request now returned the standard "Access Denied" message again, with the same uniform response length as before.

That inconsistency was the moment I knew something weird was going on.

I started to wonder if timing might be a factor. Maybe the server wasn't always applying access control checks consistently under load. To test that theory, I moved over to Turbo Intruder, a powerful Burp Suite extension designed for high-speed, concurrent requests.

I wrote a short custom script that queued 50 requests for the same ID and released them all at once:

    def queueRequests(target, wordlists):

        # if the target supports HTTP/2, use engine=Engine.BURP2 to trigger the single-packet attack
        # if they only support HTTP/1, use Engine.THREADED or Engine.BURP instead
        # for more information, check out https://portswigger.net/research/smashing-the-state-machine
        engine = RequestEngine(endpoint=target.endpoint,
                               concurrentConnections=1,
                               engine=Engine.BURP2
                               )

        # the 'gate' argument withholds part of each request until openGate is invoked
        # if you see a negative timestamp, the server responded before the request was complete
        for i in range(50):
            engine.queue(target.req, gate='race1')

        # once every 'race1' tagged request has been queued
        # invoke engine.openGate() to send them in sync
        engine.openGate('race1')


    def handleResponse(req, interesting):
        table.add(req)

I fired up the Turbo Intruder scan and that's when the bug revealed itself.

Out of those 50 requests, most were denied. But one or two slipped through with full access to the restricted content. The access control logic had failed under the pressure. It became clear that this was a race condition, where the application broke down when handling multiple requests simultaneously.

In effect, sending enough traffic in a short burst caused the system to skip or misapply authorization checks — allowing unauthorized access to sensitive data that would normally be blocked.
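I never saw the server-side code, so I can't say what actually went wrong inside the application. To make the general failure mode concrete, here is a purely hypothetical toy model in Python 3: the authorization decision is written to a flag shared by all in-flight requests, and the rendering step later reads and resets that flag. Every name in it (`SharedState`, `handle`, `run`, `simulate`) is invented for illustration. The generator `yield` marks the gap between check and use, so the interleaving can be replayed deterministically instead of relying on real threads.

```python
class SharedState:
    """Stands in for state shared by all in-flight requests (hypothetical)."""

    def __init__(self):
        self.deny = False


def handle(state, terminal_id, owned_ids):
    """One request, written as a generator so it can be paused mid-way."""
    # Step 1 (check): record the decision in the shared flag.
    if terminal_id not in owned_ids:
        state.deny = True
    yield  # another request may run between check and use
    # Step 2 (use): consume the shared flag, then render.
    if state.deny:
        state.deny = False
        return "Access Denied"
    return "terminal data for id %d" % terminal_id


def run(schedule, requests):
    """Advance the named requests one step at a time, in schedule order."""
    results = {}
    for name in schedule:
        try:
            next(requests[name])
        except StopIteration as done:
            results[name] = done.value  # the generator's return value
    return results


def simulate(schedule):
    """Two identical requests for an ID the user does not own."""
    state = SharedState()
    owned = {10892}
    requests = {
        "a": handle(state, 10893, owned),
        "b": handle(state, 10893, owned),
    }
    return run(schedule, requests)


sequential = simulate(["a", "a", "b", "b"])   # one request at a time
interleaved = simulate(["a", "b", "a", "b"])  # both check before either renders
```

Run one at a time, both requests are denied. Interleaved, request `a` consumes the shared flag and request `b` renders the restricted data, which is the same "most denied, one slips through" pattern, though the real root cause may well be something different.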

As a result of this race condition, I was able to access terminal data that belonged to other companies, something that should have been strictly restricted by proper access controls.

The race was on, and the server was losing.

4. Tuning Your Race Condition Radar

Race conditions can be some of the most subtle and frustrating bugs to hunt down. They often don't show up on the first try, or even the tenth. But when they do, the impact can be serious — especially when they affect access control logic like in this case.

Here are a few takeaways and tips from my experience that might help others catch similar bugs:

- Don't stop at a single request. Just because one request returns "Access Denied" doesn't mean the logic is flawless. If you're suspicious, try hammering the same parameter under different timing conditions. The bug might only appear under concurrency or load.

- Test with multiple IDs, not just one. Even if you get no result on one ID, try running your race condition logic across a broader range. Some IDs might hit different back-end systems or trigger different code paths.

- Use Turbo Intruder for concurrency testing. Tools like Burp Intruder are great, but Turbo Intruder gives you the speed and flexibility needed for true concurrency testing. When dealing with race conditions, milliseconds matter.

- Pay attention to response length and timing. If the status code stays the same, look at response size and content. Slight variations can hint at deeper problems.

- If something feels off, it probably is. This bug was hidden behind a sea of 200 OKs and "Access Denied" messages. But subtle inconsistencies were enough to justify a deeper look. Always trust your gut.
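Several of these tips come down to one signal: the same request giving different answers. Below is a small Python sketch that checks a batch of results for exactly that. It assumes you have already exported `(id, body)` pairs from your tool of choice. The function name, the "Access Denied" marker, and the sample bodies are illustrative assumptions.

```python
from collections import defaultdict


def inconsistent_ids(observations, denial_marker="Access Denied"):
    """Return sorted IDs whose repeated requests were both denied and served.

    observations: iterable of (id, response_body) pairs, with each ID
    requested more than once.
    """
    outcomes = defaultdict(set)
    for terminal_id, body in observations:
        outcomes[terminal_id].add(denial_marker in body)
    # Seeing both True and False for one ID means identical requests disagreed.
    return sorted(i for i, seen in outcomes.items() if len(seen) > 1)


# Made-up bodies: 10893 was denied once and served once, 10894 always denied.
sample = [
    (10893, "Access Denied"),
    (10893, "Terminal 10893 details"),
    (10894, "Access Denied"),
    (10894, "Access Denied"),
]
flagged = inconsistent_ids(sample)
```

An ID that is always denied or always served is just ordinary access control at work. An ID that flips between the two for identical requests, like 10893 in this sample, is the one worth retesting under concurrency.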

Race conditions can be rare, but when they do exist, they tend to be high-impact. So the next time an endpoint looks boring or shut down, try poking it under pressure. You might just catch it slipping.

Bug hunting isn't just about tools or techniques. It's about paying attention when something doesn't feel right and chasing that instinct until the app gives up its secrets.