Peer review is an activity in which people other than the author of a deliverable examine it for defects and improvement opportunities. Peer reviews are a powerful quality aid in any technical discipline, particularly software and hardware engineering. After experiencing the benefits of software peer reviews for decades, I would never work on a team that didn't perform them.

Many organizations struggle to implement an effective peer review program. The barriers to successful reviews often are social and cultural in nature, not technical. My book Peer Reviews in Software: A Practical Guide describes the nuts and bolts of how to perform reviews. This article explores some of those soft-side aspects of having people look over each other's work. These suggestions might help your peer review program succeed where others have failed.

Scratch Each Other's Back

Asking your colleagues to point out errors in your work is a learned — not instinctive — behavior. We all take pride in the work we do and the products we create. We don't like to admit that we make mistakes, we don't realize how many we make, and we don't like other people to find them. Holding successful peer reviews requires each of us to overcome this natural resistance to outside critique of the things we create.

Busy practitioners are sometimes reluctant to spend time examining a colleague's work. They might be leery of a co-worker who asks for a review of his code. Questions arise: Does he lack confidence? Does he want you to do his thinking for him? "Anyone who needs their code reviewed shouldn't be getting paid as a software developer," a developer once told me. These resisters don't appreciate the value that multiple pairs of eyes can add.

In a healthy software culture, team members engage their peers to improve the quality of their work and increase their productivity. They understand that time spent looking at a colleague's deliverable isn't time wasted, especially when other team members reciprocate. I've learned something from every review I've participated in, as either an author or a reviewer.

Gerald Weinberg introduced the concept of "egoless programming" in 1971. Weinberg recognized that people tie much of their perceived self-worth to their work. Hence, you might interpret a fault someone finds in your work as a shortcoming in yourself as a software developer, perhaps even as a human being. To guard your ego, you don't want to know about all the errors you've made, and you might rationalize possible bugs away. Ego protection can lead to an attitude of private ownership of individual contributions within a team project, and it poses an obstacle to effective peer review.

Note that the term is "egoless programming," not "egoless programmer." Software professionals need enough ego to trust and defend their work, but not so much that they reject suggestions for improvement. Similarly, the egoless reviewer should have compassion and sensitivity for his colleagues, if only because they will switch roles one day.

Members of a quality-focused software engineering culture recognize that team success depends on helping each other do the best job possible. They prefer to have peers — not customers — find defects. They understand that reviews are not meant to identify scapegoats for quality problems. I regard having a colleague spot one of my errors as a "good catch," not a personal failing.

We Don't Need No Stinkin' Reviews!

What if your team members don't want to hold peer reviews? Lack of knowledge about reviews, cultural issues, and simple resistance to change contribute to the underuse of reviews. Many people don't understand what peer reviews are, why they're valuable, the differences between informal and formal reviews (aka inspections), or when and how to perform them. Some people think their project isn't large enough or critical enough to need reviews, yet any piece of work can benefit from an outside perspective.

Some people object that reviews take too much time and slow the project down. But peer reviews don't slow you down — defects slow you down! Reviews only slow you down if they aren't effective at finding defects, if there aren't any defects to find, or if you don't fix the ones you do find.

Authors who submit their work for scrutiny might feel as though their privacy is being invaded, as if they're being forced to air the internals of their work for all to see. The culture must emphasize the value of reviews as a collaborative, nonjudgmental tool for improved quality and productivity. We have to overcome the ingrained culture of individual achievement and embrace the value of collaboration.

People who don't want to do reviews will protest that reviews don't fit their culture, needs, or time constraints. There's the attitude that work done by certain people (usually the speaker) does not need reviewing. Some team members can't be bothered to look at a colleague's work. They protest, "I'm too busy fixing my own bugs to waste time finding someone else's. Aren't we all supposed to be doing our own work correctly?" These excuses could reflect the team's cultural attitudes toward quality, indicate resistance to change, or reveal a fear of how peer reviews might be used to harm individuals.

Strategy: Overcoming Resistance to Reviews

Lack of knowledge is easy to correct if people are willing to learn. A one-day class can give team members a common understanding of the process. Management attendance in the class sends a powerful signal to the team: "This is important enough for me to spend time on it, so it should be important to you too."

As a leader, you must understand your team's culture and how best to steer the team members toward improved software engineering practices. Is there a shared understanding of — and true commitment to — quality? What previous change initiatives have succeeded and why? Which have struggled and why? Who are the opinion leaders in the group, and what are their attitudes toward reviews?

Whenever you ask people to work in some new way, their immediate response is to ask, "What's in it for me?" This is an understandable reaction, but it's the wrong question. The right question is, "What's in it for us?" I might not get two hours of benefit from spending two hours reviewing someone else's code. However, the other developer might avoid ten hours of debugging effort later in the project, and we might ship the product sooner. I find this collective, group benefit a compelling rationale for taking quality-oriented actions.

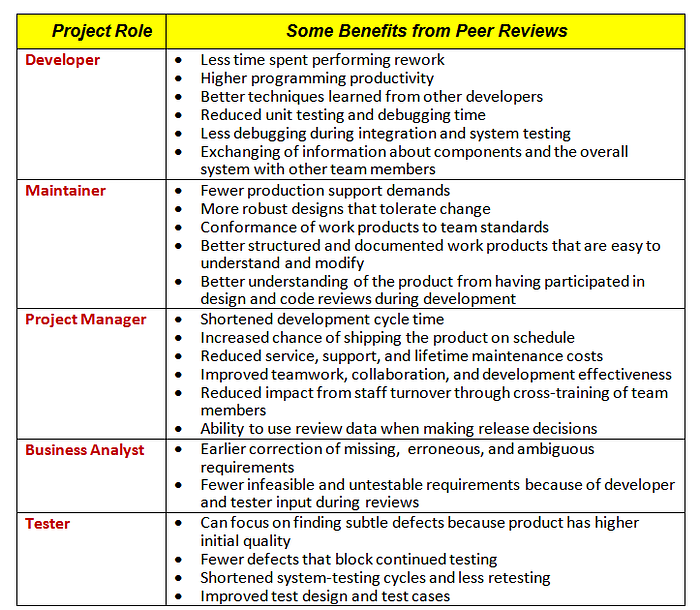

Table 1 lists some benefits various software project team members might reap from reviewing major life cycle deliverables. Customers also come out ahead, with a timely product that is more robust and reliable and better meets their needs.

Influential resisters who come to appreciate the value of reviews might persuade other team members to try them. A quality manager once encountered a developer named Judy who was opposed to what she called "time-sapping" reviews. After participating under protest, Judy quickly saw the power of the technique and became the group's leading convert. Since Judy had some influence with her peers, she helped turn developer resistance toward reviews into acceptance.

Tips for Reviewers

The dynamics between the reviewers and the work product's author are a critical aspect of peer reviews. The author must trust and respect the reviewers enough to be receptive to their comments. Conversely, reviewers must respect the author's ability and hard work.

Reviewers should thoughtfully select the words they use to raise an issue. Saying, "I didn't see where these variables were initialized" might elicit a constructive response; the more accusatory "You didn't initialize these variables" could get the author's hackles up. You might phrase your observation in the form of a question: "Does another component already provide that service?" Or identify a point of confusion: "I didn't see where this memory block is deallocated."

The word "you" rarely belongs in review input. Direct your comments to the work product, not to the author. Say "This document is missing Section 3.5 from the template" instead of "You left out Section 3.5." Reviewers and authors must work together outside the reviews, so maintain a level of professionalism and mutual respect to avoid strained relationships. Reviews shouldn't create authors who look forward to retaliating against their tormentors. Revenge isn't a good motivation for participating in peer reviews.

When a draft of my Peer Reviews in Software book was undergoing peer review (naturally!), one remote reviewer wrote, "How in the world have you managed to" miss some point he thought was important. Then he added, "Good grief, Karl." I respected this reviewer's experience and valued his insights, but he could have phrased that input more thoughtfully. (I actually had addressed his point, but he didn't spot it.) Fortunately, I wasn't offended, and it made a good story for the next chapter.

An author who walks out of a review meeting feeling personally attacked or professionally insulted won't voluntarily submit his work for review again. Bugs are the bad guys in a review, not the author or the reviewers. Create a culture of constructive criticism, where team members seek to learn from their peers and do better work the next time.

Criminalizing Bugs

My colleague Phil once told me that his manager demanded code reviews whenever a project was in trouble, with the unstated objective of finding someone to blame. Phil was the first victim on one project. He said the review team found only minor issues, but the manager then went around complaining that the project was late because Phil's code was full of bugs! Understandably, Phil soon left that toxic company. Singling out certain developers for the "punishment" of having their work reviewed is a sure way to kill a review culture.

I recently heard from a quality manager at a company that had operated a successful review program for two years. Then the development manager announced that finding more than five bugs during a review would count against the author at performance evaluation time. This made the development team members very nervous. It conveyed the dangerous impression that the point of the review was to punish people for making mistakes. It criminalizes the mistakes that we all make and pits team members against each other.

Misapplying review results like this could lead to numerous dysfunctional behaviors:

- Developers might not submit their work for review to avoid being penalized for their results.

- Team members might refuse to review a peer's work to avoid contributing to someone else's punishment.

- Reviewers might not point out defects during the review, instead reporting them to the author offline so they aren't tallied against the author. This undermines the open focus on quality that should characterize peer review.

- Review teams might endlessly debate whether something really is a defect, because defects count against the author while issues or simple questions do not.

- Authors might submit very small pieces of work for review so that no single review finds more than five bugs. This leads to inefficient and time-wasting reviews.

- The team's review culture will develop an unstated goal of finding few defects, rather than revealing as many as possible.

Managers can legitimately expect team members to submit their work for review and to review deliverables that others create. However, managers must not evaluate individuals based on the number of defects found during those reviews. Any manager who's worth his salt knows who the good performers are on a team and who's struggling, without this kind of detailed data.

Reviews without Borders

Software projects often involve teams that collaborate across multiple time zones, continents, organizational and national cultures, and native languages. Peer review challenges in those cases include both communication logistics and cultural factors.

Different cultures have different attitudes toward critiquing a colleague's work. People from certain nations or geographical regions tend to avoid confrontational or critical discussions. A colleague once tried to get a peer review program going at her large company in Minneapolis, Minnesota. A bit frustrated, she told me, "People came to the review meetings, but they're all so 'Minnesota nice' that nobody wanted to say anything negative about someone else's work. So no one pointed out any bugs they had spotted." Being considerate of others' feelings is great, but problems do need to be acknowledged and addressed.

If you face a multicultural challenge, learn about ways to get members of different cultures to collaborate and adjust your expectations about peer reviews. Discuss these sensitive issues with review participants so everyone understands how their differences will affect the review process. If the participants are geographically separated, try to hold an initial face-to-face training session before starting reviews. There you can address the cultural and communication factors and help the team members learn how to function when they're apart.

Management Commitment

Management's attitudes and behaviors powerfully affect how well reviews will work in an organization. Management commitment goes far beyond providing verbal support or giving team members permission to hold reviews. You don't need their permission: you're a professional, trusted to do your work the best way you know how.

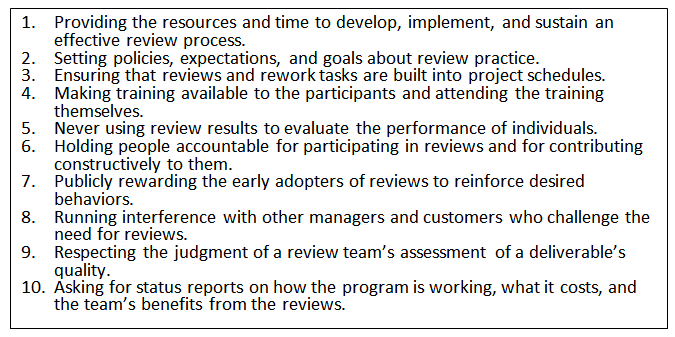

Figure 1 lists several clear signs of management commitment to peer reviews. If too many of these indicators are missing in your organization, your review program will likely struggle.

Review Your Way to Success

If you're serious about software quality, you'll accept that you make mistakes, ask your colleagues to help you find them, and willingly review their work products. You'll set aside your ego so you can benefit from the experience and perspective of your associates. Once you've internalized the benefits of peer reviews, you won't feel comfortable unless someone else examines any significant deliverable you create.

I think that's a good place to reach.

This article is adapted from Peer Reviews in Software by Karl Wiegers. Karl is the Principal Consultant at Process Impact. His latest book is Software Requirements Essentials (with Candase Hokanson). He's also the author of Software Requirements (with Joy Beatty), The Thoughtless Design of Everyday Things, Successful Business Analysis Consulting, and numerous other books.