THE ONE-MINUTE GEOGRAPHER

Yes, I often use LLMs (large language models) to do research for my One-Minute Geographer posts and for other posts. Now that I've said that, will Medium World kick me out? No, I never use LLMs to do any writing.

If Medium ever accused me of using LLMs to write posts, I think I would just hang it up right there and move on to other things. I would ask myself, "Is my writing so insipid that someone confuses it with the prose churned out by LLMs?"

Some errors are insidious because they are subtle, and you are lucky if you catch them.

I'm writing this article to caution readers about the number and types of errors various search programs produce in response to scientific research questions. It shows recent examples of errors, and types of errors, caused by LLMs so that you too can be wary when you ask questions on the web. You may think, "I don't use LLMs." Well, you do as soon as you ask a question on the web, because Google and all the other search engines now use LLMs in some way.

I'll start with an example of what I will call a "garbage in, garbage out" error: misinformation pulled off the web. Where else can these LLMs get information from? Note that in this article, ChatGPT refers to the ChatGPT-4 web-based model.

I wrote a book about Portuguese Americans and I post occasionally on a Medium publication called Portugal Calling. A recent post was about Portuguese Americans in Provincetown, Massachusetts, at the tip of Cape Cod. A famous Portuguese American author, John Dos Passos, was good friends with Ernest Hemingway, and they often socialized in Provincetown. I was curious where in town they lived and thought I might include pictures of their houses in the post. Here is what ChatGPT produced in response to a query about where the two authors lived in Provincetown:

"Ernest Hemingway lived in Provincetown, Massachusetts, on Cape Cod for 18 years, settling there in 1929 with his first wife, Katy, at 571 Commercial Street, right on the harbor.

"John Dos Passos lived at 571 Commercial Street in Provincetown, Massachusetts, which was a Cape Cod house he and his wife, Katharine Smith, purchased in 1929."

Note the two identical addresses, which aroused my suspicion. (If the addresses had been different, I probably would have simply accepted the information as correct.) The reality is that on the web, bits and pieces of information about the two authors are mixed up, and all of the information above relating to Hemingway is wrong. Katy and Katharine Smith are the same person. Was she married to both men at the same time? No; Katy was a childhood friend of Hemingway but was never married to him. Hemingway introduced her to Dos Passos and she eventually married Dos Passos. Hemingway was a frequent visitor to the Dos Passos home in Provincetown, but he never owned a home in that town, so that is incorrect also. "Garbage in, garbage out."

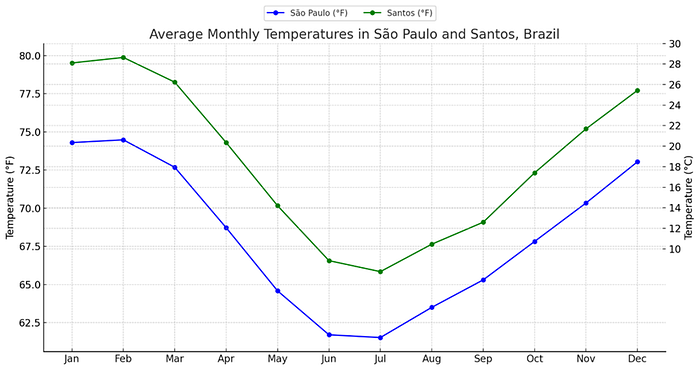

I wrote an article for The Quantastic Journal a few weeks ago about the locations of South American cities and their seaports and differences in elevation and average temperatures at the city and the port. I asked ChatGPT to make a graph of average monthly temperatures in São Paulo and Santos, Brazil. Here is the first chart it produced. Can you spot the error(s)?

At first the chart appeared to be satisfactory — 80°F is around 27°C. But notice that the Celsius scale is truncated and it shows temperatures way below 10°C (50°F) for the Brazilian cities. The Celsius scale and the Fahrenheit scale were not aligned. A second attempt produced a correct chart.
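The alignment check itself is simple arithmetic. As a minimal sketch (the function names and the axis range are illustrative assumptions, not anything ChatGPT produced), the Celsius axis limits should simply be the Fahrenheit limits run through the conversion formula:

```python
def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert a Fahrenheit temperature to Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def aligned_celsius_limits(f_min: float, f_max: float) -> tuple[float, float]:
    """Celsius axis limits that line up with the given Fahrenheit axis limits."""
    return fahrenheit_to_celsius(f_min), fahrenheit_to_celsius(f_max)


# Hypothetical Fahrenheit axis range for a chart of mild temperatures.
c_min, c_max = aligned_celsius_limits(50.0, 90.0)
print(round(c_min, 1), round(c_max, 1))  # 10.0 32.2
```

If the right-hand axis of a chart shows anything other than these converted limits, the two scales are not describing the same temperatures.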

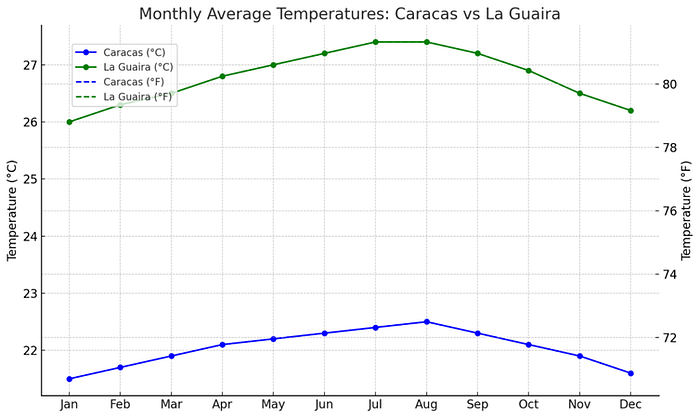

In querying ChatGPT for the same data for two more cities, this time in Venezuela, an accurate chart was produced on the first attempt. But there was still an issue with the chart. Can you find it?

A query to ChatGPT to correct the legend by showing only one colored line for each city produced a usable chart.

Sometimes it appears that ChatGPT can't count! For a humorous political post about 11 provinces of Canada joining the USA, I asked ChatGPT to make an image of a US flag with 61 stars. Below is what it produced: around 160 stars! After several more unsuccessful attempts I lost patience and hired a gig worker to make the illustration.

Apparently ChatGPT and LLMs in general function poorly in understanding or calculating latitude and longitude and location questions. Several examples follow.

Sometimes ChatGPT can't count!

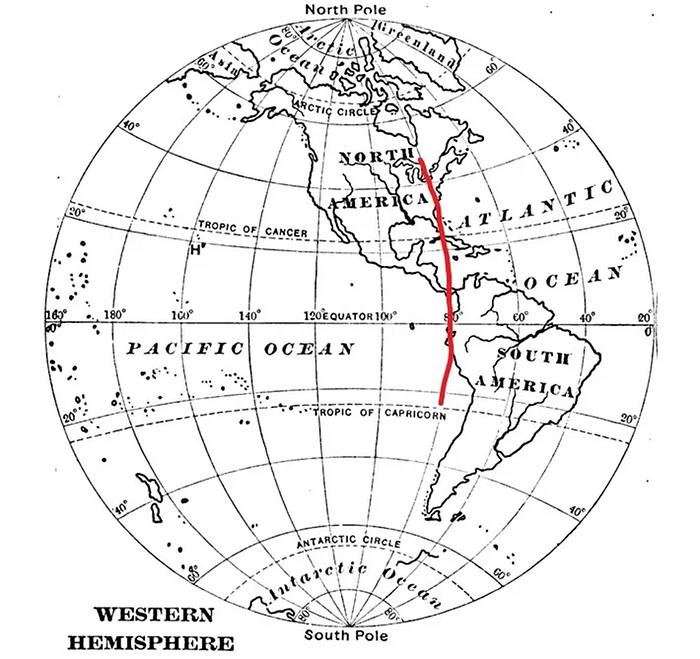

Some time ago I wrote a post titled "The Continent of Southeastern America" showing that almost all of South America is east as well as south of the United States. In looking at various US cities to illustrate how far east of the US the west coast of South America lies, I queried ChatGPT: "Would you fly over the westernmost part of Peru if you flew due south from Miami?" Below is the response.

"No, you would not fly over the westernmost part of Peru if you flew due south from Miami.

"Miami is located at approximately 80.19°W longitude. The westernmost point of Peru, Punta Pariñas, is at approximately 81.33°W longitude. Since Miami is east of this point, a flight due south from Miami would stay east of Peru, passing over parts of the Caribbean, Central America, and possibly western Colombia, but not over the westernmost part of Peru."

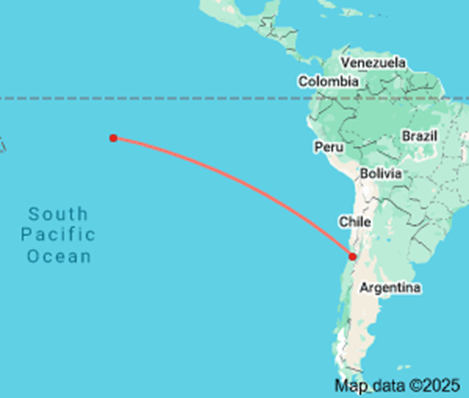

The longitudes given above are correct but the conclusion reached is simply wrong. Miami at 80°W longitude is farther east than the coast of Peru at 81°W, so, yes, you would fly over the coastal edge of Peru going due south (see map).
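The comparison ChatGPT got wrong can be written out in a few lines. This is only a sketch: the helper names are hypothetical, the two longitudes are the ones quoted in ChatGPT's answer, and the convention of treating west longitudes as negative numbers is an assumption made for the example.

```python
def signed_longitude(degrees: float, hemisphere: str) -> float:
    """Return longitude as a signed number: west is negative, east is positive."""
    return -degrees if hemisphere.upper() == "W" else degrees


def is_east_of(lon_a: float, lon_b: float) -> bool:
    """True if signed longitude lon_a lies east of lon_b (ignores the date line)."""
    return lon_a > lon_b


miami = signed_longitude(80.19, "W")          # value quoted in ChatGPT's answer
punta_parinas = signed_longitude(81.33, "W")  # westernmost point of Peru, same answer

print(is_east_of(miami, punta_parinas))   # True
print(round(miami - punta_parinas, 2))    # 1.14 degrees of longitude
```

Being east of the westernmost point only shows that Miami's meridian falls inside Peru's span of longitudes; whether it actually crosses land depends on the shape of the coast, which is what the map confirms.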

ChatGPT and Copilot are really bad at understanding or calculating latitude and longitude and answering location questions.

If you use ChatGPT, you know you often get an answer presented in two different formats with varying levels of detail. ChatGPT then asks which response format you prefer. For a post about South America I wanted to show a snow-capped mountain near the Equator to make a point about temperature decreasing with altitude, so I asked ChatGPT "What is the distance between Mt. Cotopaxi, Ecuador and the Equator." ChatGPT returned two responses and asked me which format I liked better. However, format was not the issue: one response calculated the distance at 70 miles and the other at 101 miles.

I received those responses about a month ago, so I asked the question again when I started writing this post about a week ago. I received this response which looks pretty accurate:

"Mount Cotopaxi in Ecuador is located at approximately 0.68° south latitude. Since 1 degree of latitude is about 111 kilometers (69 miles), the distance from Cotopaxi to the Equator is:

"0.68×111 km ≈ 75.5 km. That's about 47 miles south of the Equator in a straight north-south line."

Then I asked the same question again just before writing this article. This time ChatGPT's response was 31 miles (50 km) without showing any calculations. What happened to change the distance from 47 miles?

It is also important to note that Wikipedia states that Cotopaxi is at 0.405° south latitude, not 0.68°. That makes the distance 45 km or 28 miles. It is a pretty straight-forward question to ask: How far is Mount Cotopaxi from the Equator? So it is unsettling to receive such disparate query responses. Is it 101 miles? 70? 47? 31? 28? You try it — ask ChatGPT or some other search program and see what you get.
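The arithmetic behind these figures is easy to reproduce. The sketch below uses the 111 km per degree approximation quoted in the response above; the function names are illustrative assumptions. It also includes a degrees-minutes-seconds helper, because a coordinate printed as 0°40′50″ is about 0.68 decimal degrees, not 0.405, so it is worth checking which notation a reference page uses.

```python
KM_PER_DEGREE_LATITUDE = 111.0  # approximation quoted in the response above
KM_PER_MILE = 1.609344


def dms_to_decimal(degrees: float, minutes: float, seconds: float) -> float:
    """Convert degrees, minutes and seconds to decimal degrees."""
    return degrees + minutes / 60.0 + seconds / 3600.0


def distance_to_equator_km(latitude_deg: float) -> float:
    """Approximate north-south distance from a latitude to the Equator."""
    return abs(latitude_deg) * KM_PER_DEGREE_LATITUDE


def km_to_miles(km: float) -> float:
    return km / KM_PER_MILE


for lat in (0.68, 0.405):  # the two latitudes discussed in the text
    km = distance_to_equator_km(lat)
    print(f"{lat} deg -> {km:.1f} km, {km_to_miles(km):.0f} miles")
# 0.68 deg -> 75.5 km, 47 miles
# 0.405 deg -> 45.0 km, 28 miles

print(round(dms_to_decimal(0, 40, 50), 2))  # 0.68
```

Neither latitude produces 101, 70 or 31 miles, so those answers cannot be explained by a choice between these two coordinates.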

Another question I asked was "How far is the center of Santiago, Chile from the Pacific?" I was expecting an answer around 60 miles but ChatGPT responded 'thousands of kilometers.' (I did not record the actual amount.) It offered a map which is shown below.

I have no idea how ChatGPT picked this particular point out in the ocean to represent 'the Pacific,' and I should have phrased my question more precisely by adding 'Pacific Coast,' but keep in mind that a human would have easily understood the question.
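For comparison, a rough great-circle calculation supports the expected answer. This is a sketch under stated assumptions: the coordinates for central Santiago and for Valparaíso (used here as a stand-in for "the Pacific coast") are approximate values supplied for illustration, and the Earth is treated as a sphere of radius 6,371 km.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius, spherical approximation
KM_PER_MILE = 1.609344


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


# Approximate coordinates (assumed for this example; south and west are negative).
santiago = (-33.45, -70.67)
valparaiso = (-33.05, -71.62)

km = haversine_km(*santiago, *valparaiso)
print(f"{km:.0f} km, {km / KM_PER_MILE:.0f} miles")
```

With these inputs the result comes out at roughly 60 miles, nowhere near thousands of kilometers.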

ChatGPT 'hallucinated' a bridge.

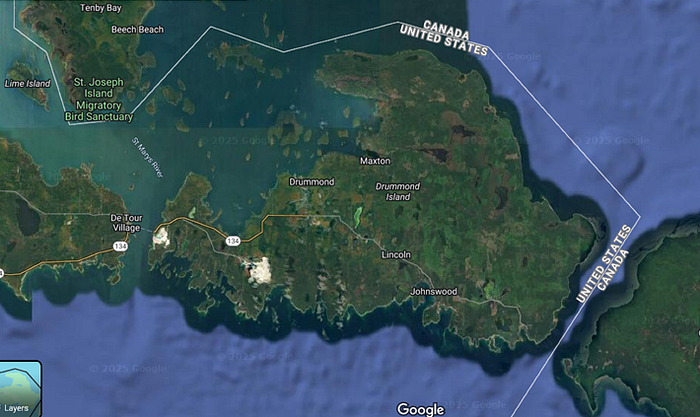

Sometimes the LLM creates an error. I was writing a post contrasting two Great Lakes islands that are part of Michigan. Isle Royale in Lake Superior is basically pristine wilderness with no full-time population, while Drummond Island in Lake Huron is touristy, commercialized and a popular place to drive off-road vehicles on rugged trails. I asked ChatGPT about accessibility to the two islands to find out about ferries and air access. Here is the response:

"Isle Royale is primarily accessed by ferry, seaplane, or private boat, and it is known for its remote and isolated nature. In contrast, Drummond Island is connected to the mainland by a bridge, making it more easily accessible by road."

There is no bridge to Drummond Island! ChatGPT invented it (using LLM terminology, 'hallucinated it'), perhaps confusing the dashed lines used to show ferry routes on maps with a road. Apparently ChatGPT thought the ferry route to De Tour Village, such as on the Google map below, was a bridge.

Prize-winning gobbledygook

Here is something strange from ChatGPT unrelated to my Medium posts. I happen to be studying to learn Portuguese (which is a gendered language) and I came across a statement on the web that the names of the continents in Portuguese are all masculine. I thought, 'That can't be true.' I knew Europa was written in feminine form (ending in 'a'). So I asked ChatGPT: "In Portuguese, are the names of the continents all masculine?"

Here is ChatGPT's response. I will let you wander through this prize-winning gobbledygook without further comment (what follows is verbatim):

"Yes, in Portuguese, the names of the continents are typically treated as masculine nouns. Here are the names of the continents in Portuguese:

- África (Africa) — feminine

- América (America) — feminine

- Ásia (Asia) — feminine

- Europa (Europe) — feminine

- Oceania (Oceania) — feminine

"In Portuguese, the genders of the names of the continents are not universally masculine.

"Continents ending in -a are generally feminine, while those ending in a consonant or -o are masculine.

"For example, Africa is feminine, but Antarctica is non-binary.

"The gender classification can vary, and some countries may have neutral usage depending on context.

"Thus, the gender of the names of continents in Portuguese can differ based on their endings and usage."

This degree of confusion is unsettling to the researcher.

I'm currently working on an article for Medium about the proportion of world land area in the Northern vs. Southern Hemispheres and the proportion of world population that lives in each hemisphere. Land mass figures given on a simple Google search are generally accurate within 1% or so, but I thought it was worthwhile to double check by asking Microsoft's Copilot on its search engine, Bing: "What percent of the earth's land mass is in the Northern vs. the Southern Hemisphere?" Here is the response I received:

"Southern Hemisphere: ~32% land, ~81% water

"Northern Hemisphere: ~68% land, ~61% water"

The figures for land are approximately correct, and note that 32% + 68% = 100%. But the figures for water, 81% and 61%, make no sense as presented: they add up to 142%, so they cannot be the two hemispheres' shares of anything. Note also that the query made no reference to water.
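One possible reading, offered here only as an assumption about what Copilot mixed in, is that the water figures describe the share of each hemisphere's own surface that is covered by water. Under that reading, and assuming the two hemispheres have equal surface area, the land split can be derived from the water figures as a consistency check. The function name is illustrative.

```python
def land_share_by_hemisphere(north_water_pct: float, south_water_pct: float) -> tuple[float, float]:
    """Split of world land between hemispheres, assuming equal hemisphere areas.

    Inputs are the percent of each hemisphere's surface covered by water.
    """
    north_land = 100.0 - north_water_pct
    south_land = 100.0 - south_water_pct
    total_land = north_land + south_land
    return 100.0 * north_land / total_land, 100.0 * south_land / total_land


north, south = land_share_by_hemisphere(61.0, 81.0)
print(round(north), round(south))  # 67 33
```

That lands close to the 68% and 32% land figures, which suggests the response combined two different statistics in one list without saying so.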

There seems to be a tendency for LLMs, in trying to be helpful, to add things that weren't asked about and get 'in trouble.'

We saw that above when ChatGPT brought up the names of the wives of Hemingway and Dos Passos which were not part of the query, and we'll see other examples below. In a way, this may be a good thing because it reminds you that you need to assume there may be errors even in the portion of the answer that appears to be correct.

To return to the hemisphere story, I knew difficulties were ahead when I asked Google about population in the two hemispheres. Figures ranged from 90% Northern and 10% Southern to 80% and 20%. The 90%-10% split was by far the most common, echoed by other programs, but clearly these were approximations. A Google query for specific figures for just the percentage of world population in the Northern Hemisphere produced a wide array of answers on the first few screens alone:

93%; 90%; 88%-90%; 88%; 87%-90%; 87%; 85%; 80%

I took this wide variation as a challenge to make my own calculations. There are only 35 nations fully in the Southern Hemisphere (excluding island states with tiny populations), so those 35 are easy to identify and their populations easy to tally. The real difficulty is with eight additional nations that lie astride the Equator, two of which, Indonesia and Brazil, have very large populations. My plan was to use multiple LLM programs (ChatGPT, Copilot, Google) to estimate the population split in these eight nations. In the process, more of the limits of LLMs' capabilities were exposed. Some examples follow, with the most egregious first:
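The tally described above amounts to one sum. The sketch below shows the shape of the calculation only: the function name is hypothetical and every number in the example is a round placeholder, not real population data.

```python
def southern_share_pct(world_pop: float,
                       fully_southern_pop: float,
                       straddling: list[tuple[float, float]]) -> float:
    """Percent of world population living south of the Equator.

    straddling holds (population, fraction_living_south) pairs for the
    nations that lie astride the Equator.
    """
    south = fully_southern_pop + sum(pop * frac for pop, frac in straddling)
    return 100.0 * south / world_pop


# Placeholder inputs for illustration only (not real figures).
example = southern_share_pct(
    world_pop=1000.0,
    fully_southern_pop=50.0,
    straddling=[(100.0, 0.5), (200.0, 0.25)],
)
print(example)  # 15.0
```

The result is only as good as the fraction estimated for each straddling nation, which is exactly where the LLM answers went wrong.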

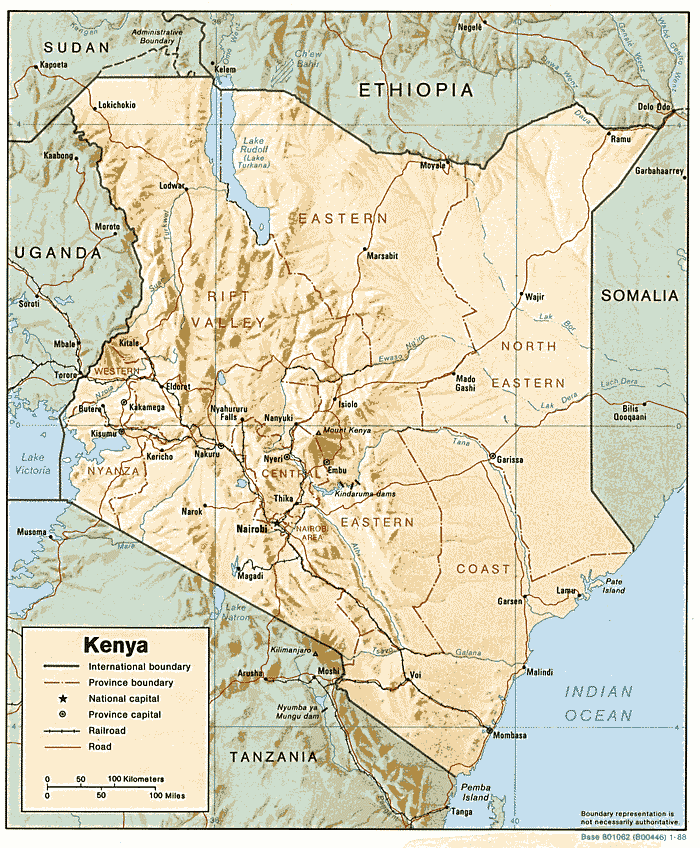

Kenya straddles the Equator so I asked "Approximately what percentage of the population of Kenya lies north vs. south of the Equator?" Here is the response I received from Copilot:

"The equator slices through central Kenya, but the majority of land and population lie north of it. Estimated split:

- Northern Hemisphere: ~85–90% of the population

- Southern Hemisphere: ~10–15%

"Key cities like Nairobi, Mombasa, Kisumu, and Eldoret are all north of the equator, reinforcing the northern dominance.

"Want to explore how these populations are distributed across urban vs rural areas, or how they've changed over time?"

This response is completely incorrect. Essentially, Copilot had both its figures and its city locations reversed with respect to the Equator (see map above). Three of the four large cities mentioned lie south of the Equator, not north; only Eldoret lies north. The population percentages given, based on what I learned later, are not only reversed but wildly inaccurate.
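Checking a city's hemisphere needs nothing more than the sign of its latitude. In this sketch the latitudes are approximate values supplied for illustration (south is negative), and the function name is an assumption.

```python
# Approximate city latitudes in decimal degrees (assumed values; south is negative).
CITY_LATITUDES = {
    "Nairobi": -1.29,
    "Mombasa": -4.04,
    "Kisumu": -0.09,
    "Eldoret": 0.51,
}


def hemisphere(latitude_deg: float) -> str:
    """Classify a latitude as Northern, Southern, or exactly on the Equator."""
    if latitude_deg > 0:
        return "Northern"
    if latitude_deg < 0:
        return "Southern"
    return "Equator"


for city, lat in CITY_LATITUDES.items():
    print(f"{city}: {hemisphere(lat)}")
```

Kisumu is the close call, sitting only a few miles south of the line, but the other three are unambiguous.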

To give Copilot a second chance, for this current article I asked the question again, and below is the new answer I received.

"The Equator runs almost horizontally through the center of Kenya.

"Major cities like Nairobi, Mombasa, Kisumu, and Eldoret are all south of the Equator.

"However, the larger portion of Kenya's territory and population is north of the Equator, including populous counties like Kakamega, Bungoma, and Uasin Gishu.

"Estimated Population Distribution: While exact census data by hemisphere isn't published, geographic and demographic analysis suggests:

"Hemisphere Estimated Share of Kenya's Population

- North of the Equator ~60–65%

- South of the Equator ~35–40%

"This estimate is based on county-level population data and the location of the Equator relative to Kenya's administrative boundaries."

This time, Copilot correctly reversed the locations of the first three cities but it incorrectly moved Eldoret into the Southern Hemisphere. The population percentages are about right but still reversed: it should be about 65% in the Southern Hemisphere, not the Northern. You can clearly see the southern population orientation of the nation by looking at the numerous population density maps of Kenya on the web.

When I asked Copilot about the division of Brazil's population by hemisphere I was told:

"Brazil's population is ~213 million. It is the largest country entirely in the Southern Hemisphere."

Once again, the added information is incorrect. The Equator runs close to the Amazon River and there is Brazilian territory north of the Equator, although this is mostly Amazon rainforest country and not many of Brazil's people live there. (See map at the top of this post.) The extra claim that Brazil lies entirely in the Southern Hemisphere is a warning that the rest of the answer may be suspect, although Brazil's approximate population is correct in this case.

In summary, what is a researcher to do with so much incorrect information coming from various LLM programs? As I write, there is a lot of hype about the abilities of ChatGPT-5, the newest version. We'll see. I look forward to giving it some challenging latitude and longitude questions! And, by the way, I don't think LLMs are ready to take over the world — yet.

Meanwhile I will continue to use multiple programs to compare answers. I also suggest that readers look at a recent Medium post by Edwin Lisowski about programs that automatically query multiple AI sites at one time, rather than typing in the query repeatedly, as I have been doing.

I hope you enjoyed this post and found it helpful. I'm a retired geography professor. Most often I write "The One-Minute Geographer." You can get an email when I publish by clicking this link (a few posts each month): https://jimwfonseca.medium.com/subscribe
