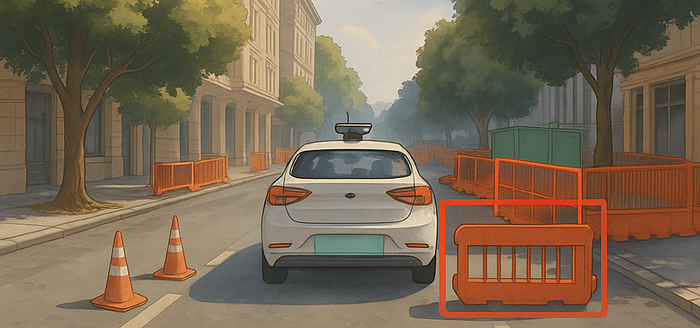

In my last year at Cruise (before we got completely absorbed into General Motors), I encountered a construction zone scenario that crystallized the fundamental limitation of current robotics AI: that robots could see isolated elements but missed the big picture.

Our L4 perception system was actually excellent at detecting individual cones and barriers (with 90%+ accuracy), but it easily missed what those objects meant together. A combination of cones and barriers forming a loose line typically signals a closed lane to human drivers, who intuitively understand its intent: to redirect traffic or block entry. But perception systems don't infer intent. If even one cone is missed or the gaps appear just wide enough, the system might interpret the scene as a drivable lane.
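To make that failure concrete, here is a minimal sketch in Python. It is not Cruise's code: the names (`Cone`, `per_object_says_drivable`, `looks_like_closure`), the 2.0 m vehicle width, and the thresholds are all assumptions chosen for illustration. It contrasts a per-object gap check with a crude group-level check that looks at the row of cones as a whole.

```python
from dataclasses import dataclass
from statistics import median

VEHICLE_WIDTH_M = 2.0  # assumed vehicle width, illustrative only


@dataclass(frozen=True)
class Cone:
    x: float               # position along the row of cones, in meters
    detected: bool = True  # False simulates a missed detection


def gaps(cones):
    """Spacings between consecutive *detected* cones, in meters."""
    xs = sorted(c.x for c in cones if c.detected)
    return [b - a for a, b in zip(xs, xs[1:])]


def per_object_says_drivable(cones, margin_m=0.5):
    """Naive per-object rule: any gap wider than the vehicle looks passable."""
    return max(gaps(cones), default=0.0) > VEHICLE_WIDTH_M + margin_m


def looks_like_closure(cones, min_cones=4):
    """Crude group-level rule: several cones with a tight *typical* spacing
    are read as one closure, even if a single gap happens to be wide."""
    spacing = gaps(cones)
    detected = sum(c.detected for c in cones)
    return detected >= min_cones and bool(spacing) and median(spacing) <= VEHICLE_WIDTH_M


# Six cones placed every 1.5 m, then the same row with one cone missed.
full_row = [Cone(x=1.5 * i) for i in range(6)]
one_missed = [Cone(c.x, detected=(i != 3)) for i, c in enumerate(full_row)]

print(per_object_says_drivable(full_row))    # False
print(per_object_says_drivable(one_missed))  # True: the 3.0 m gap looks like a lane
print(looks_like_closure(one_missed))        # True: the row still reads as a closure
```

The group-level rule here is still a hand-written heuristic, so it only illustrates the gap between detecting objects and interpreting them together. It is not a claim about how a learned model would do it.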

This kind of failure mode unfortunately had catastrophic implications, as the vehicle could drive directly into an active work zone with construction workers, open manholes, heavy machinery or debris, while simultaneously blocking traffic behind it until human safety operators intervened.

It's like being great at recognizing individual letters but terrible at reading words. Our systems could see the pieces but couldn't grasp the bigger picture. It's a fundamental limitation, and Field AI and Genesis AI, with more than half a billion dollars in combined funding, are now betting that overcoming it will define the next decade of robotics.

This drove home a realization: in many critical cases the bottleneck isn't the sensors but the intelligence architecture, and the stakes are measured in human lives.

What Are Foundational Models in Robotics?

Let's first understand what makes foundational models different from traditional robotics software.

Traditional robotics relies on task-specific models trained on narrow datasets, using rule-based logic like "If cone detected → slow down." These systems predictably fail on edge cases not explicitly programmed during development.
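A toy sketch of that rule-based style, in Python, shows why edge cases slip through. The rule table, labels, and action names are hypothetical and not taken from any production stack.

```python
# Hypothetical rule table: each known object label maps to one action.
RULES = {
    "pedestrian": "stop",
    "barrier": "change_lane",
    "cone": "slow_down",
}

# Most conservative action first.
PRIORITY = ["stop", "change_lane", "slow_down"]


def plan(detected_labels):
    """Return the highest-priority action triggered by any known label.

    Labels with no rule are silently ignored, which is exactly the
    edge-case weakness: the planner falls back to "proceed".
    """
    triggered = {RULES[label] for label in detected_labels if label in RULES}
    for action in PRIORITY:
        if action in triggered:
            return action
    return "proceed"


print(plan(["cone"]))                # slow_down
print(plan(["cone", "pedestrian"]))  # stop
print(plan(["open_manhole"]))        # proceed: no rule was written for this case
```

Every new hazard needs a new rule, and anything nobody anticipated defaults to the least cautious behavior.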

Today's robotics AI typically blends hand-tuned heuristics with layers of neural network processing. These algorithms attempt to combine inputs from LiDAR, radar, and cameras into a coherent view of the world. One example of a very simple perception architecture would be something like:

Input: raw sensor data (LiDAR, Radar, Camera, IMU)

↓

Neural Net: detects and classifies objects, and may even infer scene semantics

↓

Kalman Filter: fuses those outputs to estimate precise object tracks or ego-motion

↓

Control/Planning: uses this estimate to decide what to do next

This pipeline works within constraints, but it is rigid, often heuristic-heavy, sensitive to edge cases, and still reliant on external sources like HD maps to stay accurate. In environments that shift, or in scenarios the system wasn't explicitly trained on (unusual object arrangements, off-road terrain), these systems struggle to generalize.
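The tracking and planning stages of the pipeline above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a real stack: the neural net is replaced by a plain list of range measurements to one object, the filter is a scalar (one-dimensional) Kalman filter, and the function names, noise values, and 10 m braking threshold are all hypothetical.

```python
BRAKE_RANGE_M = 10.0  # assumed braking threshold, illustrative only


def kalman_predict(mean, var, velocity, dt, process_var):
    """Propagate the range estimate forward in time; uncertainty grows."""
    return mean + velocity * dt, var + process_var


def kalman_update(mean, var, measurement, meas_var):
    """Fuse the prior estimate with one noisy measurement; uncertainty shrinks."""
    gain = var / (var + meas_var)
    new_mean = mean + gain * (measurement - mean)
    new_var = (1.0 - gain) * var
    return new_mean, new_var


def run_pipeline(measurements, closing_speed=-1.0, dt=0.1):
    """Detector output (stubbed as a list) -> Kalman track -> planning decision."""
    mean, var = measurements[0], 4.0  # initialize the track from the first detection
    for z in measurements[1:]:
        mean, var = kalman_predict(mean, var, closing_speed, dt, process_var=0.1)
        mean, var = kalman_update(mean, var, z, meas_var=1.0)
    # Planning stage: a single hand-tuned threshold on the fused estimate.
    return "brake" if mean < BRAKE_RANGE_M else "cruise"


print(kalman_update(20.0, 4.0, 18.0, 4.0))  # (19.0, 2.0)
print(run_pipeline([30.0, 29.8, 30.1]))     # cruise
print(run_pipeline([9.0, 8.8, 8.9]))        # brake
```

Note where the hand tuning sits: the noise variances and the braking threshold are fixed numbers, and the planner only ever sees a single fused range. Nothing in this structure represents what a group of objects means together.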

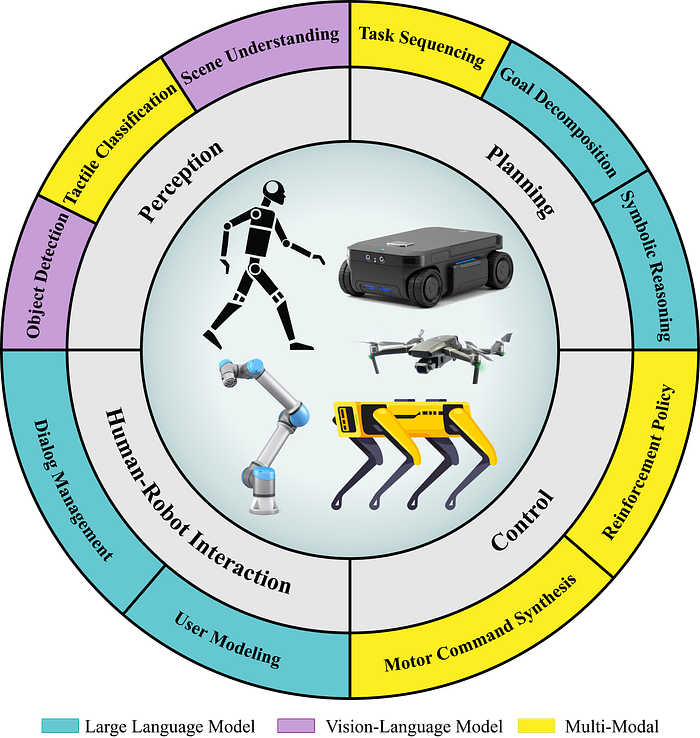

Foundational models, on the other hand, allow systems to transfer knowledge across tasks and environments in ways traditional systems cannot. Instead of building narrow models trained on specific tasks, foundational models are large and trained on vast, multimodal data like text, images, video, simulation logs, and real-world sensor streams.

Think of them as the GPT-4 of robotics, capable of:

- Understanding LiDAR, radar, and camera inputs in a unified way

- Generalizing across tasks and environments, e.g., from warehouse operations to factory floors to urban streets

- Reasoning about spatial context and object interaction

- Making safe decisions even in never-before-seen situations

This shift from modular, rule-based pipelines to unified models that learn patterns is what makes foundational models so exciting for robotics.

The biggest breakthrough of foundational models is their ability to reason in unfamiliar situations, known as zero-shot reasoning.

Instead of relying on exposure to every possible scenario during training, foundational models can generalize from patterns learned across vast and diverse datasets. This allows them to interpret and respond to novel situations — like that misinterpreted construction zone — not by rote memorization, but by recognizing higher-level structures and intent. It's a level of reasoning that traditional robotics systems have consistently failed to achieve.

The Rise of Foundational Models

Field AI's $405 million Series A and Genesis AI's $105 million seed raise aren't just notable for their size. They also represent two fundamentally different ways of applying foundational models to one of robotics' most persistent challenges: building systems that can reliably operate in complex, unstructured environments.

Field AI is taking a full-stack approach by building a physics-first, "risk-aware" platform that aims to operate in unstructured environments. Their Field Foundational Models are designed to replace the entire traditional robotics stack with a single, unified system. By fusing perception, planning, and control into a multimodal model, they believe these models can outperform today's modular pipelines by orders of magnitude.

Genesis AI, by contrast, is focused on enabling the broader ecosystem. Instead of replacing a robotics company's existing architecture, they're building plug-and-play infrastructure that can enhance it — an intelligence layer that integrates seamlessly into current workflows, much like OpenAI for robots. Using high-fidelity physics simulation alongside real-world sensor data, they aim to provide generalizable capabilities to a wide range of partners, from warehouse robotics to humanoid platforms like Figure and 1X.

The foundational models from companies like Field AI and Genesis are not just slightly better; their capabilities in perception, planning, and generalization now outstrip what old pipelines can deliver, especially in unstructured, real-world environments.

This is arguably the hallmark of a generational shift: when incremental tweaks to legacy architectures can no longer close the gap, and the cost of maintaining "the old way" outweighs the risk of adopting the new. Recent funding rounds didn't just reward technical promise; they recognized that in 2024, three powerful enablers finally aligned:

- Edge compute became affordable and powerful enough to support real-time inference in embedded systems [CNBC report, CDOTrends]

- The scale and diversity of both real and synthetic training data reached critical mass, enabling reliable generalization beyond just known environments [MIT, Covariant]

- Regulators began accepting probabilistic, data-driven safety measures, moving beyond requirements for fully deterministic validation [ScaleVP]

These massive 2025 rounds suggest we might be hitting an inflection point where foundational models become the dominant approach in robotics. The question is whether companies still building traditional stacks can adapt fast enough, or if they'll find themselves trying to catch up to a fundamentally different paradigm.

What We're Betting On for the Next Decade

Lately, I've gotten more interested in not just building robotics systems, but also zooming out to see the bigger picture and understanding robotics as platforms and ecosystems that will shape our industry for decades.

The back-to-back fundraises by Genesis AI and then Field AI within the last few weeks immediately caught my attention because they feel different from the usual robotics hype cycles I've seen. Here's what I've observed:

They're solving the most painful technical problems, at scale. Field AI's physics-grounded models target the fundamental failure modes I've seen in robotics systems. Their approach of embedding physics understanding and uncertainty handling directly into the model could address the (non-generalizable) decision-making that causes systems like ours to misread construction zones. Whether this actually delivers safer, more robust intelligence remains to be proven in practice, but the technical direction makes sense.

They're being smart about markets. Instead of chasing the fantasy of general-purpose household robots, these companies are starting with structured, high-stakes environments: logistics hubs, industrial sites, defense applications. Places where safety and reliability matter more than versatility, and customers will pay premium prices for step-function improvements.

They're building platforms, not products. Genesis AI is building the intelligence layer that existing companies can plug into their workflows. Field AI wants to be the brain that works across different robot bodies: arms, vehicles, walking machines. Both create network effects where every new integration makes the platform better for everyone else. Does this sound closer to an operating system?

The timing makes sense. Affordable edge compute, massive training datasets, and regulatory acceptance of probabilistic safety systems all converged in 2024, as we just discussed. Foundational models have gone from interesting research to deployment-ready technology.

As investors recognize this shift, capital is flowing toward the companies best positioned to define what comes next, unlocking robotics' trillion-dollar potential to automate and advance how humans work, live, and interact through the next decade. Today, the stakes aren't just economic. They're about giving people time back and reimagining how intelligence moves through the physical world.

Lessons from the Last Decade

Many robotics failures in the past decade stem from a web of factors: technical, financial, operational, and yes, sometimes luck. Engineering talent and capital alone aren't guarantees. Sometimes, as Cruise painfully demonstrated, leadership choices, culture, and risk mismanagement — disregarding transparency and safety — can unravel even the most promising advances.

Cruise (shutdown 2025) had billions in funding, world-class talent, and advanced AI. I saw firsthand how our technology performed on par with Waymo when we rolled out to the public in 2022. But a pedestrian incident in late 2023 — followed by withheld information — led to permit suspensions and intense regulatory scrutiny. By 2025, Cruise was shut down and absorbed back into GM. Everyone in my org was laid off. Up close, it didn't feel like a failure of technology — it was a failure of leadership decisions, transparency, and responsible execution. Robotics success demands more than innovation; it requires integrity, regulatory alignment, and clear communication at every level.

Broadening the view, others in the field faced similar fates. The SF robotics world is quite small, and it's surreal to realize I've crossed paths with some of these founders and early engineers. Rethink Robotics (shutdown 2018) brought collaborative robots into the spotlight but misjudged product readiness and market timing. Anki, founded by Boris Sofman (shutdown 2019), created joyful consumer robots but couldn't sustain a viable business model. Starsky Robotics (shutdown 2020) made a bold bet on remote-driven trucks but underestimated the regulatory hurdles and the sheer complexity of validating edge-case safety at scale.

Meanwhile, survivors like Zipline, Tesla, and Waymo succeeded — so far — by marrying innovative technology with practical, resilient business models tailored to real-world demands. Zipline carved out a clear niche by delivering urgent medical supplies in geographies where speed and reliability were critical. Tesla embedded autonomy into cars people already wanted, using its vast fleet data to iterate quickly and reduce costs through vertical integration. Waymo took a cautious, safety-first path, focusing on highly mapped environments and earning regulatory trust through rigorous testing.

Of course, strategy alone doesn't tell the whole story. Timing, market readiness, capital, and a bit of luck played their part too. In an industry where even strong ideas can falter, it's often the combination of execution, environment, and circumstance that separates those who scale from those who stall. More importantly, effective leadership and risk management are no longer optional extras here; they are the foundation on which the next generation of robotics innovation will be built.

What about the technology itself?

This is where foundational models offer a real path forward, beyond just a "better AI." By unifying sensing, reasoning, and action within a physics-grounded, adaptable framework, foundational models promise greater resilience to edge cases and deployment failures. Trained on massive real and synthetic data, they enable safer policies to be stress-tested in simulation, reducing real-world risk and potentially shaving years off validation cycles [International Journal of Robotics Research, 2025].

Moreover, foundational architectures encourage building unified platforms over non-generalizable, bespoke stacks, spreading fixed costs across broader markets and easing pressure on unit economics. But big questions remain: How do we balance generalization with task-specific specialization? Validate safety without decade-long trials? Build leadership cultures that prioritize both transparency and measured risk-taking?

In short, foundational models don't solve everything. But they tilt the equation toward safer, more scalable, and economically viable robotics. The next chapter depends as much on leadership innovation as on breakthroughs in AI, a uniquely hard, but promising frontier [ICRA, 2025].

Concluding Thoughts

We stand at a unique moment in robotics history. Over half a billion dollars flowing into Field AI and Genesis AI isn't just venture capital chasing the next shiny object; it's a recognition that we've finally assembled the pieces needed to solve robotics' fundamental intelligence problem.

The construction zone scenario that haunted my Cruise days was more than a technical problem to solve. It represents a broader challenge: building machines that can truly reason and act with context in the messy, unpredictable real world. Real-world generalization, the ability of robots to understand complex environments and adapt, remains one of the hardest problems in robotics.

What gives me optimism is that foundational models may finally close this gap. By unifying sensing, reasoning, and action within physics-grounded frameworks, they offer the adaptability needed to navigate edge cases and reduce costly deployment failures. Trained on massive real and synthetic datasets, they promise shorter validation cycles and more robust behavior long before a robot hits the field. And by replacing brittle, bespoke stacks with unified platforms, they enable scalability and healthier unit economics across industries.

The next AI wave won't just write code or compose poetry. It will pack boxes, fix solar panels, clean up disaster zones, and help rebuild our cities. I hope the companies leading this transformation pair cutting-edge AI with principled leadership, safety-first cultures, and disciplined execution.

I hope this is more than a technological leap — a step-change not just toward smarter machines, but toward systems grounded in the humanity of their builders and the physical realities of the world they serve.

Moorissa is an AI and robotics engineer with nearly a decade of experience leading autonomous systems at Tesla, Cruise, and NASA. She's passionate about shaping the next generation of intelligent machines — systems that are not only technically rigorous and scalable, but also grounded in societal responsibility.