This is part two of Demystify Knowledge RAG frameworks. In the previous article, we explored Knowledge RAG concepts and Microsoft GraphRAG's data ingestion and query capabilities. In this article, we continue by exploring SciPhi-AI/R2R, an open-source RAG engine, to perform automatic knowledge graph construction during file ingestion. We look at R2R's GraphRAG capabilities and then at a few approaches for converting unstructured text into structured data.

What is R2R?

RAG to Riches (R2R) is a tool that provides powerful document ingestion, search, and RAG capabilities, with many useful features, including:

- Document Ingestion and Management

- LLM-Powered Search (Vector, Hybrid, and Knowledge Graph)

- User Management

- Observability and Analytics

- Dashboard UI

R2R offers Hypothetical Document Embeddings (HyDE), an advanced technique that can significantly enhance RAG performance. R2R can also chain the results of multiple search pipelines, such as keyword search, vector search, and similarity search, into RAG for final response generation. Additionally, it supports search ranking, local RAG, multiple LLMs, and advanced RAG.
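HyDE embeds an LLM-generated hypothetical answer instead of the raw question, so retrieval matches answer-like text rather than question-like text. The following is a minimal, self-contained sketch of that idea, not R2R's implementation: `toy_embed` (a bag-of-words stand-in for a real embedding model) and `fake_llm` (a stub standing in for an LLM such as llama3) are hypothetical placeholders.

```python
import math
from collections import Counter
from typing import Callable

Vector = dict[str, float]


def toy_embed(text: str) -> Vector:
    """Hypothetical placeholder embedding: an L2-normalised bag of words.

    A real pipeline would call an embedding model such as mxbai-embed-large here.
    """
    words = (w.strip(".,!?'\"").lower() for w in text.split())
    counts = Counter(w for w in words if w)
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {w: c / norm for w, c in counts.items()}


def cosine(a: Vector, b: Vector) -> float:
    # Both vectors are already normalised, so the dot product is the cosine similarity.
    return sum(v * b.get(k, 0.0) for k, v in a.items())


def hyde_search(
    query: str,
    docs: list[str],
    generate: Callable[[str], str],
    embed: Callable[[str], Vector] = toy_embed,
    top_k: int = 2,
) -> list[str]:
    # 1. Ask the LLM for a hypothetical answer document instead of embedding the question.
    hypothetical = generate(query)
    # 2. Embed the hypothetical answer and rank the real chunks by similarity to it.
    query_vec = embed(hypothetical)
    ranked = sorted(docs, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:top_k]


def fake_llm(query: str) -> str:
    # Hypothetical stub: a real setup would prompt an LLM (e.g. llama3 via Ollama).
    return "Bob Cratchit is the father of Tiny Tim."


docs = [
    "Scrooge was the partner of Marley in business.",
    "Tiny Tim is the youngest son of Bob Cratchit.",
    "The ghost showed Scrooge things past.",
]
print(hyde_search("Who is Tiny Tim's father?", docs, fake_llm, top_k=1))
# → ['Tiny Tim is the youngest son of Bob Cratchit.']
```

The point of the design is that the hypothetical answer shares vocabulary (and, with a real model, meaning) with the answering chunk even when the question itself does not.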

R2R / Local GraphRAG

In R2R, knowledge graphs are created locally using the newly released Triplex model.

Triplex — SOTA LLM for Knowledge Graphs

Triplex is an open-source model for 10x cheaper knowledge graph construction. See here for more details: https://www.sciphi.ai/blog/triplex

The model converts simple sentences into lists of "semantic triples," a format for expressing graph data. See the examples below:

Input text 1:

Paris is the capital of France

# Inputs

# Entity Types <- CITY, COUNTRY

# Relationships <- CAPITAL_OF, LOCATED_IN

# Text <- Paris is the capital of France

# Output -> (subject > predicate > object)

CITY: Paris > CAPITAL_OF > COUNTRY: France

CITY: Paris > LOCATED_IN > COUNTRY: France

Input text 2:

Vincent van Gogh, a post-impressionist painter, created "The Starry Night" in 1889. This iconic artwork, with its swirling clouds, brilliant stars, and crescent moon, exemplifies the artist's unique style and emotional intensity. Van Gogh's bold use of color and expressive brushstrokes influenced many subsequent art movements, including Expressionism and Fauvism.

# Inputs

# Entity Types <- ARTIST, ARTWORK, ART_MOVEMENT

# Relationships <- CREATED_BY, BELONGS_TO_MOVEMENT

# Text <- Vincent van Gogh, a post-impressionist painter, created "The Starry Night" in 1889. This iconic artwork, with its swirling clouds, brilliant stars, and crescent moon, exemplifies the artist's unique style and emotional intensity. Van Gogh's bold use of color and expressive brushstrokes influenced many subsequent art movements, including Expressionism and Fauvism.

# Output -> (subject > predicate > object)

ARTIST:Vincent van Gogh > BELONGS_TO_MOVEMENT > ART_MOVEMENT:post-impressionist

ARTWORK:The Starry Night > CREATED_BY > ARTIST:Vincent van Gogh

ARTIST:Vincent van Gogh > BELONGS_TO_MOVEMENT > ART_MOVEMENT:Expressionism

ARTIST:Vincent van Gogh > BELONGS_TO_MOVEMENT > ART_MOVEMENT:Fauvism

To configure R2R to automatically extract relationships and build a knowledge graph during file ingestion, we need to set up a local LLM, install the R2R tools, and get some test data.

What specific problem does the Triplex model try to solve?

Triplex is a model specially trained for triplet extraction. It achieves results comparable to GPT-4o at a significantly lower cost, and it outperforms zero-shot prompting, which in turn improves knowledge graph query quality.
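To make the triple format concrete, here is a minimal sketch that parses lines in the `TYPE:name > PREDICATE > TYPE:name` display format used in the examples above into tuples that are ready to load into a graph. It assumes exactly that display format; Triplex's raw model output may be structured differently, and `Triple` and `parse_triples` are hypothetical names, not part of R2R.

```python
import re
from typing import NamedTuple


class Triple(NamedTuple):
    subject_type: str
    subject: str
    predicate: str
    obj_type: str
    obj: str


# TYPE:subject > PREDICATE > TYPE:object, with optional spaces around ":" and ">"
TRIPLE_LINE = re.compile(
    r"^\s*(\w+)\s*:\s*(.+?)\s*>\s*(\w+)\s*>\s*(\w+)\s*:\s*(.+?)\s*$"
)


def parse_triples(text: str) -> list[Triple]:
    """Return one Triple per matching line; headers such as '# Output' are skipped."""
    triples = []
    for line in text.splitlines():
        match = TRIPLE_LINE.match(line)
        if match:
            triples.append(Triple(*match.groups()))
    return triples


sample = """# Output -> (subject > predicate > object)
CITY: Paris > CAPITAL_OF > COUNTRY: France
CITY: Paris > LOCATED_IN > COUNTRY: France"""

for t in parse_triples(sample):
    # Cypher-like rendering of each edge, e.g. (Paris)-[:CAPITAL_OF]->(France)
    print(f"({t.subject})-[:{t.predicate}]->({t.obj})")
```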

Next, let's set up a local environment for local RAG.

Setup local RAG environment

1. Get Test Data

Let's start with some data. To compare data ingestion results, I chose the same input data used in the Microsoft GraphRAG tutorial: Charles Dickens' A Christmas Carol from Project Gutenberg.

curl https://www.gutenberg.org/cache/epub/24022/pg24022.txt > ./book.txt

2. Get Ollama and LLM Models

This assumes you have Ollama installed locally. If not, follow the installation instructions on the Ollama website.

To run local RAG, we need the following models:

# pull triplex

ollama pull sciphi/triplex

# pull the embedding / RAG LLMs; pick the ones you prefer

ollama pull llama3

ollama pull mxbai-embed-large

# leave this running in a separate terminal if Ollama is not running as a service

ollama serve

To verify that Ollama is running locally, check that port `11434` is listening:

❯ netstat -lntp | grep 11434

tcp6 0 0 :::11434 :::* LISTEN -

After the downloads complete, your local system should have all the models, like this:

❯ ollama list

NAME ID SIZE MODIFIED

mxbai-embed-large:latest 468836162de7 669 MB 5 hours ago

sciphi/triplex:latest aeee13bf7e2e 2.4 GB 5 hours ago

mistral:instruct 2ae6f6dd7a3d 4.1 GB 5 hours ago

gemma2:latest ff02c3702f32 5.4 GB 6 hours ago

nomic-embed-text:latest 0a109f422b47 274 MB 6 hours ago

llama3:latest 365c0bd3c000 4.7 GB 6 hours ago

3. Clone the R2R Git Repo and Install

git clone https://github.com/SciPhi-AI/R2R.git

cd R2R

pip install .

4. Update the R2R Docker Image with Local Embedding Support

Open the `Dockerfile` in an editor and insert the line `RUN pip install sentence-transformers && pip install einops` before `COPY r2r /app/r2r`.

This step installs the sentence-transformers and einops libraries into the container, fixing an error complaining that the sentence-transformers library is not available in the out-of-the-box image.
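To confirm that a rebuilt image really contains these libraries, a quick check like this sketch can be run with the container's Python interpreter. It uses only `importlib.util.find_spec`; note that the pip package `sentence-transformers` is imported as `sentence_transformers`. The names `REQUIRED` and `missing_modules` are illustrative.

```python
import importlib.util

# Import names of the packages added to the Dockerfile above.
REQUIRED = ("sentence_transformers", "einops")


def missing_modules(names: tuple[str, ...] = REQUIRED) -> list[str]:
    """Return the top-level modules that cannot be found on this interpreter's path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    print("missing modules:", ", ".join(missing) if missing else "none")
```

An even quicker variant is `docker run --rm r2r:v1 python -c "import sentence_transformers, einops"`, which exits with an error if either import fails.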

See the full `Dockerfile`:

FROM python:3.10-slim AS builder

# Install system dependencies

RUN apt-get update && apt-get install -y --no-install-recommends \

gcc g++ musl-dev curl libffi-dev gfortran libopenblas-dev \

&& apt-get clean && rm -rf /var/lib/apt/lists/*

WORKDIR /app

RUN pip install --no-cache-dir poetry

# Copy the dependencies files

COPY pyproject.toml poetry.lock* ./

# Install the dependencies, including gunicorn and uvicorn

RUN poetry config virtualenvs.create false \

&& poetry install --no-dev --no-root \

&& pip install --no-cache-dir gunicorn uvicorn

# Create the final image

FROM python:3.10-slim

WORKDIR /app

# Copy the installed packages from the builder

COPY --from=builder /usr/local/lib/python3.10/site-packages /usr/local/lib/python3.10/site-packages

COPY --from=builder /usr/local/bin /usr/local/bin

# <<<NEW LINE ADDED>>>

RUN pip install sentence-transformers && pip install einops

# Copy the application and config

COPY r2r /app/r2r

COPY config.json /app/config.json

# Expose the port

EXPOSE 8000

# Run the application

CMD ["uvicorn", "r2r.main.app_entry:app", "--host", "0.0.0.0", "--port", "8000"]

R2R follows a modular design for its LLM, knowledge graph, text embedding, and vector store components, which gives us the flexibility to select our preferred provider for each. However, Neo4j is currently the only supported knowledge graph provider.
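To see this modular design in practice, the minimal sketch below reads an R2R config file and reports which provider, and which model, each section selects. It only assumes the layout of the `config.json` shown in step 5 (top-level sections carrying a `provider` key); the helper names are hypothetical and not part of R2R.

```python
import json
from pathlib import Path


def load_config(path: str) -> dict:
    return json.loads(Path(path).read_text())


def provider_summary(config: dict) -> dict[str, str]:
    """Map each top-level section that selects a provider to that provider."""
    return {
        section: settings["provider"]
        for section, settings in config.items()
        if isinstance(settings, dict) and "provider" in settings
    }


def model_summary(config: dict) -> dict[str, str]:
    """Collect the model names at the key paths used by this article's config.json."""
    paths = {
        "completions": ("completions", "generation_config", "model"),
        "embedding": ("embedding", "base_model"),
        "kg_extraction": ("kg", "kg_extraction_config", "model"),
    }
    found = {}
    for label, keys in paths.items():
        node = config
        for key in keys:
            # Walk down the nested dicts; a missing level yields None.
            node = node.get(key) if isinstance(node, dict) else None
        if isinstance(node, str):
            found[label] = node
    return found
```

For example, `print(provider_summary(load_config("config.json")))` should list litellm, sentence-transformers, neo4j, and pgvector for the configuration in step 5.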

5. Update `config.json`

Here we configure the following (see below for details):

LLM Provider: local Ollama serves LLM chat completions with the Llama 3 model (`ollama/llama3`).

"completions": {

"provider": "litellm",

"generation_config": {

"model": "ollama/llama3",

"temperature": 0.1,

"top_p": 1.0,

"top_k": 100,

"max_tokens_to_sample": 1024,

"stream": false,

"functions": null,

"skip_special_tokens": false,

"stop_token": null,

"num_beams": 1,

"do_sample": true,

"generate_with_chat": false,

"add_generation_kwargs": {},

"api_base": "http://localhost:11434"

}

},

Embedding Provider: the Hugging Face sentence-transformers model `mixedbread-ai/mxbai-embed-large-v1` with `base_dimension` set to 768, plus the `jinaai/jina-reranker-v1-turbo-en` rerank model.

"embedding": {

"provider": "sentence-transformers",

"base_model": "mixedbread-ai/mxbai-embed-large-v1",

"base_dimension": 768,

"rerank_model": "jinaai/jina-reranker-v1-turbo-en",

"rerank_dimension": 384,

"rerank_transformer_type": "CrossEncoder",

"batch_size": 32,

"text_splitter": {

"type": "recursive_character",

"chunk_size": 512,

"chunk_overlap": 20

}

},

Here's the full list of available text embedding models:

TextEmbeddingModel = Literal[

"all-mpnet-base-v2",

"multi-qa-mpnet-base-dot-v1",

"all-distilroberta-v1",

"all-MiniLM-L12-v2",

"multi-qa-distilbert-cos-v1",

"mixedbread-ai/mxbai-embed-large-v1",

"multi-qa-MiniLM-L6-cos-v1",

"paraphrase-multilingual-mpnet-base-v2",

"paraphrase-albert-small-v2",

"paraphrase-multilingual-MiniLM-L12-v2",

"paraphrase-MiniLM-L3-v2",

"distiluse-base-multilingual-cased-v1",

"distiluse-base-multilingual-cased-v2",

]

Knowledge Graph Provider: Neo4j, with the Triplex model served by Ollama (`ollama/sciphi/triplex`) for knowledge extraction.

"kg": {

"provider": "neo4j",

"batch_size": 1,

"text_splitter": {

"type": "recursive_character",

"chunk_size": 1024,

"chunk_overlap": 0

},

"kg_extraction_prompt": "zero_shot_ner_kg_extraction",

"kg_extraction_config": {

"model": "ollama/sciphi/triplex",

"temperature": 0.1,

"top_p": 1.0,

"top_k": 100,

"max_tokens_to_sample": 1024,

"stream": false,

"functions": null,

"skip_special_tokens": false,

"stop_token": null,

"num_beams": 1,

"do_sample": true,

"generate_with_chat": false,

"add_generation_kwargs": {},

"api_base": null

}

},

Vector Store: pgvector.

"vector_database": {

"provider": "pgvector",

"collection_name": "demo2vecs",

"base_dimension": 8192

}

Here's the full `config.json` file:

{

"app": {

"max_logs_per_request": 100,

"max_file_size_in_mb": 32

},

"completions": {

"provider": "litellm",

"generation_config": {

"model": "ollama/llama3",

"temperature": 0.1,

"top_p": 1.0,

"top_k": 100,

"max_tokens_to_sample": 1024,

"stream": false,

"functions": null,

"skip_special_tokens": false,

"stop_token": null,

"num_beams": 1,

"do_sample": true,

"generate_with_chat": false,

"add_generation_kwargs": {},

"api_base": "http://localhost:11434"

}

},

"embedding": {

"provider": "sentence-transformers",

"base_model": "mixedbread-ai/mxbai-embed-large-v1",

"base_dimension": 768,

"rerank_model": "jinaai/jina-reranker-v1-turbo-en",

"rerank_dimension": 384,

"rerank_transformer_type": "CrossEncoder",

"batch_size": 32,

"text_splitter": {

"type": "recursive_character",

"chunk_size": 512,

"chunk_overlap": 20

}

},

"kg": {

"provider": "neo4j",

"batch_size": 1,

"text_splitter": {

"type": "recursive_character",

"chunk_size": 1024,

"chunk_overlap": 0

},

"kg_extraction_prompt": "zero_shot_ner_kg_extraction",

"kg_extraction_config": {

"model": "ollama/sciphi/triplex",

"temperature": 0.1,

"top_p": 1.0,

"top_k": 100,

"max_tokens_to_sample": 1024,

"stream": false,

"functions": null,

"skip_special_tokens": false,

"stop_token": null,

"num_beams": 1,

"do_sample": true,

"generate_with_chat": false,

"add_generation_kwargs": {},

"api_base": null

}

},

"eval": {

"provider": "None"

},

"ingestion": {

"excluded_parsers": [

"mp4"

]

},

"logging": {

"provider": "local",

"log_table": "logs",

"log_info_table": "log_info"

},

"prompt": {

"provider": "local"

},

"vector_database": {

"provider": "pgvector",

"collection_name": "demo2vecs",

"base_dimension": 8192

}

}

6. Build the r2r:v1 Image Locally

Next, run a Docker build by issuing this command:

docker build -t r2r:v1 .

The build may take a couple of minutes to complete. On completion, you should see the image listed like below:

❯ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

r2r v1 595ce893e018 6 hours ago 8.65GB

7. Customize compose.yaml to Include Neo4j

To keep things easy to manage, let's incorporate Neo4j into a single Docker Compose file. Here's the full `compose.yaml` file:

networks:

r2r-network:

name: r2r-network

driver: bridge

attachable: true

ipam:

driver: default

config:

- subnet: 172.28.0.0/16

labels:

- "com.docker.compose.recreate=always"

services:

neo4j:

image: neo4j:5.21.0

ports:

- "7474:7474" # HTTP

- "7687:7687" # Bolt

environment:

- NEO4J_AUTH=${NEO4J_AUTH:-neo4j/ineedastrongerpassword}

- NEO4J_dbms_memory_pagecache_size=${NEO4J_PAGECACHE_SIZE:-512M}

- NEO4J_dbms_memory_heap_max__size=${NEO4J_HEAP_SIZE:-512M}

- NEO4J_apoc_export_file_enabled=true

- NEO4J_apoc_import_file_enabled=true

- NEO4J_apoc_import_file_use__neo4j__config=true

- NEO4JLABS_PLUGINS=["apoc"]

- NEO4J_dbms_security_procedures_unrestricted=apoc.*

- NEO4J_dbms_security_procedures_allowlist=apoc.*

volumes:

- neo4j_data:/data

- neo4j_logs:/logs

- neo4j_plugins:/plugins

networks:

- r2r-network

healthcheck:

test: ["CMD", "neo4j", "status"]

interval: 10s

timeout: 5s

retries: 5

r2r:

image: r2r:v1

build: .

ports:

- "8000:8000"

environment:

- POSTGRES_USER=${POSTGRES_USER:-postgres}

- POSTGRES_PASSWORD=${POSTGRES_PASSWORD:-postgres}

- POSTGRES_HOST=postgres

- POSTGRES_PORT=5432

- POSTGRES_DBNAME=${POSTGRES_DBNAME:-postgres}

- POSTGRES_VECS_COLLECTION=${POSTGRES_VECS_COLLECTION:-${CONFIG_NAME:-vecs}}

- NEO4J_USER=${NEO4J_USER:-neo4j}

- NEO4J_PASSWORD=${NEO4J_PASSWORD:-ineedastrongerpassword}

- NEO4J_URL=${NEO4J_URL:-bolt://neo4j:7687}

- NEO4J_DATABASE=${NEO4J_DATABASE:-neo4j}

- OPENAI_API_KEY=${OPENAI_API_KEY:-ollama}

- OLLAMA_API_BASE=${OLLAMA_API_BASE:-http://host.docker.internal:11434}

- CONFIG_NAME=${CONFIG_NAME:-}

- CONFIG_PATH=${CONFIG_PATH:-}

- CLIENT_MODE=${CLIENT_MODE:-false}

depends_on:

- postgres

networks:

- r2r-network

healthcheck:

test: ["CMD", "curl", "-f", "http://localhost:8000/v1/health"]

interval: 10s

timeout: 5s

retries: 5

restart: on-failure

volumes:

- ${CONFIG_PATH:-/}:${CONFIG_PATH:-/app/config}

labels:

- "traefik.enable=true"

- "traefik.http.routers.r2r.rule=PathPrefix(`/api`)"

- "traefik.http.services.r2r.loadbalancer.server.port=8000"

- "traefik.http.middlewares.r2r-strip-prefix.stripprefix.prefixes=/api"

- "traefik.http.middlewares.r2r-add-v1.addprefix.prefix=/v1"

- "traefik.http.routers.r2r.middlewares=r2r-strip-prefix,r2r-add-v1,r2r-headers"

- "traefik.http.middlewares.r2r-headers.headers.customrequestheaders.Access-Control-Allow-Origin=*"

- "traefik.http.middlewares.r2r-headers.headers.customrequestheaders.Access-Control-Allow-Methods=GET,POST,OPTIONS"

- "traefik.http.middlewares.r2r-headers.headers.customrequestheaders.Access-Control-Allow-Headers=DNT,User-Agent,X-Requested-With,If-Modified-Since,Cache-Control,Content-Type,Range,Authorization"

- "traefik.http.middlewares.r2r-headers.headers.customresponseheaders.Access-Control-Expose-Headers=Content-Length,Content-Range"

r2r-dashboard:

image: emrgntcmplxty/r2r-dashboard:latest

environment:

- NEXT_PUBLIC_API_URL=http://traefik:80/api

depends_on:

- r2r

networks:

- r2r-network

labels:

- "traefik.enable=true"

- "traefik.http.routers.dashboard.rule=PathPrefix(`/`)"

- "traefik.http.services.dashboard.loadbalancer.server.port=3000"

postgres:

image: pgvector/pgvector:pg16

environment:

POSTGRES_USER: ${POSTGRES_USER:-postgres}

POSTGRES_PASSWORD: ${POSTGRES_PASSWORD:-postgres}

POSTGRES_DB: ${POSTGRES_DBNAME:-postgres}

volumes:

- postgres_data:/var/lib/postgresql/data

networks:

- r2r-network

healthcheck:

test: ["CMD-SHELL", "pg_isready -U ${POSTGRES_USER:-postgres}"]

interval: 5s

timeout: 5s

retries: 5

restart: on-failure

traefik:

image: traefik:v2.9

command:

- "--api.insecure=true"

- "--providers.docker=true"

- "--providers.docker.exposedbydefault=false"

- "--entrypoints.web.address=:80"

- "--accesslog=true"

- "--accesslog.filepath=/var/log/traefik/access.log"

ports:

- "80:80"

- "8080:8080" # Traefik dashboard

volumes:

- /var/run/docker.sock:/var/run/docker.sock:ro

networks:

- r2r-network

volumes:

postgres_data:

neo4j_data:

neo4j_logs:

neo4j_plugins:

Note:

If you run into issues with the default Ollama Docker host resolution (on Linux, `host.docker.internal` does not resolve inside containers by default), you can fix this by entering your host IP directly.

Before

- OLLAMA_API_BASE=${OLLAMA_API_BASE:-http://host.docker.internal:11434}

After

- OLLAMA_API_BASE=${OLLAMA_API_BASE:-http://192.168.122.152:11434}

8. Launch the Docker Compose Services

export CONFIG_PATH=/my/graphrag/R2R/config.json

export POSTGRES_VECS_COLLECTION=demo2vecs

export TELEMETRY_ENABLED=false

docker compose up -d

## or `docker-compose up -d`, depending on your Docker version

## this loads the services defined in compose.yaml in the R2R directory

After a successful startup, the neo4j, r2r, r2r-dashboard, postgres (pgvector), and traefik services should be up and running:

❯ docker compose ps

NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS

r2r-neo4j-1 neo4j:5.21.0 "tini -g -- /startup…" neo4j 6 hours ago Up 6 hours (healthy) 0.0.0.0:7474->7474/tcp, :::7474->7474/tcp, 7473/tcp, 0.0.0.0:7687->7687/tcp, :::7687->7687/tcp

r2r-postgres-1 pgvector/pgvector:pg16 "docker-entrypoint.s…" postgres 6 hours ago Up 6 hours (healthy) 5432/tcp

r2r-r2r-1 r2r:v1 "uvicorn r2r.main.ap…" r2r 6 hours ago Up 6 hours (unhealthy) 0.0.0.0:8000->8000/tcp, :::8000->8000/tcp

r2r-r2r-dashboard-1 emrgntcmplxty/r2r-dashboard:latest "docker-entrypoint.s…" r2r-dashboard 6 hours ago Up 6 hours 3000/tcp

r2r-traefik-1 traefik:v2.9 "/entrypoint.sh --ap…" traefik 6 hours ago Up 6 hours 0.0.0.0:80->80/tcp, :::80->80/tcp, 0.0.0.0:8080->8080/tcp, :::8080->8080/tcp

Let's quickly look at each service:

traefik, listening on ports 80 and 8080: the inbound proxy for the UI and API services (port 8080 serves Traefik's own dashboard).

r2r-dashboard, listening on port 3000 inside the Docker network and served through traefik on port 80: the playground web user interface.

postgres (pgvector), listening on port 5432 inside the Docker network: the PostgreSQL vector database.

r2r, listening on port 8000: the API endpoint we use to ingest and query data.

neo4j, listening on ports 7474 (HTTP) and 7687 (Bolt): the graph database. You can access its web UI at `http://<local-PC-IP>:7474`; the default login is username `neo4j`, password `ineedastrongerpassword`.
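To confirm the host-published ports in one go, here is a small sketch using Python's standard `socket` module. The port list mirrors the `ports:` entries in the compose file above; PostgreSQL is left out because its port 5432 is only exposed inside the Docker network. `SERVICES` and the helper names are illustrative.

```python
import socket

# Host-published ports from compose.yaml (postgres 5432 stays inside the Docker network).
SERVICES = {
    "traefik proxy / dashboard UI": 80,
    "traefik dashboard": 8080,
    "r2r API": 8000,
    "neo4j HTTP": 7474,
    "neo4j Bolt": 7687,
}


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def check_services(host: str = "localhost", services: dict[str, int] = SERVICES) -> dict[str, bool]:
    return {name: is_listening(host, port) for name, port in services.items()}
```

For example, `print(check_services())` on the Docker host should report `True` for every entry once `docker compose ps` shows the services as up.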

Now we are ready to ingest data and perform queries.

9. Data Ingestion

Ingesting data from the command line is easiest with the `r2r` CLI:

❯ r2r ingest-files book.txt

Time taken to ingest files: 775.69 seconds

{'processed_documents': ["Document 'book.txt' processed successfully."], 'failed_documents': [], 'skipped_documents': []}

Alternatively, ingest data via the REST API endpoint `/v1/ingest_files`. See the R2R API documentation for more details.
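As a rough sketch of the REST route, the standard-library code below uploads one file to `/v1/ingest_files` as `multipart/form-data`. Two things are assumptions rather than confirmed R2R API details: that the endpoint reads the upload from a form field named `files`, and that it answers with JSON. Check the API docs for your R2R version before relying on it.

```python
import json
import urllib.request
import uuid
from pathlib import Path


def build_multipart(field: str, filename: str, data: bytes,
                    content_type: str = "text/plain") -> tuple[bytes, str]:
    """Encode a single file as a multipart/form-data body (standard library only)."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        f"Content-Type: {content_type}\r\n\r\n"
    ).encode()
    tail = f"\r\n--{boundary}--\r\n".encode()
    return head + data + tail, f"multipart/form-data; boundary={boundary}"


def ingest_file(path: str, base_url: str = "http://localhost:8000") -> dict:
    """POST one file to R2R's /v1/ingest_files endpoint.

    Assumption: the endpoint accepts the upload in a form field named "files".
    """
    file = Path(path)
    body, content_type = build_multipart("files", file.name, file.read_bytes())
    request = urllib.request.Request(
        f"{base_url}/v1/ingest_files",
        data=body,
        method="POST",
        headers={"Content-Type": content_type},
    )
    # Ingestion with KG extraction is slow (the CLI run above took almost 13 minutes).
    with urllib.request.urlopen(request, timeout=1800) as response:
        return json.loads(response.read())
```

Usage would be `print(ingest_file("book.txt"))` from the directory holding the downloaded book.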

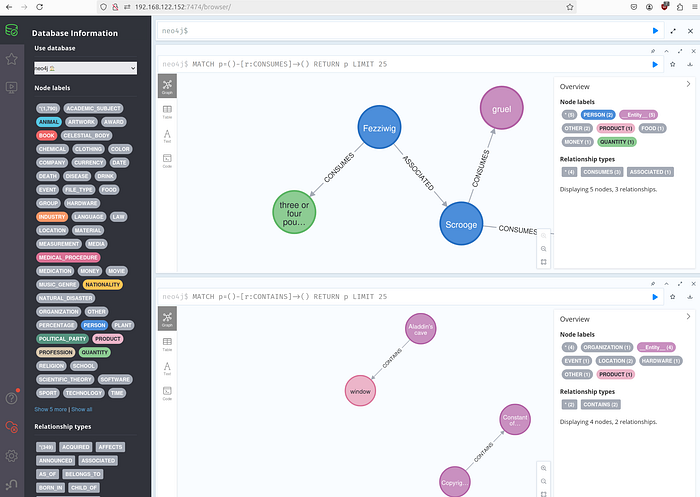

Right after ingestion, we can check the data in Neo4j by logging in to its web UI (see screenshot).
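Besides the web UI, the graph can be inspected through Neo4j's HTTP transactional endpoint (`/db/<database>/tx/commit`). The sketch below uses only the standard library and the default credentials from the compose file. The Cypher query simply counts nodes per label, because the exact labels R2R creates are not shown in this article; `cypher_request`, `run_cypher`, and `LABEL_COUNTS` are illustrative names.

```python
import base64
import json
import urllib.request

# Count nodes per label; adjust once you know which labels R2R wrote.
LABEL_COUNTS = "MATCH (n) RETURN labels(n) AS labels, count(*) AS count ORDER BY count DESC LIMIT 10"


def cypher_request(statement: str, user: str = "neo4j",
                   password: str = "ineedastrongerpassword",
                   base_url: str = "http://localhost:7474",
                   database: str = "neo4j") -> urllib.request.Request:
    """Build a POST to Neo4j's transactional HTTP endpoint with basic auth."""
    payload = json.dumps({"statements": [{"statement": statement}]}).encode()
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"{base_url}/db/{database}/tx/commit",
        data=payload,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


def run_cypher(statement: str, **kwargs) -> dict:
    with urllib.request.urlopen(cypher_request(statement, **kwargs), timeout=30) as resp:
        return json.loads(resp.read())
```

The rows of the first statement can then be read with `[d["row"] for d in run_cypher(LABEL_COUNTS)["results"][0]["data"]]`.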

10. Query Data

To perform a query from the command line:

❯ r2r search --query="Who is Scrooge, and what are his main relationships?"

Vector search results:

{'id': 'b7000e91-ef36-5696-bb78-39ef11068354', 'score': 0.40468934178352356, 'metadata': {'text': "Ghost of Jacob Marley, a spectre of Scrooge's former partner in business.\r\n Joe, a marine-store dealer and receiver of stolen goods.\r\n Ebenezer Scrooge, a grasping, covetous old man, the surviving partner\r\n of the firm of Scrooge and Marley.\r\n Mr. Topper, a bachelor.\r\n Dick Wilkins, a fellow apprentice of Scrooge's.\r\n\r\n Belle, a comely matron, an old sweetheart of Scrooge's.\r\n Caroline, wife of one of Scrooge's debtors.\r\n Mrs. Cratchit, wife of Bob Cratchit.", 'title': 'book.txt', 'version': 'v0', 'chunk_order': 5, 'document_id': '7b13d958-c4b1-51c6-a355-344c5779653f', 'extraction_id': '1bce8eee-c536-571b-9333-6c0fff9965b5', 'associatedQuery': 'Who is Scrooge, and what are his main relationships?'}}

{'id': '422b31dc-0f81-52c0-83ec-acc79a280f56', 'score': 0.38365474343299866, 'metadata': {'text': 'known as Scrooge and Marley. Sometimes people new to the business called\r\nScrooge Scrooge, and sometimes Marley, but he answered to both names. It\r\nwas all the same to him.\r\n\r\nOh! but he was a tight-fisted hand at the grindstone, Scrooge! a\r\nsqueezing, wrenching, grasping, scraping, clutching, covetous old\r\nsinner! Hard and sharp as flint, from which no steel had ever struck out\r\ngenerous fire; secret, and self-contained, and solitary as an oyster.', 'title': 'book.txt', 'version': 'v0', 'chunk_order': 19, 'document_id': '7b13d958-c4b1-51c6-a355-344c5779653f', 'extraction_id': '1bce8eee-c536-571b-9333-6c0fff9965b5', 'associatedQuery': 'Who is Scrooge, and what are his main relationships?'}}

{'id': 'ba95fdc8-2bfb-5d50-823b-a6aabc02c893', 'score': 0.37344086170196533, 'metadata': {'text': "Scrooge knew the men, and looked towards the Spirit for an explanation.\r\n\r\nThe phantom glided on into a street. Its finger pointed to two persons\r\nmeeting. Scrooge listened again, thinking that the explanation might lie\r\nhere.\r\n\r\nHe knew these men, also, perfectly. They were men of business: very\r\nwealthy, and of great importance. He had made a point always of standing\r\nwell in their esteem in a business point of view, that is; strictly in a\r\nbusiness point of view.\r\n\r\n'How are you?' said one.", 'title': 'book.txt', 'version': 'v0', 'chunk_order': 273, 'document_id': '7b13d958-c4b1-51c6-a355-344c5779653f', 'extraction_id': '1bce8eee-c536-571b-9333-6c0fff9965b5', 'associatedQuery': 'Who is Scrooge, and what are his main relationships?'}}

{'id': '2427ea6a-2a1e-5572-a410-c3226ca816fe', 'score': 0.3375958204269409, 'metadata': {'text': 'your salary, and endeavour to assist your struggling family, and we will\r\ndiscuss your affairs this very afternoon, over a Christmas bowl of\r\nsmoking bishop, Bob! Make up the fires and buy another coal-scuttle\r\nbefore you dot another i, Bob Cratchit!\'\r\n\r\n[Illustration: _"Now, I\'ll tell you what, my friend," said Scrooge. "I\r\nam not going to stand this sort of thing any longer."_]\r\n\r\nScrooge was better than his word. He did it all, and infinitely more;', 'title': 'book.txt', 'version': 'v0', 'chunk_order': 350, 'document_id': '7b13d958-c4b1-51c6-a355-344c5779653f', 'extraction_id': '1bce8eee-c536-571b-9333-6c0fff9965b5', 'associatedQuery': 'Who is Scrooge, and what are his main relationships?'}}

{'id': '3412ea16-94bc-508d-8486-0f11d63c878c', 'score': 0.30906787514686584, 'metadata': {'text': "unhallowed hands shall not disturb it, or the country's done for. You\r\nwill, therefore, permit me to repeat, emphatically, that Marley was as\r\ndead as a door-nail.\r\n\r\nScrooge knew he was dead? Of course he did. How could it be otherwise?\r\nScrooge and he were partners for I don't know how many years. Scrooge\r\nwas his sole executor, his sole administrator, his sole assign, his sole\r\nresiduary legatee, his sole friend, and sole mourner. And even Scrooge", 'title': 'book.txt', 'version': 'v0', 'chunk_order': 16, 'document_id': '7b13d958-c4b1-51c6-a355-344c5779653f', 'extraction_id': '1bce8eee-c536-571b-9333-6c0fff9965b5', 'associatedQuery': 'Who is Scrooge, and what are his main relationships?'}}

{'id': '6f3fdade-5026-5a9c-b790-0766a67d13e8', 'score': 0.30757007002830505, 'metadata': {'text': 'During the whole of this time Scrooge had acted like a man out of his\r\nwits. His heart and soul were in the scene, and with his former self. He\r\ncorroborated everything, remembered everything, enjoyed everything, and\r\nunderwent the strangest agitation. It was not until now, when the bright\r\nfaces of his former self and Dick were turned from them, that he\r\nremembered the Ghost, and became conscious that it was looking full upon\r\nhim, while the light upon its head burnt very clear.', 'title': 'book.txt', 'version': 'v0', 'chunk_order': 142, 'document_id': '7b13d958-c4b1-51c6-a355-344c5779653f', 'extraction_id': '1bce8eee-c536-571b-9333-6c0fff9965b5', 'associatedQuery': 'Who is Scrooge, and what are his main relationships?'}}

{'id': 'd073c8d1-2724-5325-8e1e-dc737d3b8a2c', 'score': 0.29709339141845703, 'metadata': {'text': "to think how this old gentleman would look upon him when they met; but\r\nhe knew what path lay straight before him, and he took it.\r\n\r\n'My dear sir,' said Scrooge, quickening his pace, and taking the old\r\ngentleman by both his hands, 'how do you do? I hope you succeeded\r\nyesterday. It was very kind of you. A merry Christmas to you, sir!'\r\n\r\n'Mr. Scrooge?'\r\n\r\n'Yes,' said Scrooge. 'That is my name, and I fear it may not be pleasant\r\nto you. Allow me to ask your pardon. And will you have the goodness----'", 'title': 'book.txt', 'version': 'v0', 'chunk_order': 340, 'document_id': '7b13d958-c4b1-51c6-a355-344c5779653f', 'extraction_id': '1bce8eee-c536-571b-9333-6c0fff9965b5', 'associatedQuery': 'Who is Scrooge, and what are his main relationships?'}}

{'id': '3f264d65-6902-57bf-adbb-172ed2a760b6', 'score': 0.2940909266471863, 'metadata': {'text': 'CHARACTERS\r\n\r\n Bob Cratchit, clerk to Ebenezer Scrooge.\r\n Peter Cratchit, a son of the preceding.\r\n Tim Cratchit ("Tiny Tim"), a cripple, youngest son of Bob Cratchit.\r\n Mr. Fezziwig, a kind-hearted, jovial old merchant.\r\n Fred, Scrooge\'s nephew.\r\n Ghost of Christmas Past, a phantom showing things past.\r\n Ghost of Christmas Present, a spirit of a kind, generous,\r\n and hearty nature.\r\n Ghost of Christmas Yet to Come, an apparition showing the shadows\r\n of things which yet may happen.', 'title': 'book.txt', 'version': 'v0', 'chunk_order': 4, 'document_id': '7b13d958-c4b1-51c6-a355-344c5779653f', 'extraction_id': '1bce8eee-c536-571b-9333-6c0fff9965b5', 'associatedQuery': 'Who is Scrooge, and what are his main relationships?'}}

{'id': 'ea996f46-03b7-53ba-93a7-421df4e66438', 'score': 0.23910699784755707, 'metadata': {'text': "'Don't be angry, uncle. Come! Dine with us to-morrow.'\r\n\r\nScrooge said that he would see him----Yes, indeed he did. He went the\r\nwhole length of the expression, and said that he would see him in that\r\nextremity first.\r\n\r\n'But why?' cried Scrooge's nephew. 'Why?'\r\n\r\n'Why did you get married?' said Scrooge.\r\n\r\n'Because I fell in love.'\r\n\r\n'Because you fell in love!' growled Scrooge, as if that were the only\r\none thing in the world more ridiculous than a merry Christmas. 'Good\r\nafternoon!'", 'title': 'book.txt', 'version': 'v0', 'chunk_order': 33, 'document_id': '7b13d958-c4b1-51c6-a355-344c5779653f', 'extraction_id': '1bce8eee-c536-571b-9333-6c0fff9965b5', 'associatedQuery': 'Who is Scrooge, and what are his main relationships?'}}

{'id': '0f5e7e3d-2f7a-52af-a8d9-9e92702dae28', 'score': 0.21803855895996094, 'metadata': {'text': "afternoon!'\r\n\r\n'Nay, uncle, but you never came to see me before that happened. Why give\r\nit as a reason for not coming now?'\r\n\r\n'Good afternoon,' said Scrooge.\r\n\r\n'I want nothing from you; I ask nothing of you; why cannot we be\r\nfriends?'\r\n\r\n'Good afternoon!' said Scrooge.\r\n\r\n'I am sorry, with all my heart, to find you so resolute. We have never\r\nhad any quarrel to which I have been a party. But I have made the trial\r\nin homage to Christmas, and I'll keep my Christmas humour to the last.", 'title': 'book.txt', 'version': 'v0', 'chunk_order': 34, 'document_id': '7b13d958-c4b1-51c6-a355-344c5779653f', 'extraction_id': '1bce8eee-c536-571b-9333-6c0fff9965b5', 'associatedQuery': 'Who is Scrooge, and what are his main relationships?'}}

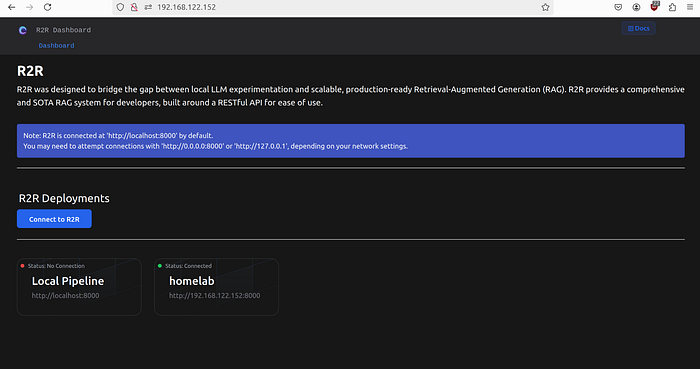

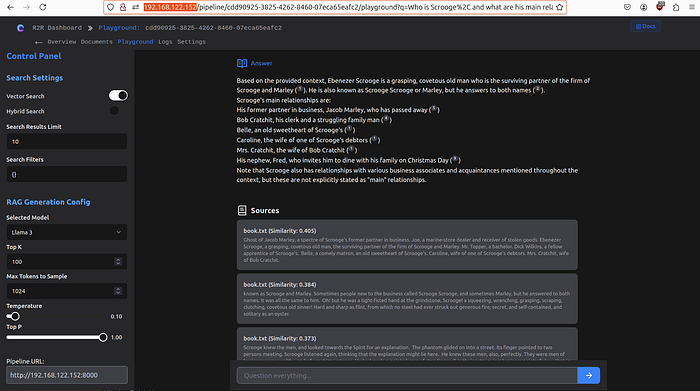

Time taken to search: 0.19 seconds

The same query can also be performed in the dashboard at `http://<local-PC-IP>`: click to connect to the default pipeline, or define your own pipeline connection. In my case, I created a pipeline called homelab pointing to the r2r container on port 8000.

Click the pipeline to launch it, then open the playground to perform a query.

UI query: "Who is Scrooge, and what are his main relationships?"

The UI search is responsive even when running locally; see the result below:

Based on the provided context, Ebenezer Scrooge is a grasping, covetous old man who is the surviving partner of the firm of Scrooge and Marley (1). He is also known as Scrooge Scrooge or Marley, and he answers to both names (2).

Scrooge's main relationships mentioned in the context are:

His former partner in business, Jacob Marley, who has passed away (3, 5)

Bob Cratchit, his clerk, whom he treats poorly and is concerned about providing for his family (4)

His nephew, Fred, with whom he has a strained relationship due to Scrooge's disapproval of his marriage (9)

Belle, an old sweetheart of Scrooge's who rejected him in the past (1)

Caroline, wife of one of Scrooge's debtors, and Mrs. Cratchit, wife of Bob Cratchit, whom he is concerned about providing for their families (1)

These relationships are significant to understanding Scrooge's character and his transformation throughout the story.

On top of the response, the UI result includes the source text chunks from which the response information was extracted.

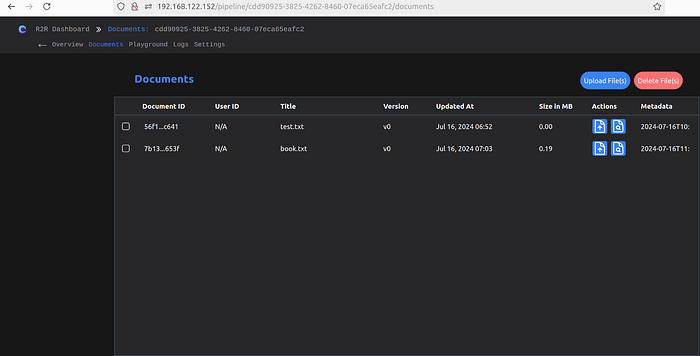
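The numbers in parentheses in the answer appear to be 1-based positions in the search results list: (1) matches the chunk listing Belle, Caroline, and Mrs. Cratchit, and (9) matches the chunk where Scrooge argues with his nephew about his marriage. Assuming that holds, a small sketch can map an answer's citations back to their source chunks; `cited_indices` and `cited_sources` are hypothetical helpers, not R2R functions.

```python
import re

# Matches "(1)" as well as grouped citations such as "(3, 5)".
CITATION = re.compile(r"\((\d+(?:\s*,\s*\d+)*)\)")


def cited_indices(answer: str) -> list[int]:
    """Return citation numbers in order of first appearance, without duplicates."""
    seen: list[int] = []
    for group in CITATION.findall(answer):
        for num in group.split(","):
            n = int(num)  # int() tolerates the surrounding spaces
            if n not in seen:
                seen.append(n)
    return seen


def cited_sources(answer: str, results: list[dict]) -> dict[int, dict]:
    """Map each in-range 1-based citation to its search result."""
    return {n: results[n - 1] for n in cited_indices(answer) if 1 <= n <= len(results)}
```

Applied to the answer above with the ten CLI search results, citation 9 would resolve to the result with `chunk_order` 33.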

The dashboard also has a document management interface, which is handy for viewing and updating the ingested documents.

Troubleshooting

Updating config.json values after building docker image

The Docker image embeds config.json at build time, as this part of the Dockerfile shows:

# Copy the application and config

COPY r2r /app/r2r

COPY config.json /app/config.json

To pick up changes in config.json, rebuild the Docker image as follows:

docker build -t r2r:v1 .

A better approach is to externalize config.json by mounting it as a volume in compose.yaml:

volumes:

- ${CONFIG_PATH:-/}:${CONFIG_PATH:-/app/config}

Error: File ingestion failed with error 500

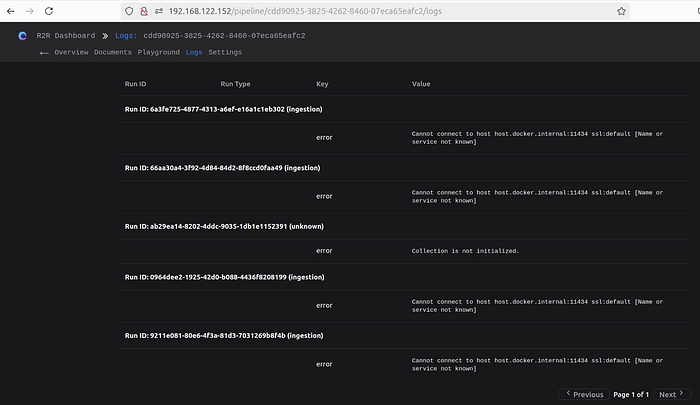

First, get the error log. You can check it from the dashboard UI's Logs tab to see what caused the failure, then take appropriate action. See the screenshot below:

Alternatively, you can check the logs table directly inside the R2R container. This assumes `config.json` uses the local logging provider and the `logs` table:

"logging": {

"provider": "local",

"log_table": "logs",

"log_info_table": "log_info"

},

Step 1: get the Docker container ID:

❯ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

77355b89b84a emrgntcmplxty/r2r-dashboard:latest "docker-entrypoint.s…" 10 minutes ago Up 10 minutes 3000/tcp r2r-r2r-dashboard-1

715070714df1 r2r:v1 "uvicorn r2r.main.ap…" 10 minutes ago Up 10 minutes (unhealthy) 0.0.0.0:8000->8000/tcp, :::8000->8000/tcp r2r-r2r-1

bbd30057aacd pgvector/pgvector:pg16 "docker-entrypoint.s…" 10 minutes ago Up 10 minutes (healthy) 5432/tcp r2r-postgres-1

fb26373016d8 neo4j:5.21.0 "tini -g -- /startup…" 10 minutes ago Up 10 minutes (healthy) 0.0.0.0:7474->7474/tcp, :::7474->7474/tcp, 7473/tcp, 0.0.0.0:7687->7687/tcp, :::7687->7687/tcp r2r-neo4j-1

1486558121c7 traefik:v2.9 "/entrypoint.sh --ap…" 10 minutes ago Up 10 minutes 0.0.0.0:80->80/tcp, :::80->80/tcp, 0.0.0.0:8080->8080/tcp, :::8080->8080/tcp r2r-traefik-1

In this case, container ID `715070714df1` is the R2R instance.

Step 2: connect to the instance with the command below, then look for a file named `local.sqlite`; that is the log database used by the local logging provider configured in `config.json`.

❯ docker exec -it 715070714df1 bash

root@715070714df1:/app#

root@715070714df1:/app# ls -all

total 44

drwxr-xr-x 1 root root 4096 Jul 27 12:27 .

drwxr-xr-x 1 root root 4096 Jul 27 12:25 ..

drwxr-xr-x 21 root root 4096 Jun 1 19:00 config

-rw-rw-r-- 1 root root 1893 Jul 27 12:23 config.json

-rw-r--r-- 1 root root 16384 Jul 27 12:27 local.sqlite

drwxr-xr-x 1 root root 4096 Jul 16 06:07 r2r

Step 3: check the log entries using sqlite3:

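## first install sqlite3 if it is not already present (the slim base image has no sudo, and its apt lists must be refreshed first)

root@929b8f6d2134:/app# apt-get update && apt-get install -y sqlite3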

## connect to the database

root@929b8f6d2134:/app# sqlite3 local.sqlite

SQLite version 3.40.1 2022-12-28 14:03:47

Enter ".help" for usage hints.

sqlite> .tables

log_info logs

sqlite> .schema logs

CREATE TABLE logs (

timestamp DATETIME,

log_id TEXT,

key TEXT,

value TEXT

);

sqlite> select * from logs order by timestamp desc

...> ;

2024-07-27 11:49:55|66aa30a4-3f92-4d84-84d2-8f8ccd0faa49|error|Cannot connect to host host.docker.internal:11434 ssl:default [Name or service not known]

2024-07-27 11:49:55|6a3fe725-4877-4313-a6ef-e16a1c1eb302|error|Cannot connect to host host.docker.internal:11434 ssl:default [Name or service not known]

2024-07-27 11:49:00|ab29ea14-8202-4ddc-9035-1db1e1152391|error|Collection is not initialized.

2024-07-27 11:48:48|0964dee2-1925-42d0-b088-4436f8208199|error|Cannot connect to host host.docker.internal:11434 ssl:default [Name or service not known]

2024-07-27 11:33:47|9211e081-80e6-4f3a-81d3-7031269b8f4b|error|Cannot connect to host host.docker.internal:11434 ssl:default [Name or service not known]

sqlite>

Next, investigate the nature of the error. In this particular case, the error log indicates that R2R cannot reach Ollama using the internal host name. See the fix below.
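As an alternative to installing the sqlite3 CLI in the container, Python's built-in `sqlite3` module can run the same kind of query, and the R2R image (built on `python:3.10-slim`) already has Python. This sketch filters the `logs` table to `error` entries; it assumes the `logs` schema printed above, and `recent_errors` is an illustrative name.

```python
import sqlite3


def recent_errors(conn: sqlite3.Connection, limit: int = 10) -> list[tuple[str, str, str]]:
    """Return (timestamp, log_id, message) for the newest error entries in `logs`."""
    cur = conn.execute(
        # "key" is quoted because KEY is also an SQL keyword.
        'SELECT timestamp, log_id, value FROM logs WHERE "key" = ? '
        "ORDER BY timestamp DESC LIMIT ?",
        ("error", limit),
    )
    return cur.fetchall()
```

Inside the container, for example, `recent_errors(sqlite3.connect("local.sqlite"))` would return the same `Cannot connect to host ...` rows shown above.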

Error: Cannot connect to host host.docker.internal:11434 ssl:default [Name or service not known]

This error can have multiple root causes:

- The model name defined in `config.json` does not exist locally. The fix is to run `ollama pull <model>` to make sure the model is fully downloaded.

- The container cannot resolve `host.docker.internal`. The fix is to change `host.docker.internal` to the host PC's actual IP address.
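Both causes can be checked from Python with Ollama's `/api/tags` endpoint, which lists the locally installed models (the same list `ollama list` prints). Running it from inside the R2R container with `api_base` set to the value of `OLLAMA_API_BASE` tests what the container actually sees: a connection error points to the host-name problem, while a non-empty missing list points to the model problem. The required model names are taken from this article's config.json; the helper names are illustrative.

```python
import json
import urllib.request

# Ollama model names used by this article's config.json ("ollama/llama3", "ollama/sciphi/triplex").
REQUIRED_MODELS = ("llama3", "sciphi/triplex")


def fetch_tags(api_base: str = "http://localhost:11434", timeout: float = 5.0) -> dict:
    """GET /api/tags; raises urllib.error.URLError if the host cannot be resolved or reached."""
    with urllib.request.urlopen(f"{api_base}/api/tags", timeout=timeout) as resp:
        return json.loads(resp.read())


def installed_models(tags: dict) -> set[str]:
    names = set()
    for model in tags.get("models", []):
        name = model.get("name", "")
        names.add(name)
        # Ollama resolves an untagged name to ":latest", so accept both spellings.
        if name.endswith(":latest"):
            names.add(name[: -len(":latest")])
    return names


def missing_models(tags: dict, required: tuple[str, ...] = REQUIRED_MODELS) -> list[str]:
    have = installed_models(tags)
    return [m for m in required if m not in have]
```

For example, `missing_models(fetch_tags("http://192.168.122.152:11434"))` returns an empty list when the host IP is reachable and both models have been pulled.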

In Summary

After experimenting with R2R, I found it to be a very useful general RAG tool, including GraphRAG, with an easy-to-use data ingestion pipeline and search. In particular, the inclusion of the Triplex model improves GraphRAG capability and reduces cost, and R2R supports local RAG.

Compared with Microsoft GraphRAG, I feel R2R is a more complete solution. However, R2R lacks some GraphRAG algorithms, such as community detection and summary generation, which are built into the Microsoft GraphRAG framework. These features would be welcome additions to the data ingestion pipeline or the Neo4j package.

I hope you like it.

Thanks again for reading!

Have a nice day!

RELATED ARTICLES

Demystify Knowledge RAG frameworks https://mychen76.medium.com/demystify-knowledge-rag-frameworks-638b59bb3bc9

Deep Dive: Transforming Text into Knowledge Graphs with LLM https://mychen76.medium.com/deep-dive-loading-unstructured-text-into-a-knowledge-graph-using-large-language-models-llms-bfc013f7dc17

Running Graph Algorithms in Neo4J and Memgraph https://mychen76.medium.com/running-community-detection-graph-algorithms-in-neo4j-and-memgraph-5b0fe4d6c40d

REFERENCE

R2R Knowledge Graph

Microsoft GraphRAG