Sparse Categorical Cross Entropy is a loss function that is commonly used in machine learning algorithms to train classification models. It is an extension of the Cross Entropy loss function that is used for binary classification problems. The sparse categorical cross-entropy is used in cases where the output labels are represented in a sparse matrix format.

In this story, I will discuss the concept of sparse categorical cross-entropy in detail. I will start by discussing the basics of loss functions and cross-entropy, then move on to explain what sparse categorical cross-entropy is and how it works. I will also discuss the advantages and disadvantages of using sparse categorical cross-entropy and some use cases where it is commonly used.

Loss Functions

In machine learning, a "loss function" is a function that measures the difference between the predicted output and the actual output of a model. The loss function is used to train the model by adjusting the weights of the parameters in the model to minimize the loss. The aim of the loss function is to find the optimal set of weights that can predict the output accurately.

There are many different types of loss functions that can be used for different types of problems. The choice of loss function depends on the type of problem and the type of output. Some of the commonly used loss functions include Mean Squared Error (MSE), Mean Absolute Error (MAE), Binary Cross-Entropy, and Categorical Cross-Entropy.

Cross-Entropy Loss Function:

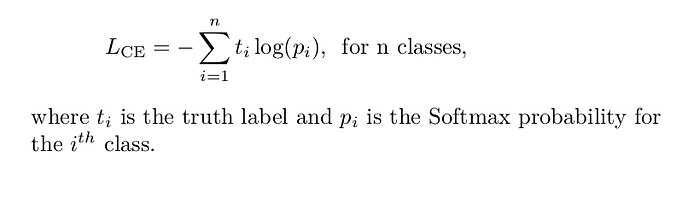

Cross-entropy is a loss function that is commonly used in classification problems. It measures the difference between the predicted probability distribution and the actual probability distribution. The cross-entropy loss function is defined as follows:

The cross-entropy loss function is used to measure how well the predicted probabilities match the actual probabilities. It is commonly used in neural networks to train models for binary classification problems.

Sparse Categorical Cross-Entropy

Sparse categorical cross-entropy is an extension of the categorical cross-entropy loss function that is used when the output labels are represented in a sparse matrix format. In a sparse matrix format, the labels are represented as a single index value rather than a one-hot encoded vector. This means that the labels are integers rather than vectors. Both categorical cross-entropy and sparse categorical cross-entropy have the same loss function as defined above. The only difference between the two is in how labels are defined.

- Categorical cross-entropy is used when we have to deal with the labels that are one-hot encoded, for example, we have the following values for 3-class classification problem [1,0,0], [0,1,0] and [0,0,1].

- In sparse categorical cross-entropy , labels are integer encoded, for example, [1], [2] and [3] for 3-class problem.

Advantages:

- Easy to interpret: The sparse categorical cross-entropy function is easy to interpret. It provides a measure of the difference between the predicted probability distribution and the true probability distribution of the classes. The function outputs a scalar value, which can be used to evaluate the performance of the model. This makes it easier for researchers and practitioners to understand the behavior of the model and make decisions based on its performance.

- Applicable to imbalanced datasets: Imbalanced datasets are a common problem in machine learning, where the number of samples in one class is significantly higher than the other classes. This can lead to bias in the model towards the majority class. Sparse categorical cross-entropy is applicable to imbalanced datasets, as it takes into account the true probability distribution of the classes. This ensures that the model is penalized for misclassifying minority classes, which can improve its performance.

- Compatible with softmax activation: The sparse categorical cross-entropy function is compatible with the softmax activation function, which is commonly used in neural networks for multiclass classification tasks. The softmax activation function transforms the output of the model into a probability distribution over the classes. This makes it easy to calculate the predicted probability distribution, which is required for the sparse categorical cross-entropy function.

- Robust to label noise: Label noise refers to errors in the labeling of the dataset, where some samples are mislabeled or have ambiguous labels. This can lead to incorrect training of the model, as it is based on incorrect labels. Sparse categorical cross-entropy is robust to label noise, as it takes into account the true probability distribution of the classes. This ensures that the model is penalized for misclassifying samples, even if they have incorrect labels.

- Versatile: Sparse categorical cross-entropy is a versatile loss function that can be used for a variety of multiclass classification tasks. It can be used in neural networks for image recognition, natural language processing, and speech recognition. This makes it a useful tool for researchers and practitioners in the field of machine learning.

Disadvantages:

- Sensitivity to outliers: Sparse categorical cross-entropy is sensitive to outliers in the dataset, which can affect its performance. Outliers are data points that are significantly different from the other data points in the dataset. These data points can have a large impact on the predicted probability distribution, which can affect the performance of the model. This can be a problem in datasets with a large number of outliers.

- Requires a large number of samples: Sparse categorical cross-entropy requires a large number of samples to train the model effectively. This is because it takes into account the true probability distribution of the classes, which requires a large number of samples to accurately estimate. This can be a problem in datasets with a small number of samples.

- Lack of regularization: Sparse categorical cross-entropy does not provide regularization, which can lead to overfitting of the model. Overfitting refers to a situation where the model is too complex, and it fits the training data too closely. This can lead to poor performance on new data. Regularization is a technique used to prevent overfitting by adding a penalty term to the loss function.